Reducing Variance Caused by Communication in Decentralized Multi-agent Deep Reinforcement Learning

Paper and Code

Feb 10, 2025

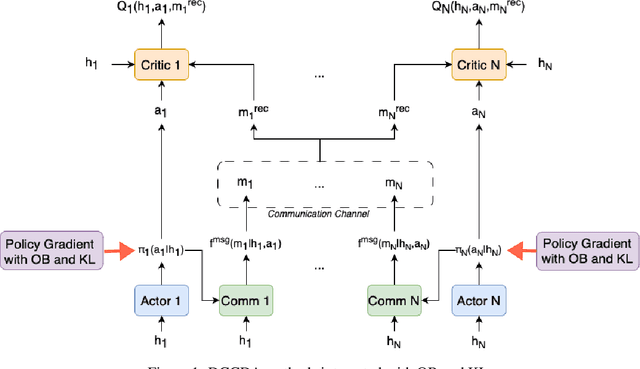

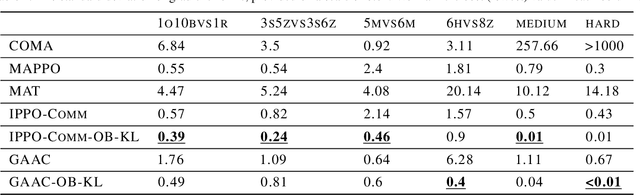

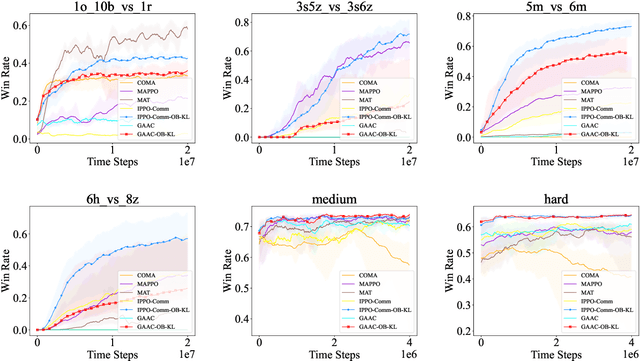

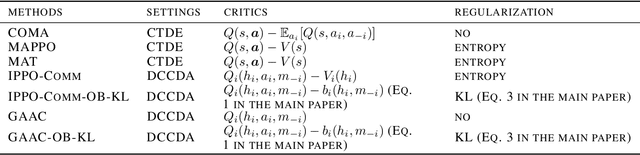

In decentralized multi-agent deep reinforcement learning (MADRL), communication can help agents to gain a better understanding of the environment to better coordinate their behaviors. Nevertheless, communication may involve uncertainty, which potentially introduces variance to the learning of decentralized agents. In this paper, we focus on a specific decentralized MADRL setting with communication and conduct a theoretical analysis to study the variance that is caused by communication in policy gradients. We propose modular techniques to reduce the variance in policy gradients during training. We adopt our modular techniques into two existing algorithms for decentralized MADRL with communication and evaluate them on multiple tasks in the StarCraft Multi-Agent Challenge and Traffic Junction domains. The results show that decentralized MADRL communication methods extended with our proposed techniques not only achieve high-performing agents but also reduce variance in policy gradients during training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge