Redirected Walking in Static and Dynamic Scenes Using Visibility Polygons


Jun 21, 2021

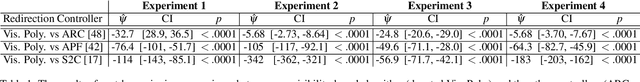

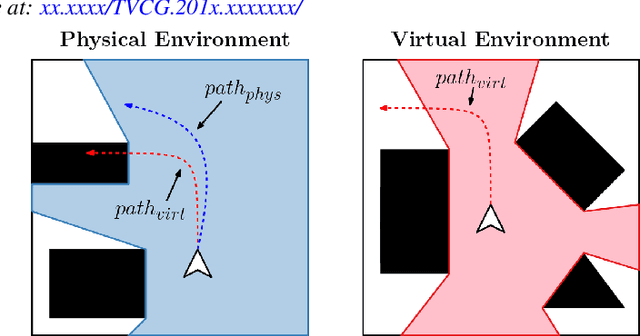

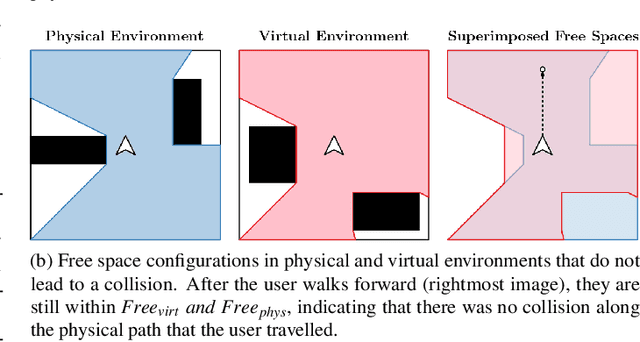

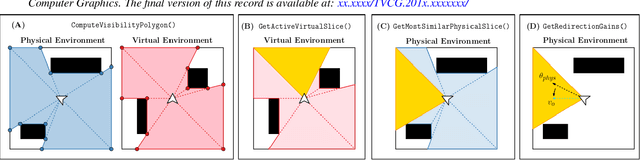

We present a new approach for redirected walking in static and dynamic scenes that uses techniques from robot motion planning to compute the redirection gains that steer the user along collision-free paths in the physical space. Our first contribution is a mathematical framework for redirected walking based on concepts from motion planning and configuration spaces. This framework highlights the geometric and perceptual constraints that make collision-free redirected walking difficult. We use our framework to propose an efficient solution to the redirection problem that uses visibility polygons to compute the free space in both the physical environment and the virtual environment. The visibility polygon provides a concise representation of the entire space that is visible, and therefore walkable, to the user from their position within an environment. Using this representation of walkable space, we apply redirected walking to steer the user to regions of the visibility polygon in the physical environment that closely match the region that the user occupies in the visibility polygon in the virtual environment. We show that our algorithm steers the user along paths that result in significantly fewer resets than existing state-of-the-art algorithms in both static and dynamic scenes.
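To make the notion of a visibility polygon concrete, here is a minimal sketch, assuming a 2D environment described by line segments (walls and obstacles). It approximates the visibility polygon by casting uniformly spaced rays from the user's position and keeping the nearest hit on each ray. This is an illustrative approximation, not the algorithm used in the paper; the names `ray_segment_hit`, `visibility_polygon`, `polygon_area`, `ROOM`, and `WALL` are all hypothetical.

```python
import math

def ray_segment_hit(origin, direction, a, b, eps=1e-9):
    """Return the distance t >= 0 along the ray to segment ab, or None on a miss."""
    ox, oy = origin
    dx, dy = direction
    ex, ey = b[0] - a[0], b[1] - a[1]          # segment direction
    denom = dx * ey - dy * ex                  # cross(direction, segment)
    if abs(denom) < eps:                       # ray is parallel to the segment
        return None
    ax, ay = a[0] - ox, a[1] - oy              # vector from origin to a
    t = (ax * ey - ay * ex) / denom            # distance along the ray
    u = (ax * dy - ay * dx) / denom            # position along the segment
    if t >= 0 and -eps <= u <= 1 + eps:
        return t
    return None

def visibility_polygon(origin, segments, n_rays=360):
    """Approximate the visibility polygon by uniform ray casting (assumed method)."""
    points = []
    for k in range(n_rays):
        angle = 2.0 * math.pi * k / n_rays
        direction = (math.cos(angle), math.sin(angle))
        hits = [t for a, b in segments
                if (t := ray_segment_hit(origin, direction, a, b)) is not None]
        if hits:                               # keep the nearest obstruction
            t = min(hits)
            points.append((origin[0] + t * direction[0],
                           origin[1] + t * direction[1]))
    return points

def polygon_area(points):
    """Shoelace formula for the area of a simple polygon."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

# Example data (assumed): a 4 x 4 room and one interior wall.
ROOM = [((0, 0), (4, 0)), ((4, 0), (4, 4)), ((4, 4), (0, 4)), ((0, 4), (0, 0))]
WALL = [((3, 1), (3, 3))]

if __name__ == "__main__":
    user = (2.0, 2.0)
    print(polygon_area(visibility_polygon(user, ROOM)))
    print(polygon_area(visibility_polygon(user, ROOM + WALL)))
```

With the user at the center of the empty room, the whole room is visible. Adding the wall hides roughly 3 square units behind it, which is the kind of reduction in walkable space that the visibility polygon is meant to capture. An exact method would sort rays by obstacle endpoints rather than sampling uniformly; the uniform sampling here is chosen only for brevity.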
