Recurrent Parameter Generators

Jul 15, 2021

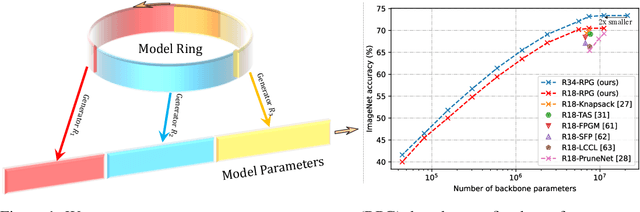

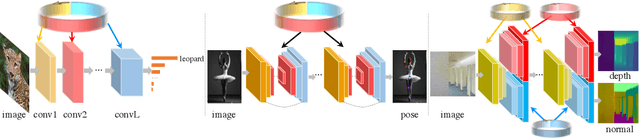

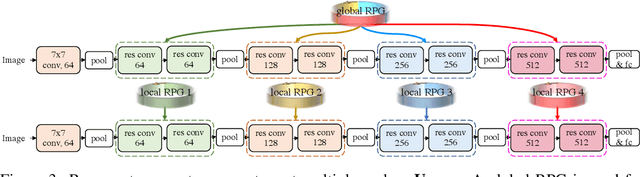

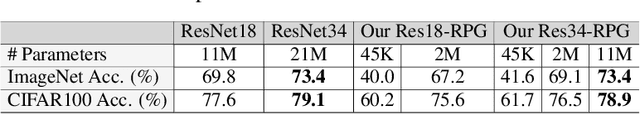

We present a generic method for building a deep network by recurrently reusing the same parameters across many different convolution layers. Specifically, for a given network, we create a recurrent parameter generator (RPG), from which the parameters of each convolution layer are generated. Although using recurrent models to build a deep convolutional neural network (CNN) is not entirely new, our method achieves a significant performance gain over existing work. We demonstrate how to build a one-layer neural network that achieves performance similar to traditional CNN models on various applications and datasets. The method allows us to build an arbitrarily complex neural network with any number of parameters. For example, we build a ResNet34 with model parameters reduced by more than $400$ times that still achieves $41.6\%$ ImageNet top-1 accuracy. Furthermore, we demonstrate that the RPG can be applied at different scales, such as layers, blocks, or even sub-networks. In particular, we use the RPG to build a ResNet18 network with a number of weights equivalent to one convolutional layer of a conventional ResNet, and show that this model achieves $67.2\%$ ImageNet top-1 accuracy. The proposed method can be viewed as an inverse approach to model compression: rather than removing unused parameters from a large model, it aims to squeeze more information into a small number of parameters. Extensive experimental results are provided to demonstrate the power of the proposed recurrent parameter generator.
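The idea of generating every layer's weights from one shared pool can be illustrated with a small sketch. The abstract does not describe the actual generation rule, so the cyclic read used here is only an assumption chosen for simplicity, and the names `SharedParameterBank`, `generate`, and `layer_shapes` are hypothetical, as are the layer shapes themselves. The sketch shows only the bookkeeping: how a pool the size of one convolution layer can supply weights for several layers, and how the parameter reduction ratio is computed.

```python
import random
from math import prod


class SharedParameterBank:
    """Hypothetical stand-in for a recurrent parameter generator.

    Assumption: layers read their weights cyclically from one shared pool.
    The real generation rule is not given in the abstract.
    """

    def __init__(self, size, seed=0):
        rng = random.Random(seed)
        # The only trainable values in this sketch live in this list.
        self.values = [rng.gauss(0.0, 0.02) for _ in range(size)]
        self.cursor = 0  # position where the next layer starts reading

    def generate(self, shape):
        """Return a flat weight list for a layer with the given shape."""
        count = prod(shape)
        size = len(self.values)
        # Wrap around the pool so a layer may be larger than the pool itself.
        weights = [self.values[(self.cursor + i) % size] for i in range(count)]
        self.cursor = (self.cursor + count) % size
        return weights


# Illustrative shapes: (out_channels, in_channels, kernel_h, kernel_w).
layer_shapes = [(64, 64, 3, 3), (128, 64, 3, 3), (128, 128, 3, 3)]

# The pool is sized like a single 64x64x3x3 convolution layer.
bank = SharedParameterBank(size=64 * 64 * 3 * 3)
layer_weights = [bank.generate(shape) for shape in layer_shapes]

conventional = sum(prod(shape) for shape in layer_shapes)  # weights without sharing
shared = len(bank.values)                                  # weights actually stored
reduction = conventional / shared

print(f"conventional={conventional}, shared={shared}, reduction={reduction:.1f}x")
```

In this toy setting the stored parameter count is fixed by the pool size rather than by the depth or width of the network, which is the property that lets the network grow without adding parameters.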
