Recurrent circuits as multi-path ensembles for modeling responses of early visual cortical neurons


Oct 02, 2021

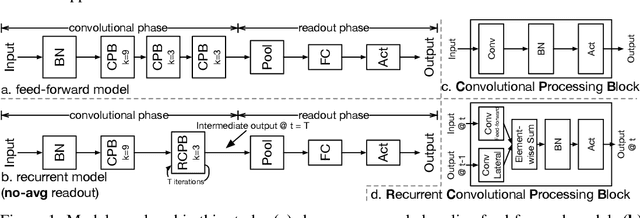

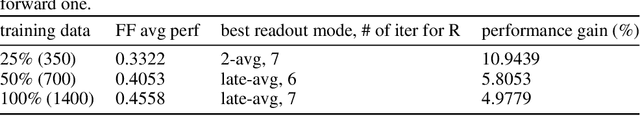

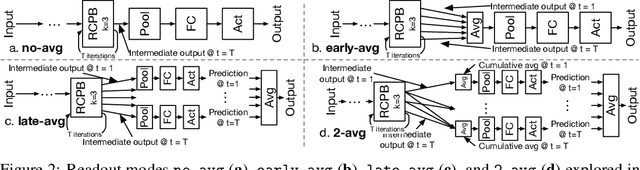

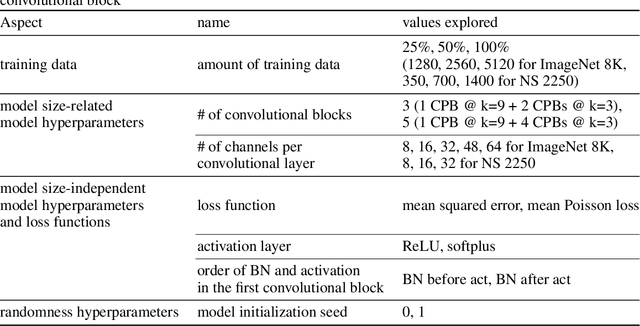

In this paper, we show that adding within-layer recurrent connections to feed-forward neural network models can improve neural response prediction in early visual areas by up to 11 percent across different data sets and over tens of thousands of model configurations. To understand why recurrent models perform better, we propose that recurrent computation can be conceptualized as an ensemble of multiple feed-forward pathways of different lengths with shared parameters. By reformulating a recurrent model as a multi-path model and analyzing it through its multi-path ensemble, we found that the recurrent model outperformed the corresponding feed-forward one because its compact, implicit multi-path ensemble can efficiently approximate the complex function underlying recurrent biological circuits. In addition, we found that the performance differences among recurrent models were highly correlated with the differences in their multi-path ensembles in terms of path length and path diversity; a balance of paths of different lengths in the ensemble was necessary for the model to achieve the best performance. Our studies shed light on the computational rationale for, and advantages of, recurrent circuits in neural modeling and in machine learning tasks in general.
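The multi-path view can be illustrated with a toy example. The sketch below is not the paper's model: it assumes a single, purely linear recurrent layer with hypothetical weight matrices `w_ff` (feed-forward) and `w_rec` (within-layer recurrent), and the function names are made up for illustration. Under those assumptions, unrolling the recurrence for `n_steps` iterations gives exactly the sum of `n_steps` feed-forward paths of increasing length that all reuse the same two matrices. With nonlinearities the equality no longer holds term by term, so this only conveys the path-counting idea.

```python
import numpy as np

def recurrent_output(x, w_ff, w_rec, n_steps):
    """Iterate h <- w_ff @ x + w_rec @ h for n_steps (>= 1), starting from h = 0."""
    h = np.zeros(w_ff.shape[0])
    for _ in range(n_steps):
        h = w_ff @ x + w_rec @ h
    return h

def multipath_output(x, w_ff, w_rec, n_steps):
    """Sum the feed-forward paths that pass through w_rec 0, 1, ..., n_steps - 1 times."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    path = w_ff @ x          # shortest path: feed-forward weights only
    total = path.copy()
    for _ in range(n_steps - 1):
        path = w_rec @ path  # extend the previous path by one recurrent hop
        total += path
    return total

# Toy sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
x = rng.normal(size=5)
w_ff = rng.normal(size=(4, 5))
w_rec = 0.1 * rng.normal(size=(4, 4))

# Both formulations compute the same vector in the linear case.
paths_match = np.allclose(
    recurrent_output(x, w_ff, w_rec, 4),
    multipath_output(x, w_ff, w_rec, 4),
)
```

In this toy setting, increasing `n_steps` adds longer paths to the ensemble without adding parameters, which is the sense in which the ensemble is compact and implicit.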
