Recomposition vs. Prediction: A Novel Anomaly Detection for Discrete Events Based On Autoencoder

Paper and Code

Dec 27, 2020

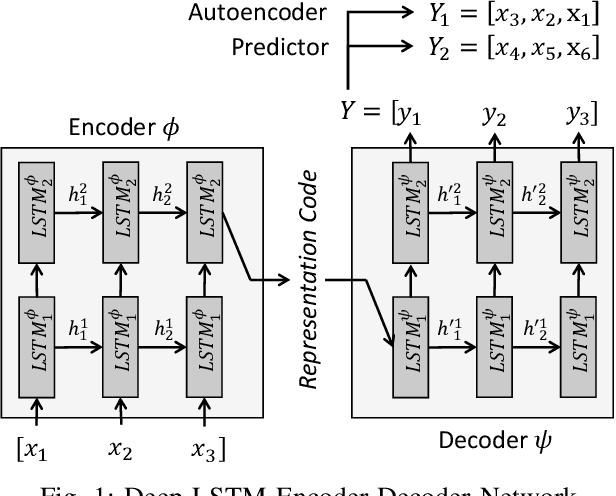

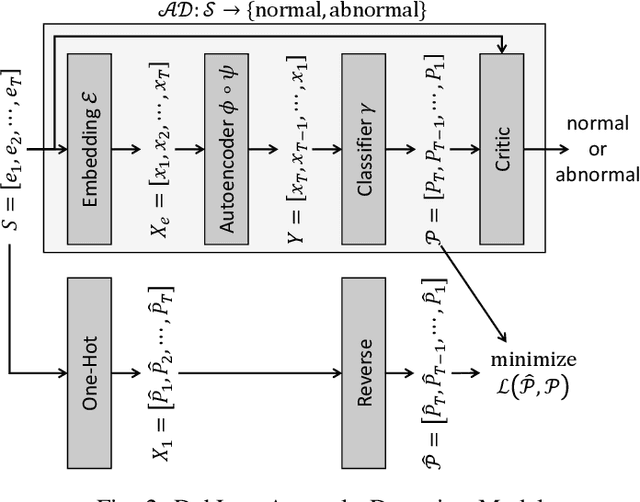

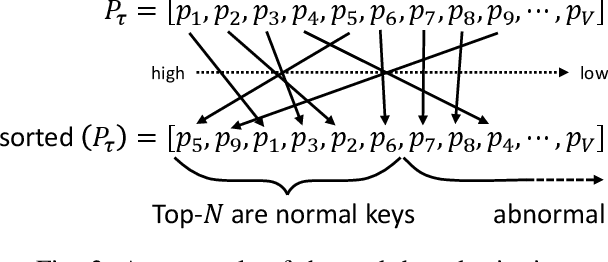

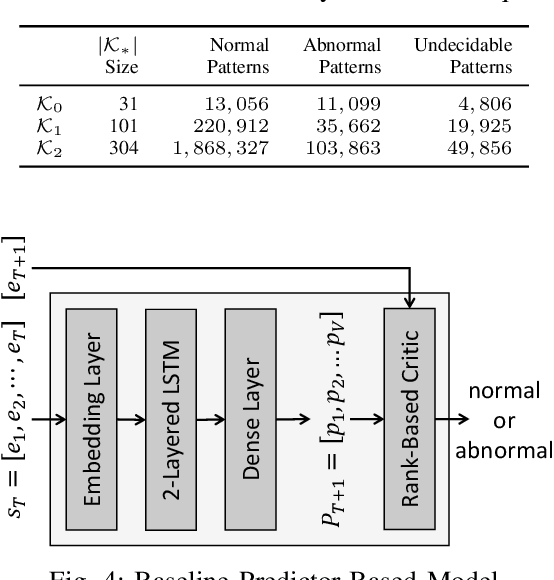

One of the most challenging problems in the field of intrusion detection is anomaly detection for discrete event logs. While most earlier work focused on applying unsupervised learning upon engineered features, most recent work has started to resolve this challenge by applying deep learning methodology to abstraction of discrete event entries. Inspired by natural language processing, LSTM-based anomaly detection models were proposed. They try to predict upcoming events, and raise an anomaly alert when a prediction fails to meet a certain criterion. However, such a predict-next-event methodology has a fundamental limitation: event predictions may not be able to fully exploit the distinctive characteristics of sequences. This limitation leads to high false positives (FPs) and high false negatives (FNs). It is also critical to examine the structure of sequences and the bi-directional causality among individual events. To this end, we propose a new methodology: Recomposing event sequences as anomaly detection. We propose DabLog, a Deep Autoencoder-Based anomaly detection method for discrete event Logs. The fundamental difference is that, rather than predicting upcoming events, our approach determines whether a sequence is normal or abnormal by analyzing (encoding) and reconstructing (decoding) the given sequence. Our evaluation results show that our new methodology can significantly reduce the numbers of FPs and FNs, hence achieving a higher $F_1$ score.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge