Recognizing Spatial Configurations of Objects with Graph Neural Networks


Apr 09, 2020

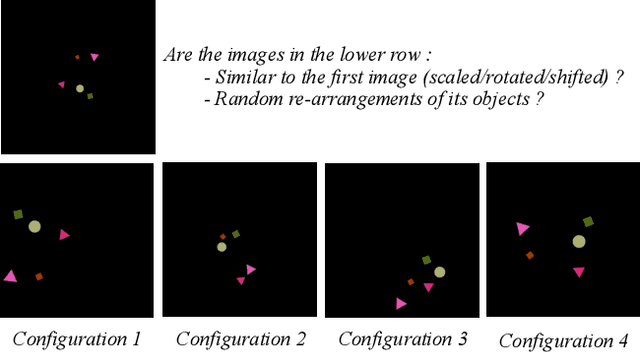

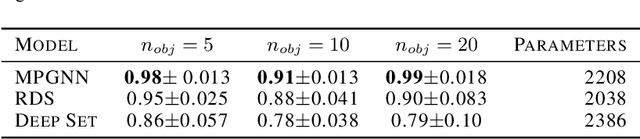

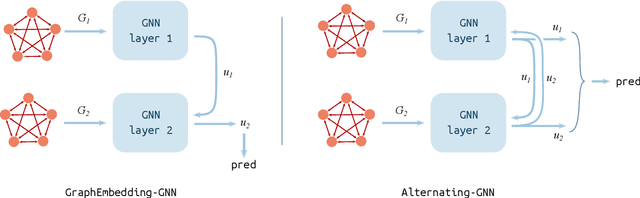

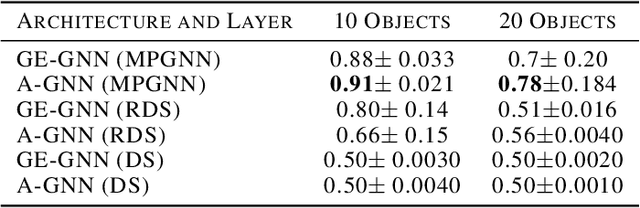

Deep learning algorithms can be seen as compositions of functions acting on learned representations encoded as tensor-structured data. In most applications, however, those representations are monolithic: for instance, a single vector encodes an entire image or sentence. In this paper, we build upon the recent successes of Graph Neural Networks (GNNs) to explore the use of graph-structured representations for learning spatial configurations. Motivated by the ability of humans to distinguish arrangements of shapes, we introduce two novel geometrical reasoning tasks and provide datasets for both. We introduce novel GNN layers and architectures to solve these tasks, and we show that graph-structured representations are necessary for good performance.
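To make the contrast with a monolithic vector concrete, here is a minimal sketch of one generic message-passing step over a graph whose nodes are objects. It is not the paper's novel layers, which the abstract does not specify. The function name `message_passing_step`, the sum aggregation, the ReLU update, and the fixed weight matrices are all illustrative assumptions.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def message_passing_step(
    nodes: List[Vector],
    edges: List[Tuple[int, int]],
    w_self: Matrix,
    w_nbr: Matrix,
) -> List[Vector]:
    """One generic GNN step (hypothetical, not the paper's layer):
    h_i' = relu(w_self @ h_i + w_nbr @ sum of h_j over edges j -> i).
    """
    dim = len(nodes[0])
    # Sum the features of incoming neighbors for every node.
    agg = [[0.0] * dim for _ in nodes]
    for src, dst in edges:
        for k in range(dim):
            agg[dst][k] += nodes[src][k]
    # Combine each node's own features with its aggregated messages.
    updated = []
    for h, a in zip(nodes, agg):
        own = matvec(w_self, h)
        nbr = matvec(w_nbr, a)
        updated.append([max(0.0, x + y) for x, y in zip(own, nbr)])
    return updated


# Three objects described by their 2D positions (assumed node features),
# connected by directed edges between every ordered pair.
positions = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
edges = [(i, j) for i in range(3) for j in range(3) if i != j]
w_self = [[1.0, 0.0], [0.0, 1.0]]  # illustrative weights, not learned
w_nbr = [[0.5, 0.0], [0.0, 0.5]]

out = message_passing_step(positions, edges, w_self, w_nbr)
print(out)
```

Each object keeps its own feature vector and only exchanges information along edges, so the representation stays structured per object instead of being collapsed into a single vector for the whole scene.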
