Recognizing Activities and Spatial Context Using Wearable Sensors

Jun 27, 2012

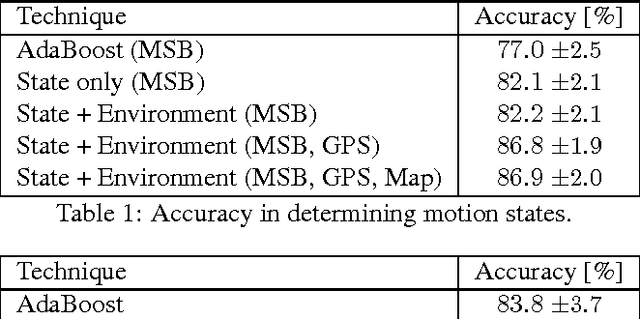

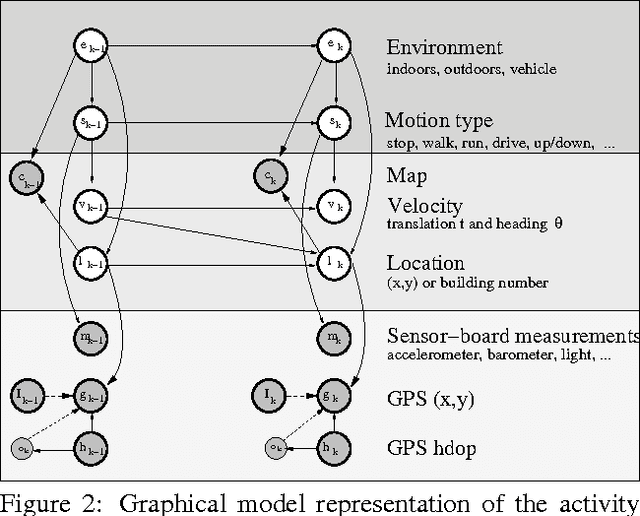

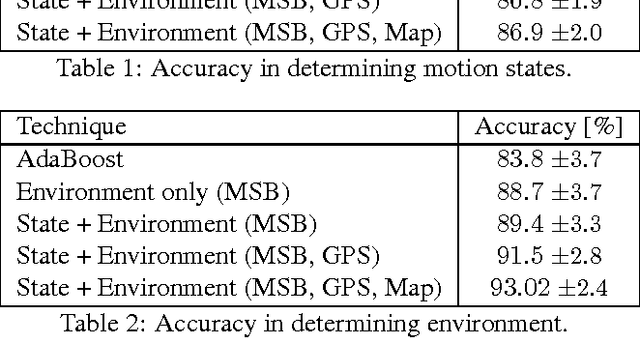

We introduce a new dynamic model that recognizes both the activities an individual is performing and where that individual is located. The model is novel in that it uses a dynamic graphical model to jointly estimate activity and spatial context over time from asynchronous observations: GPS measurements and measurements from a small mountable sensor board. Joint inference is desirable because it can improve the model's accuracy. A key goal in designing the overall system, however, is to make accurate inference decisions while minimizing the amount of hardware an individual must wear. This minimization leads to greater comfort and flexibility, lower power requirements and therefore longer battery life, and reduced cost. We show results indicating that our joint measurement model outperforms models that use measurements from either the sensor board or GPS alone, under two probabilistic inference procedures: particle filtering and pruned exact inference.
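To make joint estimation from asynchronous observations concrete, here is a minimal particle filtering sketch over a joint (activity, place) state. It is not the model from the paper. The state labels, the `STAY_PROB` transition, the likelihood values, and the function names are all hypothetical and chosen only for illustration. Each time step may carry a sensor-board reading, a GPS reading, both, or neither, and a missing reading simply contributes no evidence.

```python
import random

# Hypothetical label sets; the paper's actual activities and places are not given here.
ACTIVITIES = ["walking", "sitting"]
PLACES = ["home", "office"]
STATES = [(a, p) for a in ACTIVITIES for p in PLACES]

STAY_PROB = 0.9  # assumed probability that the joint state persists for one step


def transition(state, rng):
    """Sample the next joint state: stay put, or jump to a uniformly random state."""
    if rng.random() < STAY_PROB:
        return state
    return rng.choice(STATES)


def likelihood(state, obs):
    """Weight a particle by whichever readings arrived at this step.

    `obs` is a dict with optional keys "board" (an activity label) and
    "gps" (a place label). The numeric values are assumed, not fitted.
    """
    activity, place = state
    weight = 1.0
    if "board" in obs:
        weight *= 0.8 if obs["board"] == activity else 0.2
    if "gps" in obs:
        weight *= 0.9 if obs["gps"] == place else 0.1
    return weight


def particle_filter(observations, n_particles=500, seed=0):
    """Return the most common joint state among the particles after each step."""
    rng = random.Random(seed)
    particles = [rng.choice(STATES) for _ in range(n_particles)]
    estimates = []
    for obs in observations:
        # Predict: move every particle through the transition model.
        particles = [transition(s, rng) for s in particles]
        # Update: weight by the available evidence, then resample.
        weights = [likelihood(s, obs) for s in particles]
        particles = rng.choices(particles, weights=weights, k=n_particles)
        counts = {}
        for s in particles:
            counts[s] = counts.get(s, 0) + 1
        estimates.append(max(counts, key=counts.get))
    return estimates


if __name__ == "__main__":
    stream = [{"board": "walking"}, {"gps": "office"}, {}, {"board": "walking", "gps": "office"}]
    for step, estimate in enumerate(particle_filter(stream)):
        print(step, estimate)
```

Because every particle holds both variables at once, evidence about one variable reweights hypotheses about the other whenever the transition or observation model couples them. In this toy version the two are coupled only through the shared transition, so a richer model would be needed to show the accuracy gain the abstract reports.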
