RealPatch: A Statistical Matching Framework for Model Patching with Real Samples

Paper and Code

Aug 03, 2022

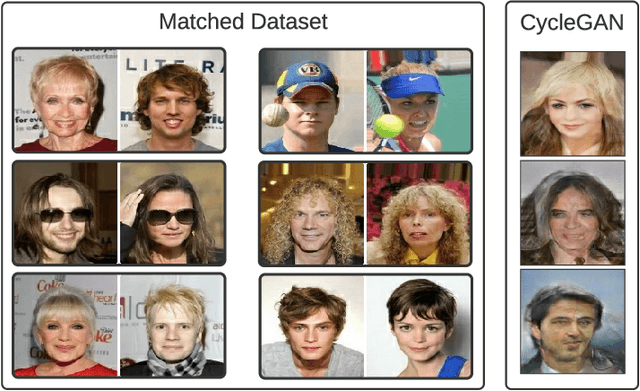

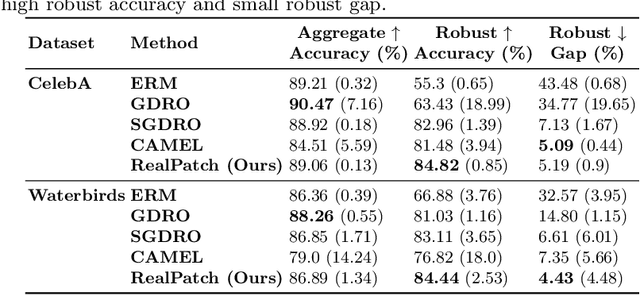

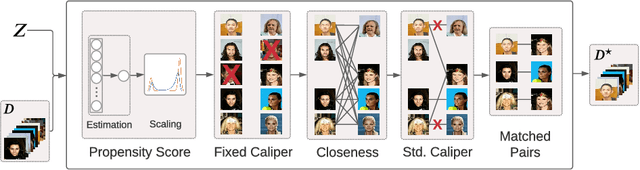

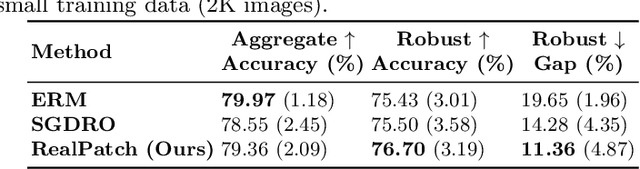

Machine learning classifiers are typically trained to minimise the average error across a dataset. Unfortunately, in practice, this process often exploits spurious correlations caused by subgroup imbalance within the training data, resulting in high average performance but highly variable performance across subgroups. Recent work addresses this problem through model patching with CAMEL. That approach uses generative adversarial networks to perform intra-class inter-subgroup data augmentations, which requires (a) training a number of computationally expensive models and (b) synthetic outputs of sufficient quality for the given domain. In this work, we propose RealPatch, a framework for simpler, faster, and more data-efficient data augmentation based on statistical matching. Our framework performs model patching by augmenting a dataset with real samples, avoiding the need to train generative models for the target task. We demonstrate the effectiveness of RealPatch on three benchmark datasets (CelebA, Waterbirds, and a subset of iWildCam), showing improvements in worst-case subgroup performance and in the subgroup performance gap in binary classification. Furthermore, we conduct experiments on the imSitu dataset with 211 classes, a setting where generative model-based patching such as CAMEL is impractical. We show that RealPatch can successfully eliminate dataset leakage while reducing model leakage and maintaining high utility. The code for RealPatch can be found at https://github.com/wearepal/RealPatch.
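
To make the idea of augmenting with real samples through matching more concrete, here is a minimal sketch of intra-class, inter-subgroup matching. It is not taken from the RealPatch repository, and the abstract does not specify the matching procedure, so everything here is an assumption for illustration: the function name `match_across_subgroups`, the use of plain Euclidean nearest-neighbour matching on feature vectors, the binary subgroup encoding (0 and 1), and the `max_distance` threshold used to discard poor matches.

```python
import numpy as np


def match_across_subgroups(features, labels, subgroups, max_distance=np.inf):
    """Pair each subgroup-0 sample with its nearest same-class subgroup-1 sample.

    Hypothetical helper for illustration only. Matching is done with
    replacement, so one subgroup-1 sample may be matched more than once.
    Returns a list of (index_in_subgroup_0, index_in_subgroup_1) tuples.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    subgroups = np.asarray(subgroups)

    pairs = []
    for cls in np.unique(labels):
        # Restrict matching to samples that share the same class label.
        src = np.flatnonzero((labels == cls) & (subgroups == 0))
        tgt = np.flatnonzero((labels == cls) & (subgroups == 1))
        if len(src) == 0 or len(tgt) == 0:
            continue  # nothing to match within this class

        # Pairwise Euclidean distances, shape (len(src), len(tgt)).
        diffs = features[src][:, None, :] - features[tgt][None, :, :]
        dists = np.linalg.norm(diffs, axis=-1)

        # Nearest subgroup-1 neighbour for every subgroup-0 sample.
        nearest = dists.argmin(axis=1)
        for row, col in enumerate(nearest):
            # Assumed quality control: drop pairs that are too far apart.
            if dists[row, col] <= max_distance:
                pairs.append((int(src[row]), int(tgt[col])))
    return pairs


# Toy data: five samples, two classes, two subgroups.
toy_features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.2, 5.0], [10.0, 10.0]]
toy_labels = [0, 0, 1, 1, 1]
toy_subgroups = [0, 1, 0, 1, 1]

all_pairs = match_across_subgroups(toy_features, toy_labels, toy_subgroups)
close_pairs = match_across_subgroups(
    toy_features, toy_labels, toy_subgroups, max_distance=0.15
)
```

Under these assumptions, the matched indices would identify real samples that differ in subgroup but share a class and lie close together in feature space; such pairs could then serve as the augmentation set in place of GAN-generated images.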