Real-time 3-D Mapping with Estimating Acoustic Materials


Sep 16, 2019

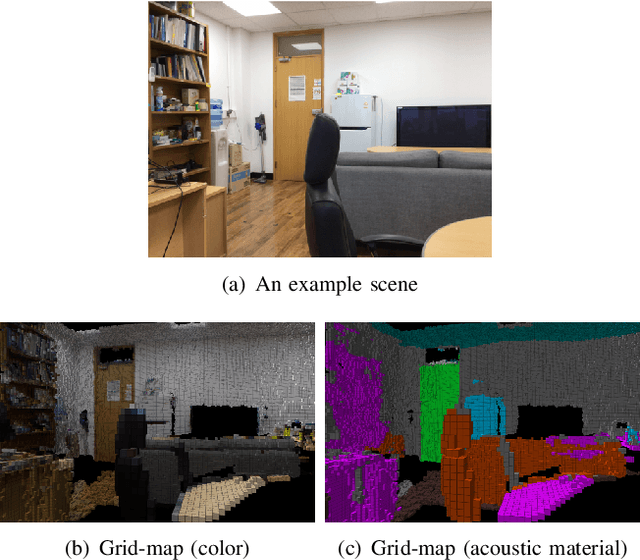

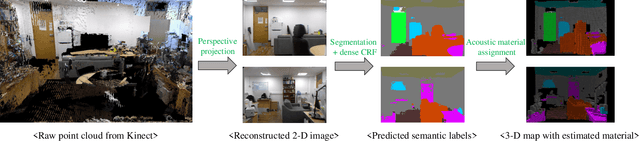

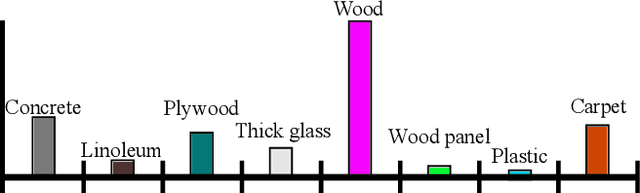

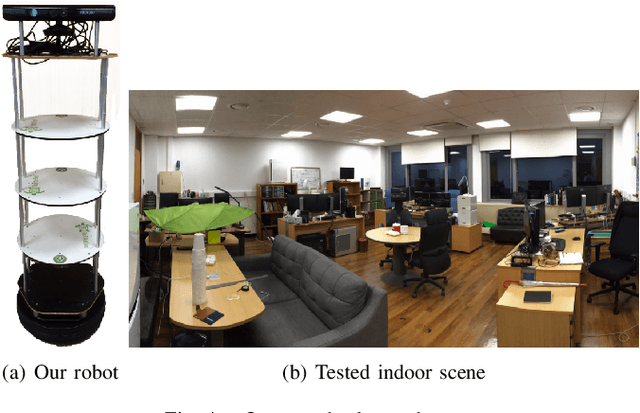

This paper proposes a real-time system that integrates acoustic material estimation from visual appearance with on-the-fly 3-D mapping. The proposed method estimates the acoustic materials of the surroundings in indoor scenes and incorporates them into a 3-D occupancy map as a robot moves around the environment. To estimate the acoustic material from visual cues, we apply a state-of-the-art semantic segmentation CNN, based on the assumption that visual appearance and acoustic materials are strongly associated. Furthermore, we introduce an update policy to handle the material estimates during the online mapping process. As a result, our environment map with acoustic materials can be used for sound-related robotics applications, such as sound source localization that takes into account various acoustic propagation effects (e.g., reflection).
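The abstract does not spell out the update policy, so the following is only a minimal sketch of one plausible way to fuse per-frame material labels into a voxel map during online mapping. It assumes a simple majority vote per voxel; the names `MaterialMap`, `voxel_key`, and `VOXEL_SIZE`, as well as the material labels, are hypothetical and not taken from the paper.

```python
from collections import Counter, defaultdict

VOXEL_SIZE = 0.1  # metres; hypothetical map resolution, not from the paper


def voxel_key(point, voxel_size=VOXEL_SIZE):
    """Map a 3-D point (x, y, z) in metres to an integer voxel index."""
    return tuple(int(c // voxel_size) for c in point)


class MaterialMap:
    """Toy voxel map that keeps a vote count per material label.

    Assumption: majority voting stands in for the paper's update policy.
    """

    def __init__(self):
        # voxel index -> Counter of material labels observed in that voxel
        self.votes = defaultdict(Counter)

    def update(self, point, material):
        """Record one observation of `material` at the voxel containing `point`."""
        self.votes[voxel_key(point)][material] += 1

    def material_at(self, point):
        """Return the most frequently observed label, or None if unobserved."""
        counts = self.votes.get(voxel_key(point))
        if not counts:
            return None
        return counts.most_common(1)[0][0]


# Three observations fall into the same voxel; two agree on "carpet".
material_map = MaterialMap()
material_map.update((0.25, 0.25, 0.25), "carpet")
material_map.update((0.26, 0.21, 0.29), "carpet")
material_map.update((0.27, 0.22, 0.28), "glass")
fused_label = material_map.material_at((0.25, 0.25, 0.25))
```

Under this voting assumption, a single mislabeled frame (here the "glass" observation) does not overwrite a voxel that has been labeled consistently in earlier frames, which is the kind of robustness an online update policy would need.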
