Real-centric Consistency Learning for Deepfake Detection


May 15, 2022

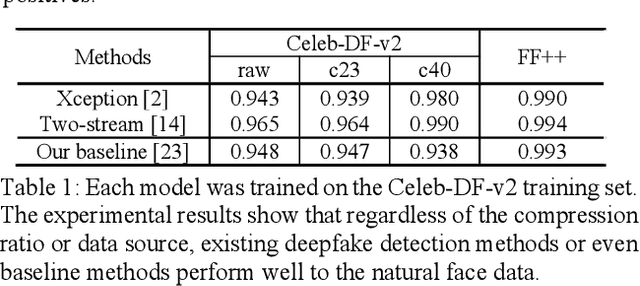

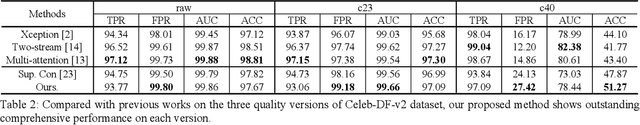

Most previous deepfake detection research has focused on describing and discriminating artifacts in human-perceptible ways. This biases the learned networks toward ignoring some critical intra-class invariant features and weakens their robustness to internet interference. In essence, the goal of deepfake detection is to represent natural faces and fake faces discriminatively in the representation space. This raises a question: can we optimize feature extraction in the representation space by constraining intra-class consistency and inter-class inconsistency, pulling intra-class representations together and pushing inter-class representations apart? Inspired by contrastive representation learning, we therefore tackle deepfake detection by learning invariant representations of both classes, and we propose a novel real-centric consistency learning method. We constrain the representation at both the sample level and the feature level. At the sample level, we take the deepfake synthesis procedure into consideration and propose a novel forgery-semantics-based pairing strategy to mine latent generation-related features. At the feature level, based on the centers of natural faces in the representation space, we design a hard positive mining and synthesizing method to simulate potential marginal features. In addition, we design a hard negative fusion method that, together with a supervised contrastive margin loss we developed, improves the discrimination of negative marginal features. Extensive experiments demonstrate the effectiveness and robustness of the proposed method.
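To make the real-centric idea concrete, the following is a minimal sketch of a generic center-based margin objective. It is not the supervised contrastive margin loss from the paper, whose exact form is not given in the abstract. The function names, the use of cosine distance, and the `margin` value are all illustrative assumptions.

```python
import math


def l2_normalize(v):
    """Scale a feature vector to unit length (returned unchanged if zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine_distance(a, b):
    """Cosine distance between two unit-length vectors."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


def real_center(real_feats):
    """Normalized mean of the (already normalized) real-face features."""
    dim = len(real_feats[0])
    mean = [sum(f[i] for f in real_feats) / len(real_feats) for i in range(dim)]
    return l2_normalize(mean)


def real_centric_margin_loss(real_feats, fake_feats, margin=0.5):
    """Hypothetical objective: pull real features toward their center and
    push fake features at least `margin` away from it (hinge penalty)."""
    reals = [l2_normalize(f) for f in real_feats]
    fakes = [l2_normalize(f) for f in fake_feats]
    center = real_center(reals)
    # Intra-class consistency: real features should sit close to the center.
    pull = sum(cosine_distance(f, center) for f in reals) / len(reals)
    # Inter-class inconsistency: fakes are penalized only inside the margin.
    push = sum(max(0.0, margin - cosine_distance(f, center)) for f in fakes) / len(fakes)
    return pull + push
```

In this sketch, a fake feature that already lies farther than `margin` from the real center contributes nothing to the loss, so the penalty concentrates on fakes near the boundary of the real cluster.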
