RADIUM: Predicting and Repairing End-to-End Robot Failures using Gradient-Accelerated Sampling

Paper and Code

Apr 04, 2024

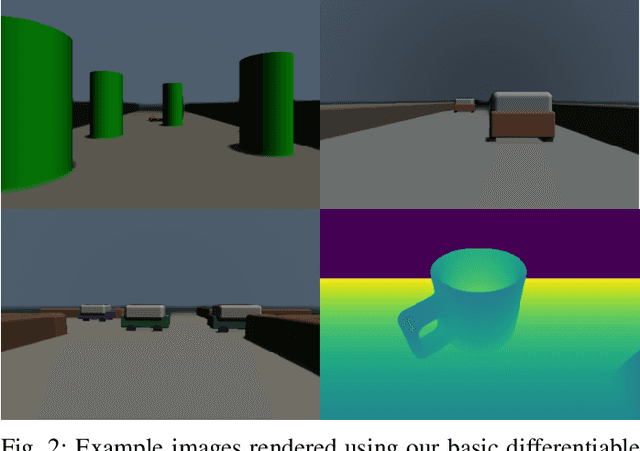

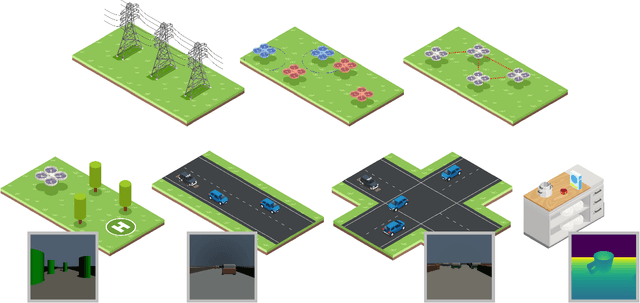

Before autonomous systems can be deployed in safety-critical applications, we must be able to understand and verify the safety of these systems. For cases where the risk or cost of real-world testing is prohibitive, we propose a simulation-based framework for a) predicting ways in which an autonomous system is likely to fail and b) automatically adjusting the system's design and control policy to preemptively mitigate those failures. Existing tools for failure prediction struggle to search over high-dimensional environmental parameters, cannot efficiently handle end-to-end testing for systems with vision in the loop, and provide little guidance on how to mitigate failures once they are discovered. We approach this problem through the lens of approximate Bayesian inference and use differentiable simulation and rendering for efficient failure case prediction and repair. For cases where a differentiable simulator is not available, we provide a gradient-free version of our algorithm, and we include a theoretical and empirical evaluation of the trade-offs between gradient-based and gradient-free methods. We apply our approach on a range of robotics and control problems, including optimizing search patterns for robot swarms, UAV formation control, and robust network control. Compared to optimization-based falsification methods, our method predicts a more diverse, representative set of failure modes, and we find that our use of differentiable simulation yields solutions that have up to 10x lower cost and requires up to 2x fewer iterations to converge relative to gradient-free techniques. In hardware experiments, we find that repairing control policies using our method leads to a 5x robustness improvement. Accompanying code and video can be found at https://mit-realm.github.io/radium/

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge