Radial basis function kernel optimization for Support Vector Machine classifiers


Jul 16, 2020

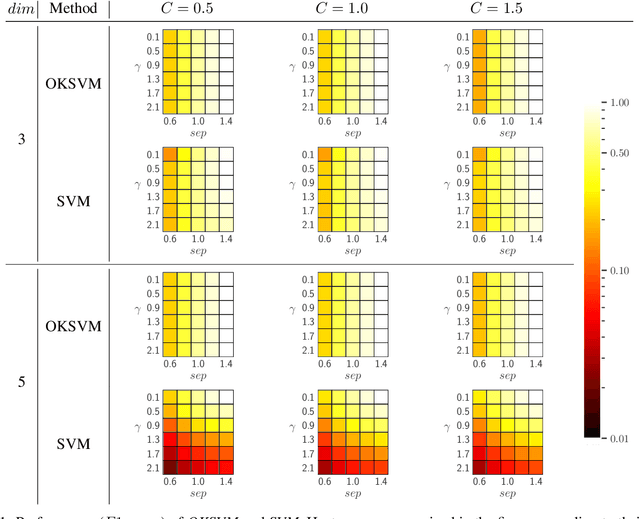

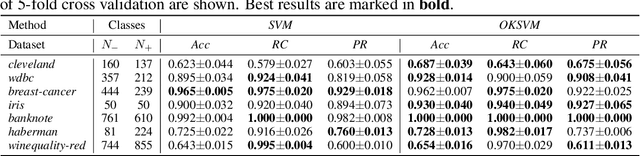

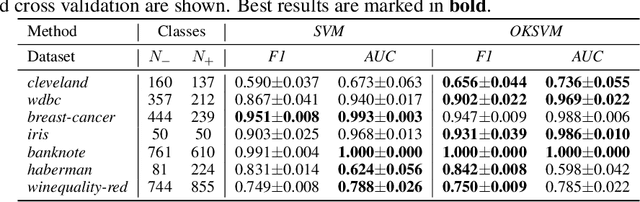

Support Vector Machines (SVMs) remain among the most popular and accurate classifiers. The Radial Basis Function (RBF) kernel has been used in SVMs to separate classes with considerable success. However, the resulting classifier depends intrinsically on the initial value chosen for the kernel hyperparameter. In this work, we propose OKSVM, an algorithm that automatically learns the RBF kernel hyperparameter and adjusts the SVM weights simultaneously. The proposed optimization technique is based on gradient descent. We compare our approach against the classical SVM on classification tasks using synthetic and real data. Experimental results show that OKSVM outperforms the classical SVM irrespective of the initial value of the RBF hyperparameter.
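To make the idea of learning the kernel hyperparameter together with the classifier weights more concrete, here is a minimal NumPy sketch. It is not the OKSVM algorithm from the paper, whose objective and update rules are not given in this abstract. It assumes a kernel-expansion classifier f(x) = Σ_j a_j K(x, x_j) + b with K(x, x') = exp(-gamma · ||x - x'||²), a squared hinge loss with a small regularizer, and plain gradient descent on a, b, and log(gamma). The names `fit_joint`, `predict`, `lam`, and `lr_gamma`, as well as the toy data, are hypothetical and chosen only for illustration.

```python
import numpy as np

def sq_dists(A, B):
    """Squared Euclidean distances between rows of A and rows of B."""
    d = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.maximum(d, 0.0)  # clip tiny negatives caused by round-off

def fit_joint(X, y, gamma0=1.0, lam=1e-3, steps=300, lr_gamma=0.01):
    """Jointly fit expansion weights (a, b) and the RBF hyperparameter gamma.

    Assumed objective (not taken from the paper): mean squared hinge loss
    plus lam * a^T K a, with K_ij = exp(-gamma * ||x_i - x_j||^2).
    """
    n = X.shape[0]
    D = sq_dists(X, X)
    a, b = np.zeros(n), 0.0
    log_gamma = np.log(gamma0)   # optimize log(gamma) so gamma stays positive
    lr = 0.5 / n                 # conservative step size for a and b
    for _ in range(steps):
        gamma = np.exp(log_gamma)
        K = np.exp(-gamma * D)
        f = K @ a + b
        slack = np.maximum(0.0, 1.0 - y * f)
        g_f = -2.0 * slack * y / n           # d(loss)/d(f)
        dK_a = (-D * K) @ a                  # (dK/dgamma) @ a
        grad_a = K @ g_f + 2.0 * lam * (K @ a)
        grad_b = g_f.sum()
        grad_gamma = g_f @ dK_a + lam * (a @ dK_a)
        a = a - lr * grad_a
        b = b - lr * grad_b
        log_gamma = log_gamma - lr_gamma * gamma * grad_gamma  # chain rule
    return a, b, float(np.exp(log_gamma))

def predict(X_train, a, b, gamma, X_new):
    """Sign of the kernel expansion evaluated at the rows of X_new."""
    return np.sign(np.exp(-gamma * sq_dists(X_new, X_train)) @ a + b)

# Toy data (an assumption): two well-separated Gaussian blobs.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 0.5, (20, 2)), rng.normal(-2.0, 0.5, (20, 2))])
y = np.concatenate([np.ones(20), -np.ones(20)])

a, b, gamma = fit_joint(X, y, gamma0=1.0)
train_acc = float((predict(X, a, b, gamma, X) == y).mean())
```

Optimizing log(gamma) rather than gamma itself is a design choice of this sketch: it keeps the hyperparameter positive without a projection step. Calling `fit_joint` with different `gamma0` values is one way to probe how sensitive such a joint scheme is to its starting point.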
