RAB: Provable Robustness Against Backdoor Attacks


Mar 19, 2020

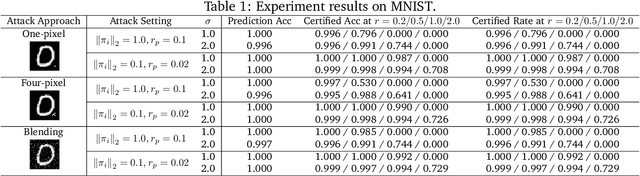

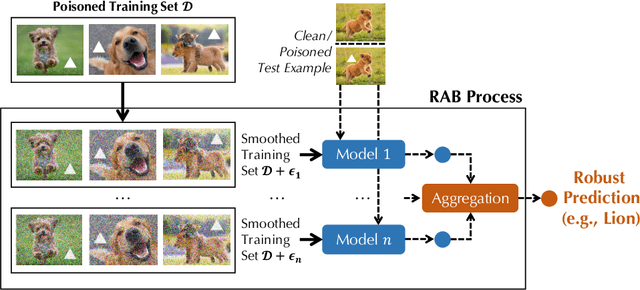

Recent studies have shown that deep neural networks (DNNs) are vulnerable to various attacks, including evasion attacks and poisoning attacks. On the defense side, there has been intense interest in provable robustness against evasion attacks. In this paper, we focus on improving model robustness against more diverse threat models. Specifically, we provide the first unified framework that uses a smoothing functional to certify model robustness against general adversarial attacks. In particular, we propose RAB, the first robust training process to certify against backdoor attacks. We theoretically prove a robustness bound for machine learning models based on the RAB training process, analyze the tightness of this bound, and propose different smoothing noise distributions, such as Gaussian and uniform distributions. Moreover, we evaluate the certified robustness of a family of "smoothed" DNNs that are trained in a differentially private fashion. In addition, we theoretically show that for simpler models such as K-nearest neighbor (KNN) models, it is possible to train the robust smoothed models efficiently. For K=1, we propose an exact algorithm to smooth the training process, eliminating the need to sample from a noise distribution. Empirically, we conduct comprehensive experiments for different machine learning models such as DNNs, differentially private DNNs, and KNN models on the MNIST, CIFAR-10, and ImageNet datasets to provide the first benchmark for certified robustness against backdoor attacks. In particular, we also evaluate KNN models on the Spambase tabular dataset to demonstrate its advantages. Both the theoretical analysis of certified model robustness against arbitrary backdoors and the comprehensive benchmark on diverse ML models and datasets shed light on further robust learning strategies against training-time or even general adversarial attacks on ML models.
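To make the idea of smoothing a training process more concrete, the following is a minimal sketch and not the RAB algorithm itself. It assumes that smoothing means retraining on Gaussian-perturbed copies of the training set and taking a majority vote; the function names `one_nn_predict` and `smoothed_predict`, the parameters `sigma` and `n_samples`, and the choice of a 1-nearest-neighbor base model are all illustrative assumptions. It computes no certificate and uses sampling, whereas the abstract describes an exact, sampling-free algorithm for K=1.

```python
import numpy as np

def one_nn_predict(train_x, train_y, x):
    """Plain 1-nearest-neighbor prediction under Euclidean distance."""
    dists = np.linalg.norm(train_x - x, axis=1)
    return train_y[np.argmin(dists)]

def smoothed_predict(train_x, train_y, x, sigma=0.5, n_samples=200,
                     n_classes=2, seed=0):
    """Monte Carlo estimate of a smoothed 1-NN prediction.

    Assumption (not from the paper): each round perturbs the training
    features with i.i.d. Gaussian noise, "retrains" 1-NN on the noisy
    copy, and casts one vote for the predicted class.
    """
    rng = np.random.default_rng(seed)
    votes = np.zeros(n_classes, dtype=int)
    for _ in range(n_samples):
        noisy_train = train_x + rng.normal(0.0, sigma, size=train_x.shape)
        votes[one_nn_predict(noisy_train, train_y, x)] += 1
    # Return the majority class and the empirical vote frequencies.
    return int(np.argmax(votes)), votes / n_samples

# Toy data: two well-separated clusters (hypothetical, for illustration only).
train_x = np.array([[0, 0], [1, 0], [0, 1],
                    [10, 10], [11, 10], [10, 11]], dtype=float)
train_y = np.array([0, 0, 0, 1, 1, 1])

label, freqs = smoothed_predict(train_x, train_y, np.array([0.5, 0.5]))
```

In this sketch, the vote frequencies play the role that class probabilities play in smoothing-based certification: a larger gap between the top two frequencies suggests a prediction that is more stable under perturbations of the training data. Turning that gap into a provable bound requires the analysis in the paper.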
