R-TOD: Real-Time Object Detector with Minimized End-to-End Delay for Autonomous Driving


Oct 23, 2020

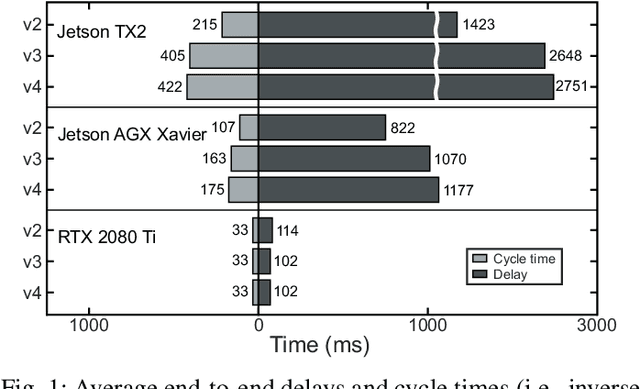

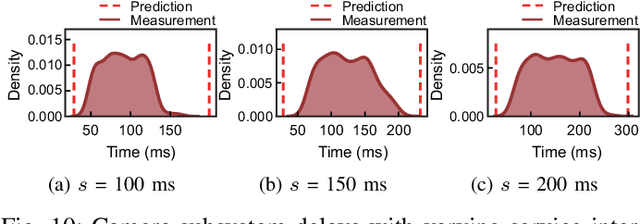

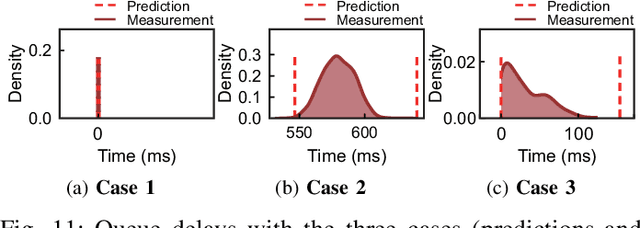

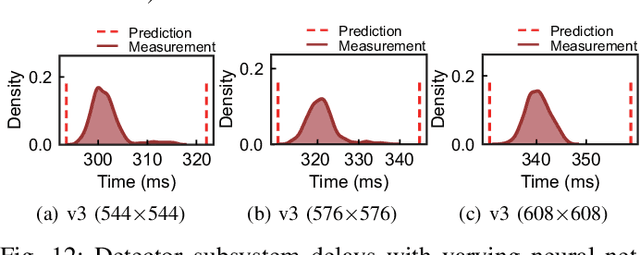

To realize safe autonomous driving, the end-to-end delays of real-time object detection systems should be thoroughly analyzed and minimized. However, despite the recent development of neural networks with minimized inference delays, surprisingly little attention has been paid to their end-to-end delays, measured from an object's appearance until its detection is reported. With this motivation, this paper aims to provide a more comprehensive understanding of the end-to-end delay, through which precise best- and worst-case delay predictions are formulated and three optimization methods are implemented: (i) on-demand capture, (ii) zero-slack pipeline, and (iii) contention-free pipeline. Our experimental results show a 76% reduction in the end-to-end delay of Darknet YOLO (You Only Look Once) v3 (from 1070 ms to 261 ms), demonstrating the great potential of exploiting end-to-end delay analysis for autonomous driving. Furthermore, because we modify only the system architecture and not the neural network architecture itself, our approach incurs no penalty on detection accuracy.
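As a quick sanity check on the headline figure, the reported reduction can be recomputed from the two delays quoted in the abstract. The snippet below is only an illustrative sketch; the helper name `relative_reduction` and the variable names are assumptions made here and do not come from the paper or its code.

```python
def relative_reduction(before_ms: float, after_ms: float) -> float:
    """Return the fraction by which a delay shrinks from before_ms to after_ms."""
    if before_ms <= 0:
        raise ValueError("before_ms must be positive")
    return (before_ms - after_ms) / before_ms


# Delays quoted in the abstract for Darknet YOLOv3 (milliseconds).
baseline_ms = 1070.0
optimized_ms = 261.0

reduction = relative_reduction(baseline_ms, optimized_ms)
speedup = baseline_ms / optimized_ms

print(f"reduction: {reduction:.1%}")  # about 75.6%, which rounds to the reported 76%
print(f"speedup:   {speedup:.1f}x")   # about 4.1x
```

Read this way, the optimized pipeline reports a detection roughly four times sooner than the baseline, with both figures taken at the single pair of delays the abstract quotes.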
