Query-Efficient GAN Based Black-Box Attack Against Sequence Based Machine and Deep Learning Classifiers

Sep 22, 2018

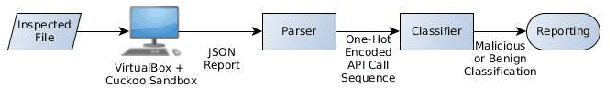

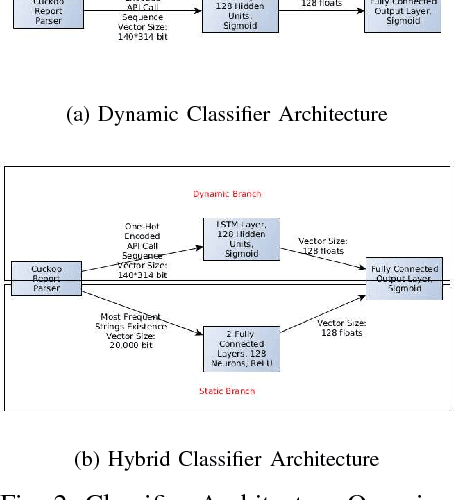

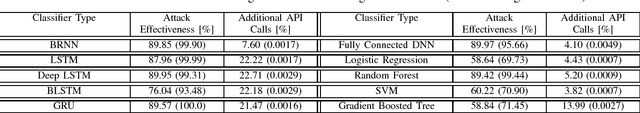

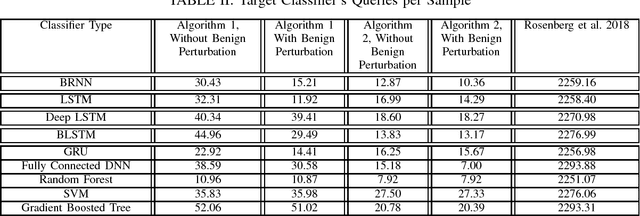

In this paper, we present a generic black-box attack, demonstrated against machine learning malware classifiers based on API calls. We generate adversarial examples that combine sequences (API call sequences) with other features (e.g., printable strings) and are misclassified by the classifier without affecting the malware's functionality. Our attack minimizes the number of target classifier queries and requires access only to the predicted label of the attacked model (without the confidence level). We evaluate the attack's effectiveness against a range of classifiers, including RNN variants, DNN, SVM, and GBDT. We show that the attack requires fewer queries and less knowledge about the attacked model's architecture than other existing black-box attacks, making it well suited to attacking cloud-based models at minimal cost. Finally, we discuss the robustness of this attack to existing defense mechanisms.
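
To make the threat model above concrete, the following is a minimal sketch of a label-only, query-counted setting. It is not the paper's GAN-based method: random insertion of benign API calls is swapped in purely for illustration, and `LabelOnlyOracle`, `toy_classifier`, `insert_only_attack`, and the API-call names used as data are all hypothetical. The sketch only adds calls and never removes or reorders the original ones, which is one simple way to model "without affecting the malware functionality".

```python
import random
from typing import Callable, List, Optional, Sequence


class LabelOnlyOracle:
    """Exposes only the predicted label of a classifier and counts queries."""

    def __init__(self, predict_label: Callable[[Sequence[str]], int], budget: int):
        self._predict = predict_label
        self.budget = budget
        self.queries = 0

    def __call__(self, seq: Sequence[str]) -> int:
        if self.queries >= self.budget:
            raise RuntimeError("query budget exhausted")
        self.queries += 1
        return self._predict(seq)


def toy_classifier(seq: Sequence[str]) -> int:
    """Hypothetical stand-in target: returns 1 (malicious) when more than
    half of the calls are in a 'suspicious' set, else 0 (benign)."""
    suspicious = {"WriteProcessMemory", "CreateRemoteThread"}
    frac = sum(call in suspicious for call in seq) / len(seq)
    return 1 if frac > 0.5 else 0


def insert_only_attack(
    seq: Sequence[str],
    oracle: LabelOnlyOracle,
    benign_calls: Sequence[str],
    rng: random.Random,
    batch: int = 2,
) -> Optional[List[str]]:
    """Insert benign calls at random positions until the label flips.
    Original calls are kept in order; returns None if the budget runs out."""
    adv = list(seq)
    while oracle.queries < oracle.budget:
        if oracle(adv) == 0:  # only the label is observed, no confidence score
            return adv
        for _ in range(batch):
            adv.insert(rng.randrange(len(adv) + 1), rng.choice(benign_calls))
    return None


malware = ["WriteProcessMemory", "CreateRemoteThread", "WriteProcessMemory", "CreateFile"]
oracle = LabelOnlyOracle(toy_classifier, budget=10)
adv = insert_only_attack(malware, oracle, ["GetTickCount", "Sleep"], random.Random(0))
print("queries used:", oracle.queries)
```

Inserting several calls between queries (the `batch` parameter) trades a longer adversarial sequence for fewer queries, which matters when each query to a cloud-hosted model has a cost.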
