Quantitative Analysis of Image Classification Techniques for Memory-Constrained Devices

May 11, 2020

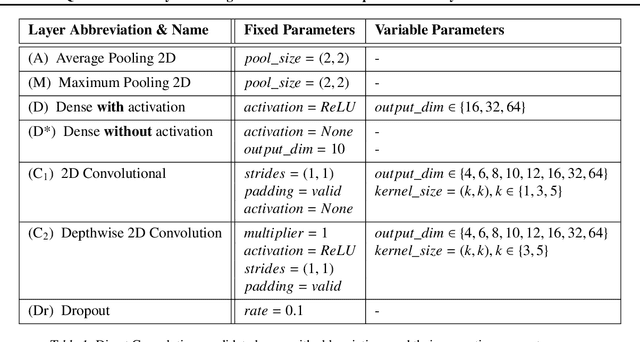

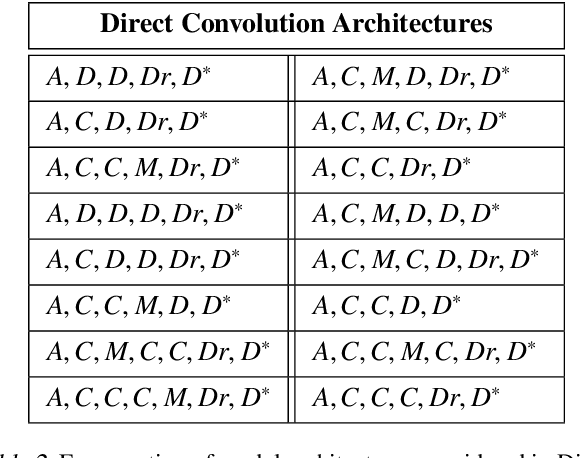

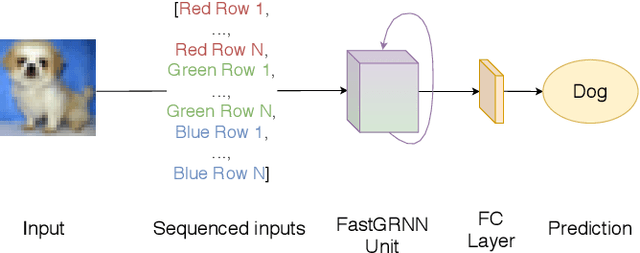

Convolutional Neural Networks, or CNNs, are undoubtedly the state of the art for image classification. However, they typically come at the cost of a large memory footprint. Recently, there has been significant progress in image classification on memory-constrained devices, such as Arduino Unos, with novel contributions like the ProtoNN, Bonsai and FastGRNN models. These methods have been shown to perform excellently on tasks such as speech recognition or optical character recognition using MNIST, but their potential on more complex, multi-channel and multi-class image classification has yet to be determined. This paper presents a comprehensive analysis showing that, even in memory-constrained environments, CNNs implemented memory-optimally using Direct Convolutions outperform ProtoNN, Bonsai and FastGRNN models on 3-channel image classification using CIFAR-10. For our analysis, we propose new methods of adjusting the FastGRNN model to work with multi-channel images and then evaluate each algorithm with memory budgets of 8KB, 16KB, 32KB, 64KB and 128KB to show quantitatively that CNNs are still state-of-the-art in image classification, even when memory size is constrained.
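
To make the idea of a direct convolution under a fixed memory budget concrete, the following is a minimal sketch and not the paper's implementation. It computes a stride-1, unpadded convolution with plain loops (strictly a cross-correlation, as in most deep learning frameworks), so no intermediate patch matrix is allocated. It then checks whether a layer's parameters fit one of the budgets listed in the abstract. The function names, the 8-bit weight assumption (`bytes_per_weight=1`) and the choice to count only parameters rather than activations are all illustrative assumptions.

```python
def direct_conv2d(image, kernels):
    """Loop-based convolution (stride 1, no padding).

    image:   list of C channels, each an H x W list of lists
    kernels: list of K filters, each shaped C x kH x kW
    Returns K feature maps of size (H - kH + 1) x (W - kW + 1).
    The only extra memory allocated is the output itself.
    """
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    k_h, k_w = len(kernels[0][0]), len(kernels[0][0][0])
    out_h, out_w = height - k_h + 1, width - k_w + 1

    output = []
    for kernel in kernels:
        fmap = [[0.0] * out_w for _ in range(out_h)]
        for y in range(out_h):
            for x in range(out_w):
                acc = 0.0
                # Accumulate over every channel and kernel position in place.
                for c in range(channels):
                    for dy in range(k_h):
                        for dx in range(k_w):
                            acc += image[c][y + dy][x + dx] * kernel[c][dy][dx]
                fmap[y][x] = acc
        output.append(fmap)
    return output


def conv_layer_bytes(in_ch, out_ch, k, bytes_per_weight=1):
    """Parameter storage for one conv layer: weights plus one bias per filter.

    bytes_per_weight=1 assumes 8-bit quantized weights (an assumption here).
    """
    return (in_ch * k * k * out_ch + out_ch) * bytes_per_weight


def fits_budget(total_bytes, budget_kb):
    """True if total_bytes fits within budget_kb kilobytes (1 KB = 1024 bytes)."""
    return total_bytes <= budget_kb * 1024


if __name__ == "__main__":
    # A tiny 3-channel 4x4 input of ones and a single 3x3 filter of ones.
    image = [[[1.0] * 4 for _ in range(4)] for _ in range(3)]
    kernels = [[[[1.0] * 3 for _ in range(3)] for _ in range(3)]]
    print(direct_conv2d(image, kernels))

    # Parameter size of a hypothetical first layer: 3 -> 8 channels, 3x3 kernels.
    layer_bytes = conv_layer_bytes(in_ch=3, out_ch=8, k=3)
    for budget_kb in (8, 16, 32, 64, 128):
        print(budget_kb, "KB:", fits_budget(layer_bytes, budget_kb))
```

In practice a budget check for a real device would also need to account for activation buffers and any runtime overhead, which this sketch deliberately leaves out.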
