Quantifying the generalization error in deep learning in terms of data distribution and neural network smoothness

Paper and Code

May 27, 2019

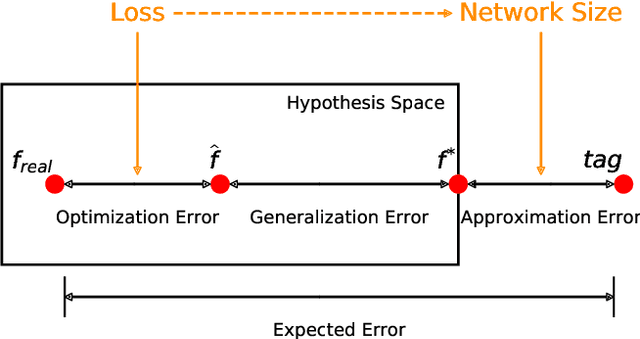

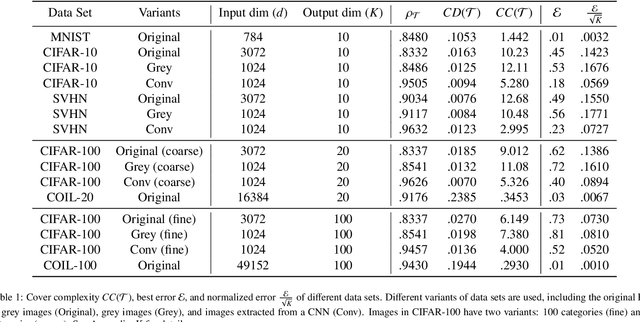

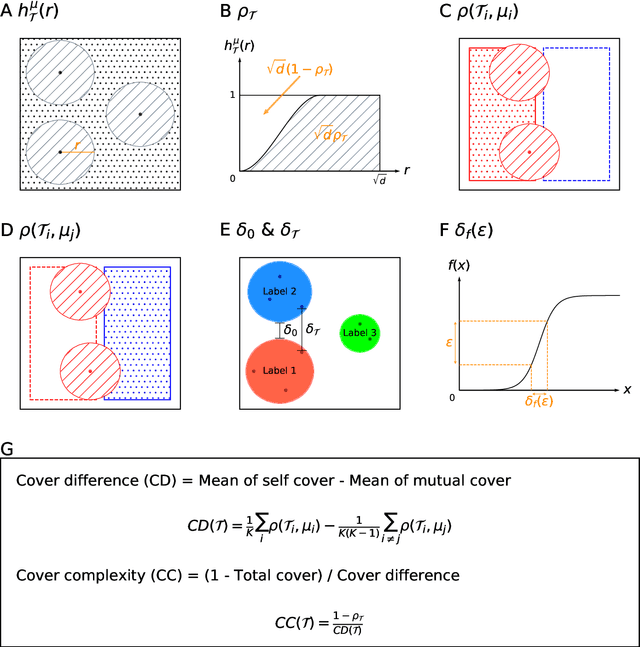

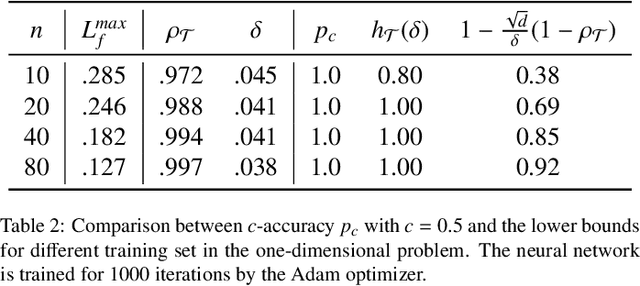

The accuracy of deep learning, i.e., of deep neural networks, can be characterized by dividing the total error into three main types: approximation error, optimization error, and generalization error. Whereas there are some satisfactory answers to the problems of approximation and optimization, much less is known about the theory of generalization. Most existing theoretical work on generalization fails to explain the performance of neural networks in practice. To derive a meaningful bound, we study the generalization error of neural networks for classification problems in terms of the data distribution and neural network smoothness. We introduce the cover complexity (CC) to measure the difficulty of learning a data set, and the inverse of the modulus of continuity to quantify neural network smoothness. A quantitative bound for the expected accuracy/error is derived by considering both the CC and neural network smoothness. We validate our theoretical results on several image data sets. The numerical results verify that the expected error of trained networks, scaled by the square root of the number of classes, has a linear relationship with the CC. In addition, we observe a clear consistency between test loss and neural network smoothness during the training process.
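
To make the smoothness notion concrete, here is a minimal sketch of how the modulus of continuity and its inverse could be estimated from samples. It assumes the standard textbook definition, omega_f(delta) = sup over ||x - y|| <= delta of ||f(x) - f(y)||, which may differ in detail from the definition used in the paper. The function names `empirical_modulus` and `empirical_inverse_modulus` and the grid of candidate `deltas` are hypothetical, chosen only for illustration. Because the supremum is taken over a finite sample, the first function gives only a lower bound on the true modulus.

```python
import numpy as np


def empirical_modulus(f, xs, delta):
    """Sample-based lower bound on omega_f(delta).

    Assumes the standard definition
        omega_f(delta) = sup_{||x - y|| <= delta} ||f(x) - f(y)||,
    restricted to pairs of points taken from xs.
    """
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray([f(x) for x in xs], dtype=float).reshape(len(xs), -1)
    # Pairwise Euclidean distances between inputs and between outputs.
    dx = np.linalg.norm(xs[:, None, :] - xs[None, :, :], axis=-1)
    dy = np.linalg.norm(ys[:, None, :] - ys[None, :, :], axis=-1)
    # The diagonal (distance 0) always qualifies, so the mask is never empty.
    return float(dy[dx <= delta].max())


def empirical_inverse_modulus(f, xs, eps, deltas):
    """Largest candidate delta whose empirical modulus stays within eps.

    A larger value means the function can move further in input space
    before its output changes by more than eps, i.e. it is smoother.
    """
    feasible = [d for d in deltas if empirical_modulus(f, xs, d) <= eps]
    return max(feasible) if feasible else 0.0


# Toy usage: a linear map with slope 2 on four equally spaced 1-D points.
xs = [[0.0], [1.0], [2.0], [3.0]]
f = lambda x: 2.0 * x
print(empirical_modulus(f, xs, delta=1.0))
print(empirical_inverse_modulus(f, xs, eps=4.0, deltas=[0.5, 1.0, 2.0, 3.0]))
```

For a trained classifier, `f` would be the network's output map and `xs` a batch of inputs; the pairwise distance matrices grow quadratically with the batch size, so this sketch is only practical for small samples.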
