Quantifying the Effects of Data Augmentation

Paper and Code

Feb 18, 2022

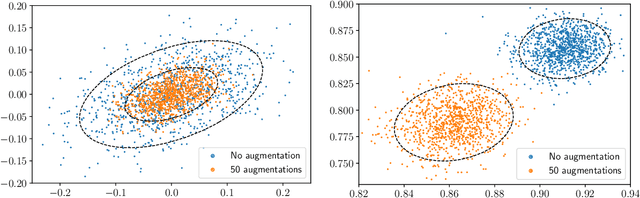

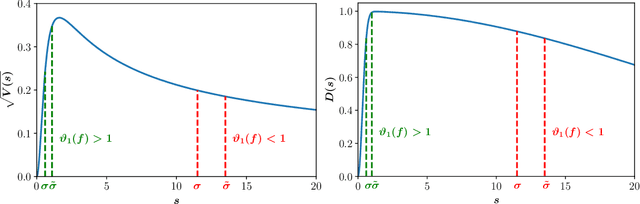

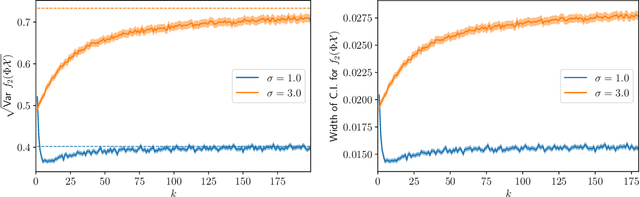

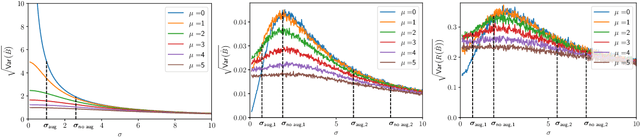

We provide results that exactly quantify how data augmentation affects the convergence rate and variance of estimates. They lead to some unexpected findings: Contrary to common intuition, data augmentation may increase rather than decrease uncertainty of estimates, such as the empirical prediction risk. Our main theoretical tool is a limit theorem for functions of randomly transformed, high-dimensional random vectors. The proof draws on work in probability on noise stability of functions of many variables. The pathological behavior we identify is not a consequence of complex models, but can occur even in the simplest settings -- one of our examples is a linear ridge regressor with two parameters. On the other hand, our results also show that data augmentation can have real, quantifiable benefits.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge