Pruning Coherent Integrated Photonic Neural Networks Using the Lottery Ticket Hypothesis


Dec 14, 2021

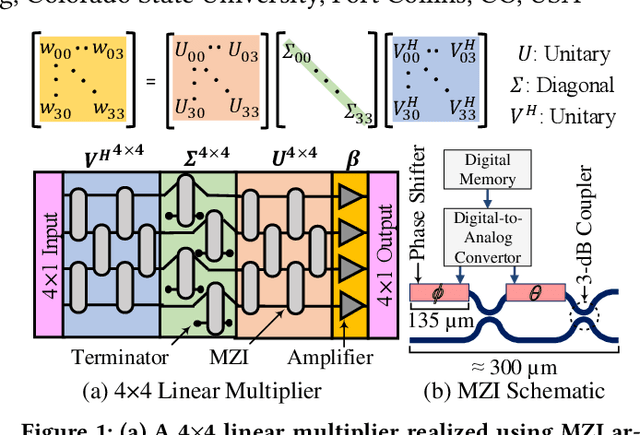

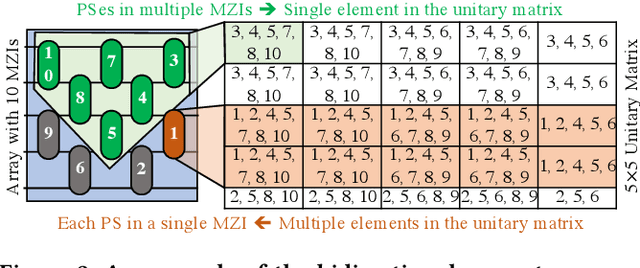

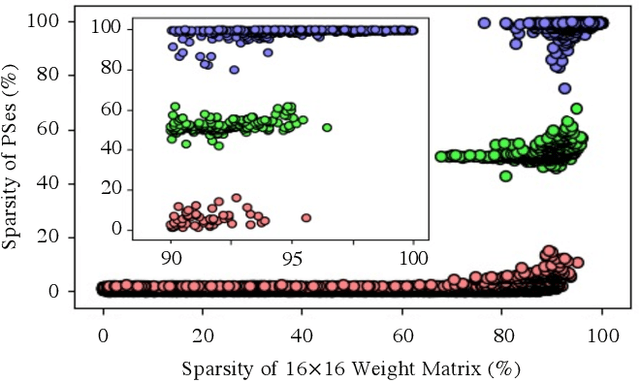

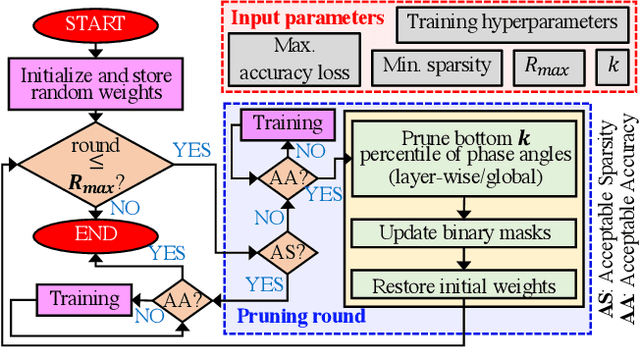

Singular-value-decomposition-based coherent integrated photonic neural networks (SC-IPNNs) have a large footprint, suffer from high static power consumption for training and inference, and cannot be pruned using conventional DNN pruning techniques. We leverage the lottery ticket hypothesis to propose the first hardware-aware pruning method for SC-IPNNs that alleviates these challenges by minimizing the number of weight parameters. We prune a multi-layer perceptron-based SC-IPNN and show that up to 89% of the phase angles, which correspond to weight parameters in SC-IPNNs, can be pruned with a negligible accuracy loss (smaller than 5%) while reducing the static power consumption by up to 86%.
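The lottery-ticket idea mentioned above can be illustrated with a minimal sketch of iterative pruning with rewinding, applied to an array of phase angles. This is not the authors' hardware-aware method, whose details are not given here. The magnitude-based pruning criterion, the function names `prune_step` and `lottery_ticket_prune`, the `train_fn` callback, and the toy training stand-in are all assumptions made for illustration.

```python
import numpy as np


def prune_step(values, mask, fraction):
    """Deactivate the smallest-magnitude `fraction` of the still-active entries.

    Assumption: magnitude is used as the pruning criterion; the paper's
    hardware-aware criterion may differ.
    """
    active = np.flatnonzero(mask)
    n_prune = int(np.floor(fraction * active.size))
    if n_prune == 0:
        return mask.copy()
    # Active indices ordered by |value|, smallest first.
    order = active[np.argsort(np.abs(values.ravel()[active]), kind="stable")]
    new_mask = mask.copy().ravel()
    new_mask[order[:n_prune]] = False
    return new_mask.reshape(mask.shape)


def lottery_ticket_prune(init, train_fn, fraction=0.2, rounds=5):
    """Iterative pruning with rewinding to the initial values.

    Each round: train from the masked initial values, prune a fraction of
    the surviving entries, then rewind the survivors to `init`.
    `train_fn(values, mask)` is a hypothetical callback returning trained values.
    """
    mask = np.ones(init.shape, dtype=bool)
    for _ in range(rounds):
        trained = train_fn(init * mask, mask)
        mask = prune_step(trained, mask, fraction)
    # The "winning ticket": surviving entries at their initial values.
    return init * mask, mask


# Toy usage with a stand-in for training (a real train_fn would optimise
# the phase angles against a task loss).
rng = np.random.default_rng(0)
init_phases = rng.uniform(-np.pi, np.pi, size=(4, 4))


def toy_train(phases, mask):
    return phases * mask


ticket, mask = lottery_ticket_prune(init_phases, toy_train, fraction=0.5, rounds=3)
print("fraction of phase angles kept:", mask.mean())
```

In this sketch a pruned entry is simply set to zero. Whether a zeroed phase angle translates into saved static power depends on the phase-shifter hardware, which the sketch does not model.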
