Provable More Data Hurt in High Dimensional Least Squares Estimator

Paper and Code

Aug 14, 2020

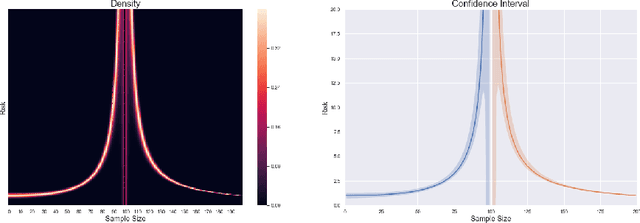

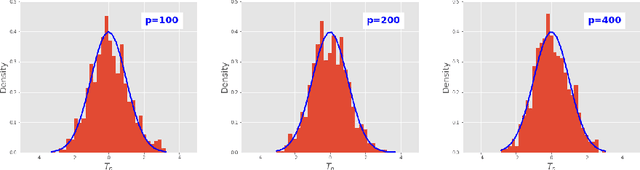

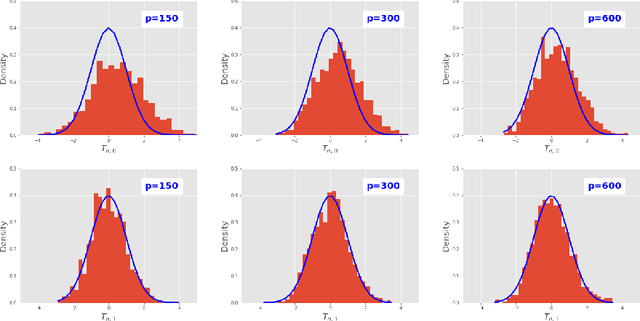

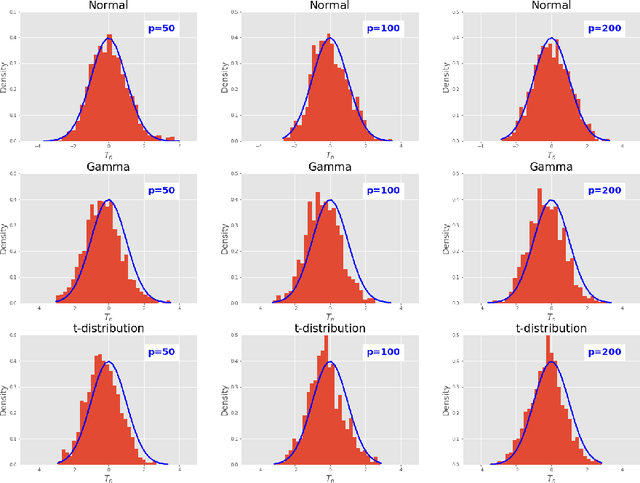

This paper investigates the finite-sample prediction risk of the high-dimensional least squares estimator. We derive the central limit theorem for the prediction risk when both the sample size and the number of features tend to infinity. Furthermore, the finite-sample distribution and the confidence interval of the prediction risk are provided. Our theoretical results demonstrate the sample-wise nonmonotonicity of the prediction risk and confirm "more data hurt" phenomenon.

View paper on

OpenReview

OpenReview

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge