Prototype-based classifiers in the presence of concept drift: A modelling framework

Mar 18, 2019

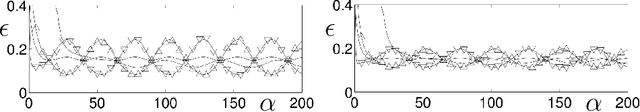

We present a modelling framework for the investigation of prototype-based classifiers in non-stationary environments. Specifically, we study Learning Vector Quantization (LVQ) systems trained from a stream of high-dimensional, clustered data. We consider standard winner-takes-all updates known as LVQ1. Statistical properties of the input data change on the time scale defined by the training process. We apply analytical methods borrowed from statistical physics which have been used earlier for the exact description of learning in stationary environments. The suggested framework facilitates the computation of learning curves in the presence of virtual and real concept drift. Here we focus on time-dependent class bias in the training data. First results demonstrate that, while basic LVQ algorithms are suitable for training in non-stationary environments, weight decay as an explicit mechanism of forgetting does not improve the performance under the considered drift processes.
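The setting described in the abstract can be illustrated with a small simulation. The following is a minimal sketch, not the paper's analytical framework: it trains two LVQ1 prototypes on a stream of two Gaussian clusters whose class prior changes during training, with an optional weight decay term. All names (`drifting_stream`, `train_lvq1`, `bias_at`), the cluster geometry, the learning rate, and the multiplicative form of the decay are assumptions chosen for illustration.

```python
import random


def drifting_stream(n_steps, dim, bias_at, separation=2.0, seed=0):
    """Yield (x, label) pairs from two unit-variance Gaussian clusters.

    bias_at(t) gives the prior probability of class 1 at step t, so a
    time-varying bias_at models a drifting class bias (assumed setup).
    """
    rng = random.Random(seed)
    for t in range(n_steps):
        label = 1 if rng.random() < bias_at(t) else 0
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        # Cluster centres sit at +/- separation along the first axis.
        x[0] += separation if label == 1 else -separation
        yield x, label


def nearest(prototypes, x):
    """Index of the prototype closest to x in squared Euclidean distance."""
    dists = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in prototypes]
    return dists.index(min(dists))


def train_lvq1(stream, dim, eta=0.05, decay=0.0, seed=1):
    """Online LVQ1 with one prototype per class and optional weight decay."""
    rng = random.Random(seed)
    proto_labels = [0, 1]
    # Small random initialisation near the origin.
    prototypes = [[rng.gauss(0.0, 0.01) for _ in range(dim)]
                  for _ in proto_labels]
    for x, y in stream:
        if decay > 0.0:
            # Assumed form of forgetting: shrink every prototype slightly.
            prototypes = [[(1.0 - decay) * wi for wi in w] for w in prototypes]
        k = nearest(prototypes, x)  # winner takes all
        # Attract the winner if its label matches the sample, else repel it.
        sign = 1.0 if proto_labels[k] == y else -1.0
        prototypes[k] = [wi + eta * sign * (xi - wi)
                         for xi, wi in zip(x, prototypes[k])]
    return prototypes, proto_labels


def accuracy(prototypes, proto_labels, samples):
    """Fraction of (x, label) samples assigned to the correct class."""
    hits = sum(proto_labels[nearest(prototypes, x)] == y for x, y in samples)
    return hits / len(samples)


if __name__ == "__main__":
    n, dim = 2000, 20
    ramp = lambda t: 0.2 + 0.6 * t / n  # class-1 prior drifts from 0.2 to 0.8
    test_set = list(drifting_stream(1000, dim, lambda t: 0.5, seed=42))
    for decay in (0.0, 0.01):
        protos, labels = train_lvq1(drifting_stream(n, dim, ramp), dim,
                                    decay=decay)
        print(f"decay={decay}: accuracy={accuracy(protos, labels, test_set):.3f}")
```

Running the script compares plain LVQ1 with a weight-decay variant on the same drifting stream. The numbers it prints come from this toy setup only and say nothing quantitative about the results reported in the paper.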
