ProtoMIL: Multiple Instance Learning with Prototypical Parts for Fine-Grained Interpretability

Aug 24, 2021

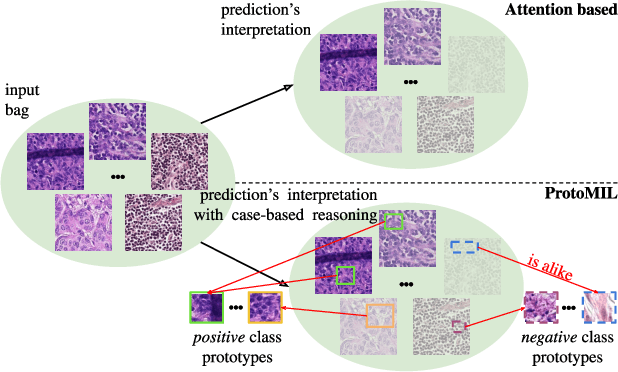

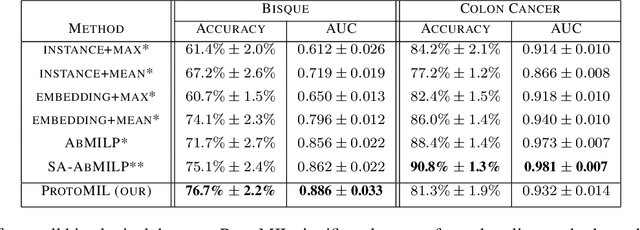

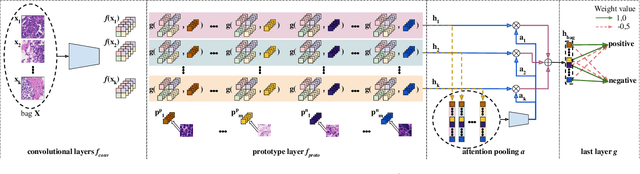

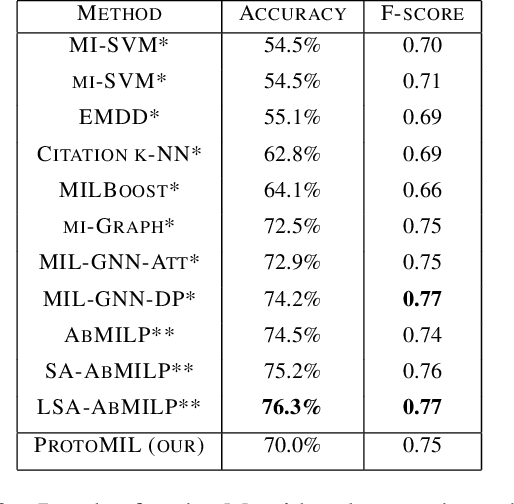

Multiple Instance Learning (MIL) is gaining popularity in many real-life machine learning applications due to its weakly supervised nature. However, work on explaining MIL models lags behind, and it is usually limited to presenting the instances of a bag that are crucial for a particular prediction. In this paper, we fill this gap by introducing ProtoMIL, a novel self-explainable MIL method inspired by the case-based reasoning process that operates on visual prototypes. By incorporating prototypical features into the description of objects, ProtoMIL combines model accuracy with fine-grained interpretability to an unprecedented degree, as we demonstrate in experiments on five recognized MIL datasets.
