Probing Model Signal-Awareness via Prediction-Preserving Input Minimization

Nov 25, 2020

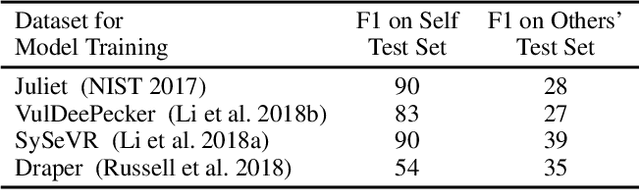

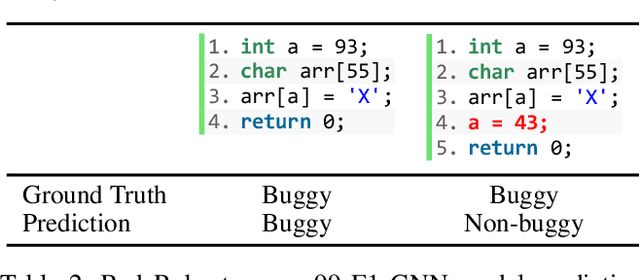

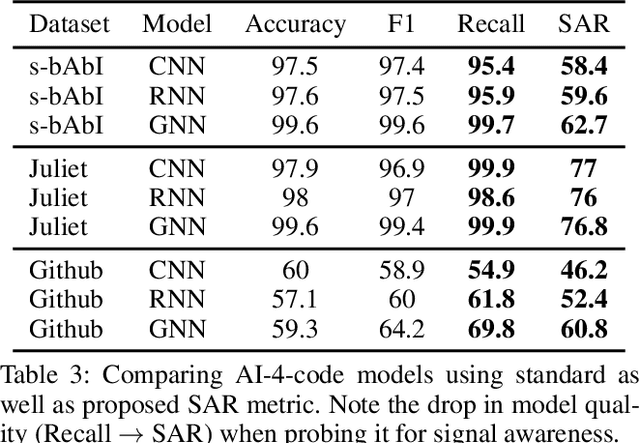

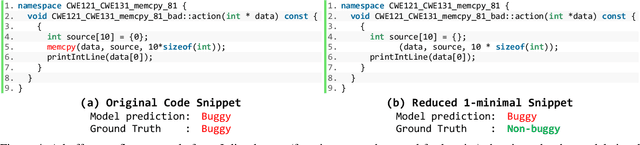

This work explores the signal awareness of AI models for source code understanding. Using software vulnerability detection as a use case, we evaluate the models' ability to capture the correct vulnerability signals when producing their predictions. Our prediction-preserving input minimization (P2IM) approach systematically reduces the original source code to a minimal snippet that the model needs in order to maintain its prediction. The model's reliance on incorrect signals is uncovered when the vulnerability in the original code is missing from the minimal snippet, yet the model predicts both as vulnerable. We apply P2IM to three state-of-the-art neural network models across multiple datasets and measure their signal awareness using a new metric we propose, Signal-aware Recall (SAR). The results show a sharp drop in the models' recall from the high 90s to the sub-60s under the new metric, suggesting that the models are picking up a lot of noise or dataset nuances while learning their vulnerability detection logic.
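The idea behind P2IM and SAR can be illustrated with a small sketch. The abstract does not specify the reduction algorithm or the exact SAR formula, so everything below is an assumption made for illustration: `minimize` is a simple greedy, chunk-halving line reducer, `signal_aware_recall` counts a true positive only if the minimal snippet still contains the vulnerability, and the two toy "models" and the `has_vuln` checker are hypothetical stand-ins for a real neural model and a real vulnerability oracle.

```python
from typing import Callable, List, Sequence, Tuple

Snippet = List[str]
Model = Callable[[Snippet], bool]  # True means "predicted vulnerable"


def minimize(lines: Sequence[str], predict: Model) -> Snippet:
    """Greedily drop chunks of lines while the model's prediction is preserved."""
    target = predict(list(lines))
    current = list(lines)
    chunk = len(current) // 2 or 1
    while True:
        i, shrunk = 0, False
        while i < len(current):
            candidate = current[:i] + current[i + chunk:]
            # Keep the smaller snippet only if it is non-empty and the
            # prediction is unchanged.
            if candidate and predict(candidate) == target:
                current, shrunk = candidate, True
            else:
                i += chunk
        if chunk > 1:
            chunk //= 2          # refine the granularity
        elif not shrunk:
            break                # single-line pass removed nothing: done
    return current


def signal_aware_recall(
    vulnerable_samples: Sequence[Snippet],
    predict: Model,
    has_vuln: Callable[[Snippet], bool],
) -> Tuple[float, float]:
    """Return (recall, SAR) over samples that are all truly vulnerable.

    Assumed definition: a detection counts toward SAR only if the
    vulnerability is still present in the minimized snippet.
    """
    tp = sa_tp = 0
    for sample in vulnerable_samples:
        if predict(list(sample)):
            tp += 1
            if has_vuln(minimize(sample, predict)):
                sa_tp += 1
    n = len(vulnerable_samples)
    return tp / n, sa_tp / n


# Hypothetical oracle and models, for illustration only.
def has_vuln(snippet: Snippet) -> bool:
    return any("strcpy(" in line for line in snippet)


def noisy_model(snippet: Snippet) -> bool:
    # Keys on a spurious feature (a buffer declaration), not the unsafe call.
    return any("buf[" in line for line in snippet)


def aware_model(snippet: Snippet) -> bool:
    return any("strcpy(" in line for line in snippet)


sample = ["char buf[8];", "int n = read_len();", "strcpy(buf, src);", "return n;"]
noisy_scores = signal_aware_recall([sample], noisy_model, has_vuln)
aware_scores = signal_aware_recall([sample], aware_model, has_vuln)
```

On this toy sample both models flag the code as vulnerable, so plain recall cannot tell them apart. The noisy model's minimal snippet reduces to the buffer declaration alone, which no longer contains the unsafe call, so its detection does not count toward SAR, whereas the signal-aware model's minimal snippet retains the `strcpy` line.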
