Probing and Fine-tuning Reading Comprehension Models for Few-shot Event Extraction

Oct 21, 2020

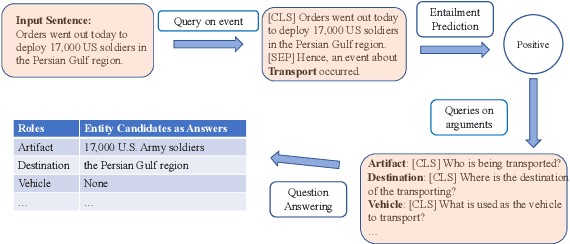

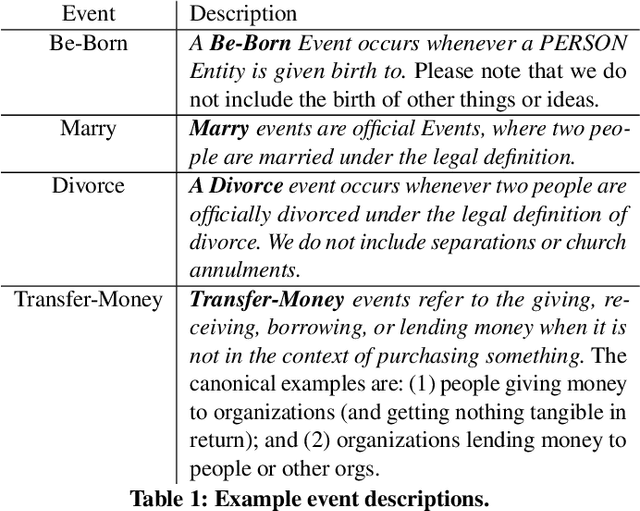

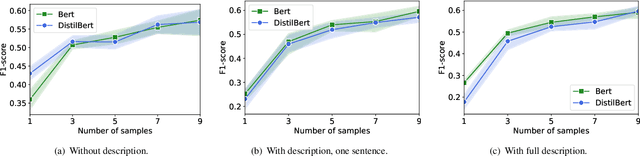

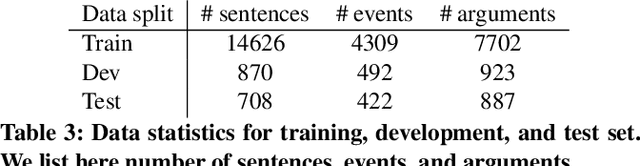

We study the problem of event extraction from text data, which requires detecting both target event types and their arguments. Typically, both the event detection and argument detection subtasks are formulated as supervised sequence labeling problems. We argue that event extraction models trained this way are inherently label-hungry and can generalize poorly across domains and text genres. We propose a reading comprehension framework for event extraction. Specifically, we formulate event detection as a textual entailment prediction problem, and argument detection as a question answering problem. By constructing proper query templates, our approach can effectively distill rich knowledge about tasks and label semantics from pretrained reading comprehension models. Moreover, our model can be fine-tuned with a small amount of data to boost its performance. Our experimental results show that our method performs strongly on zero-shot and few-shot event extraction, and it achieves state-of-the-art performance on the ACE 2005 benchmark when trained with full supervision.
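The reading comprehension framing described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the query templates, the two-type event schema, and the helper names (`detect_events`, `argument_questions`, `extract_arguments`) are hypothetical, and the keyword-based `toy_entail` and `toy_qa` functions only stand in for pretrained entailment and question answering models.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical query templates; the paper's actual templates may differ.
EVENT_HYPOTHESIS = "This text is about {description}."
ARGUMENT_QUESTION = "What is the {role} in the {event} event?"

# Illustrative label semantics (assumption): event type -> description and roles.
EVENT_SCHEMA: Dict[str, Dict] = {
    "Attack": {"description": "an attack", "roles": ["attacker", "target", "place"]},
    "Transport": {"description": "someone moving or being moved",
                  "roles": ["artifact", "destination", "origin"]},
}

# An entailment scorer maps (premise, hypothesis) -> probability of entailment.
EntailFn = Callable[[str, str], float]
# A QA model maps (context, question) -> answer span, or None if unanswerable.
QAFn = Callable[[str, str], Optional[str]]


def detect_events(sentence: str, entail: EntailFn, threshold: float = 0.5) -> List[str]:
    """Event detection as entailment: keep each type whose hypothesis is entailed."""
    detected = []
    for event_type, info in EVENT_SCHEMA.items():
        hypothesis = EVENT_HYPOTHESIS.format(description=info["description"])
        if entail(sentence, hypothesis) >= threshold:
            detected.append(event_type)
    return detected


def argument_questions(event_type: str) -> Dict[str, str]:
    """Build one natural-language question per argument role of an event type."""
    return {
        role: ARGUMENT_QUESTION.format(role=role, event=event_type.lower())
        for role in EVENT_SCHEMA[event_type]["roles"]
    }


def extract_arguments(sentence: str, event_type: str, qa: QAFn) -> Dict[str, str]:
    """Argument detection as QA: ask each role's question, keep answered roles."""
    arguments = {}
    for role, question in argument_questions(event_type).items():
        answer = qa(sentence, question)
        if answer is not None:
            arguments[role] = answer
    return arguments


def toy_entail(premise: str, hypothesis: str) -> float:
    # Keyword stand-in for a pretrained entailment model (hypothetical).
    return 0.9 if "attack" in hypothesis and "bombed" in premise else 0.1


def toy_qa(context: str, question: str) -> Optional[str]:
    # Lookup stand-in for a pretrained extractive QA model (hypothetical).
    answers = {"attacker": "Rebels", "target": "the bridge"}
    for role, span in answers.items():
        if f" {role} " in question:
            return span
    return None


if __name__ == "__main__":
    sentence = "Rebels bombed the bridge."
    for event_type in detect_events(sentence, toy_entail):
        print(event_type, extract_arguments(sentence, event_type, toy_qa))
```

Because event types and roles enter only through natural-language templates in this sketch, supporting a new type means adding a schema entry rather than training a new label-specific classifier, which is what makes zero-shot use plausible; fine-tuning on a few labeled examples would then adjust the underlying entailment and QA models.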
