Proactive Schemes: A Survey of Adversarial Attacks for Social Good

Paper and Code

Sep 24, 2024

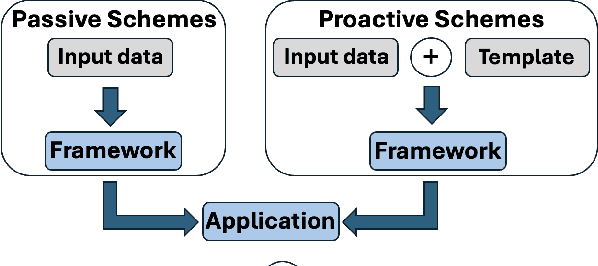

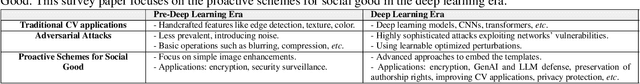

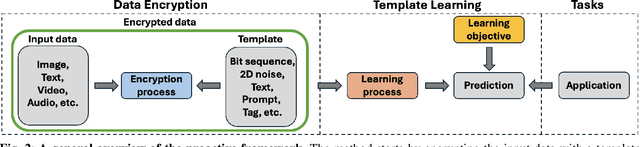

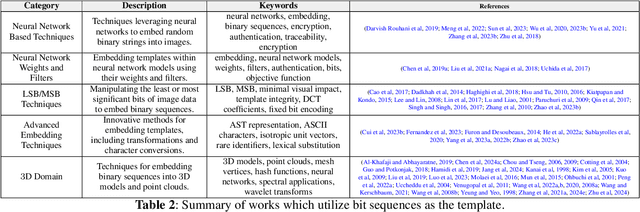

Adversarial attacks in computer vision exploit the vulnerabilities of machine learning models by introducing subtle perturbations to input data, often leading to incorrect predictions or classifications. These attacks have grown more sophisticated with the advent of deep learning and pose significant challenges in critical applications, where they can harm society. However, there is also a rich line of research that takes a transformative perspective and leverages adversarial techniques for social good. Specifically, we examine the rise of proactive schemes: methods that encrypt input data using additional signals, termed templates, to enhance the performance of deep learning models. By embedding these imperceptible templates into digital media, proactive schemes aid performance across applications ranging from simple image enhancement to complex deep learning frameworks. Passive schemes, by contrast, do not change the input data distribution for their framework. The survey delves into the methodologies behind these proactive schemes, their encryption and learning processes, and their application to modern computer vision and natural language processing tasks. It also discusses the challenges, potential vulnerabilities, and future directions for proactive schemes, ultimately highlighting their potential to foster the responsible and secure advancement of deep learning technologies.
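To make the idea of embedding an imperceptible template concrete, the following is a minimal sketch of one possible encryption step: adding a low-magnitude template to an image. It is an illustration under stated assumptions, not a method taken from the survey. The function name `embed_template`, the `strength` parameter, the additive formulation, and the random ±1 template are all hypothetical choices; the schemes the survey covers may use learned templates or other embedding operations.

```python
import numpy as np


def embed_template(image: np.ndarray, template: np.ndarray, strength: float = 0.02) -> np.ndarray:
    """Additively embed a template into an image with pixel values in [0, 1].

    Assumption: the additive form and the `strength` scaling are illustrative,
    not a formulation prescribed by the survey.
    """
    if image.shape != template.shape:
        raise ValueError("image and template must have the same shape")
    # Scale the template down so the change stays small, then keep pixels in range.
    encrypted = image + strength * template
    return np.clip(encrypted, 0.0, 1.0)


# Hypothetical inputs: a random "image" and a fixed random +/-1 template.
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
template = rng.choice([-1.0, 1.0], size=image.shape)

encrypted = embed_template(image, template, strength=0.02)

# The per-pixel change is bounded by `strength`, which is what keeps it subtle.
max_change = float(np.max(np.abs(encrypted - image)))
```

In this sketch a downstream model would receive `encrypted` rather than `image`. A passive scheme, by contrast, would operate on `image` unchanged.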
