Privacy-Utility Tradeoff of OLS with Random Projections

Paper and Code

Sep 03, 2023

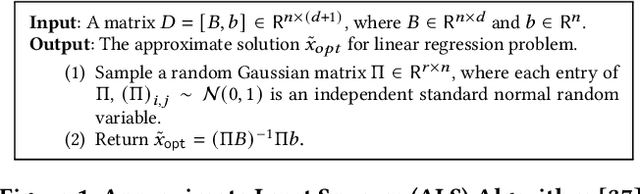

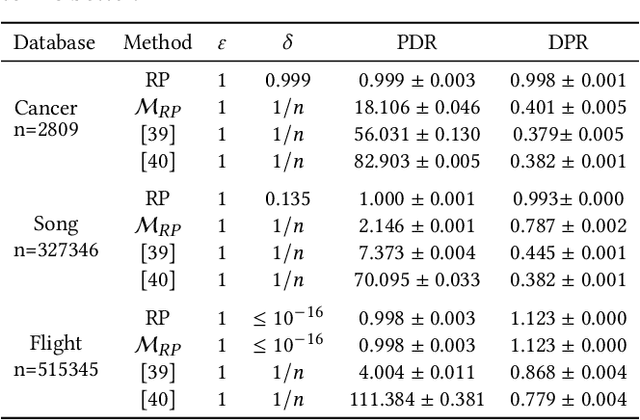

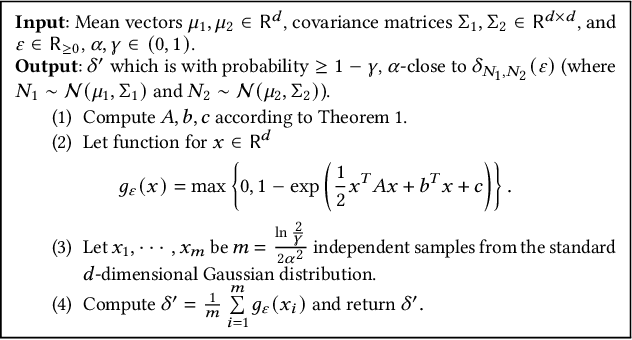

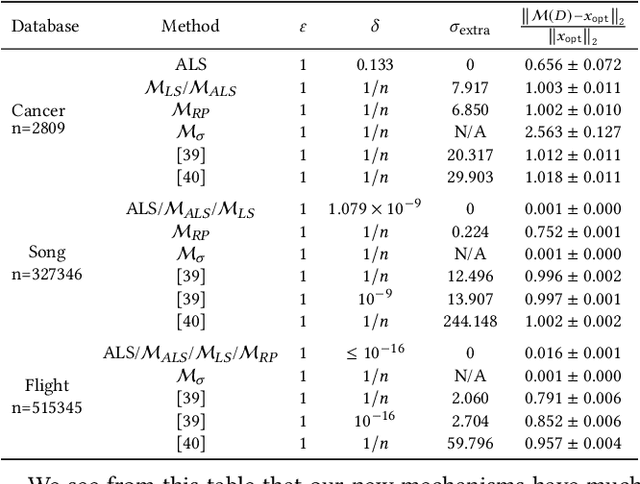

We study the differential privacy (DP) of a core ML problem, linear ordinary least squares (OLS), a.k.a. $\ell_2$-regression. Our key result is that the approximate LS algorithm (ALS) (Sarlos, 2006), a randomized solution to the OLS problem primarily used to improve performance on large datasets, also preserves privacy. ALS achieves a better privacy/utility tradeoff, without modifications or further noising, when compared to alternative private OLS algorithms which modify and/or noise OLS. We give the first {\em tight} DP-analysis for the ALS algorithm and the standard Gaussian mechanism (Dwork et al., 2014) applied to OLS. Our methodology directly improves the privacy analysis of (Blocki et al., 2012) and (Sheffet, 2019)) and introduces new tools which may be of independent interest: (1) the exact spectrum of $(\epsilon, \delta)$-DP parameters (``DP spectrum") for mechanisms whose output is a $d$-dimensional Gaussian, and (2) an improved DP spectrum for random projection (compared to (Blocki et al., 2012) and (Sheffet, 2019)). All methods for private OLS (including ours) assume, often implicitly, restrictions on the input database, such as bounds on leverage and residuals. We prove that such restrictions are necessary. Hence, computing the privacy of mechanisms such as ALS must estimate these database parameters, which can be infeasible in big datasets. For more complex ML models, DP bounds may not even be tractable. There is a need for blackbox DP-estimators (Lu et al., 2022) which empirically estimate a data-dependent privacy. We demonstrate the effectiveness of such a DP-estimator by empirically recovering a DP-spectrum that matches our theory for OLS. This validates the DP-estimator in a nontrivial ML application, opening the door to its use in more complex nonlinear ML settings where theory is unavailable.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge