Privacy for Free: Communication-Efficient Learning with Differential Privacy Using Sketches

Dec 06, 2019

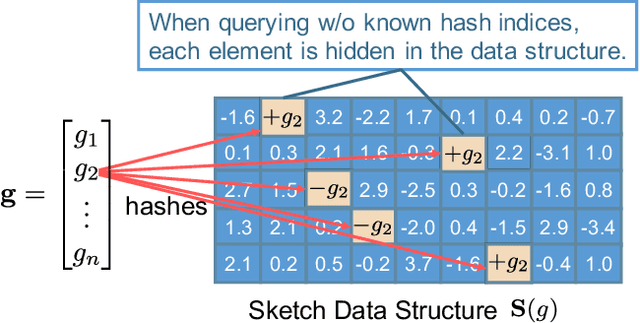

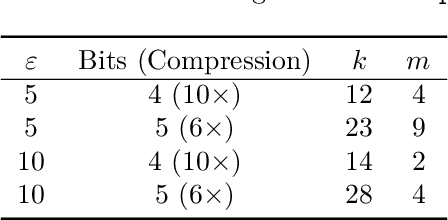

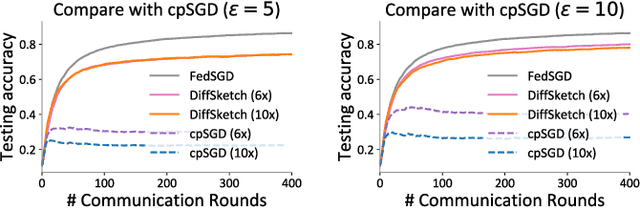

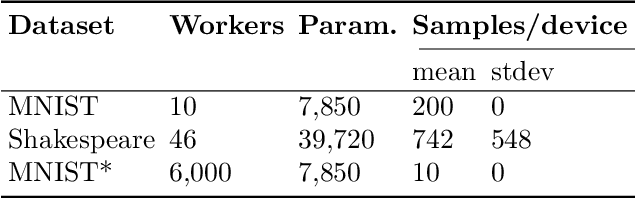

Communication and privacy are two critical concerns in distributed learning. Many existing works treat these concerns separately. In this work, we argue that a natural connection exists between methods for communication reduction and privacy preservation in the context of distributed machine learning. In particular, we prove that Count Sketch, a simple method for data stream summarization, has inherent differential privacy properties. Using these derived privacy guarantees, we propose a novel sketch-based framework (DiffSketch) for distributed learning, where we compress the transmitted messages via sketches to simultaneously achieve communication efficiency and provable privacy benefits. Our evaluation demonstrates that DiffSketch can provide strong differential privacy guarantees (e.g., $\varepsilon = 1$) and reduce communication by 20-50× with only marginal decreases in accuracy. Compared to baselines that treat privacy and communication separately, DiffSketch improves absolute test accuracy by 5%-50% while offering the same privacy guarantees and communication compression.
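The abstract does not specify DiffSketch's sketch dimensions, hashing scheme, or privacy accounting. For orientation only, here is a minimal sketch of a standard Count Sketch compressing a gradient-like vector, assuming the hash and sign functions are stored as explicit lookup tables drawn from a seeded RNG. The class name `CountSketch` and the parameters `rows`, `cols`, and `seed` are hypothetical illustrations, not the paper's code, and no differential privacy analysis is included.

```python
import random
import statistics


class CountSketch:
    """Minimal Count Sketch for vectors of a fixed dimension (illustrative only).

    Assumption: hash and sign functions are explicit tables drawn from a
    seeded RNG, so workers sharing a seed share the same sketch layout.
    """

    def __init__(self, dim, rows, cols, seed=0):
        rng = random.Random(seed)
        self.rows, self.cols = rows, cols
        # bucket[j][i]: column that coordinate i is hashed to in row j
        self.bucket = [[rng.randrange(cols) for _ in range(dim)] for _ in range(rows)]
        # sign[j][i]: random +1/-1 attached to coordinate i in row j
        self.sign = [[rng.choice((-1, 1)) for _ in range(dim)] for _ in range(rows)]
        # The compressed message: rows * cols numbers instead of dim numbers
        self.table = [[0.0] * cols for _ in range(rows)]

    def add_vector(self, vec):
        # Each coordinate adds its signed value to one bucket per row
        for i, v in enumerate(vec):
            for j in range(self.rows):
                self.table[j][self.bucket[j][i]] += self.sign[j][i] * v

    def merge(self, other):
        # Count Sketch is linear: with identical hash/sign tables,
        # adding two tables gives the sketch of the summed vectors.
        for j in range(self.rows):
            for c in range(self.cols):
                self.table[j][c] += other.table[j][c]

    def estimate(self, i):
        # Estimate coordinate i as the median of its signed bucket values
        return statistics.median(
            self.sign[j][i] * self.table[j][self.bucket[j][i]]
            for j in range(self.rows)
        )


if __name__ == "__main__":
    dim = 1000
    grad = [0.0] * dim
    grad[42] = 5.0  # one large "heavy" coordinate
    grad[7] = -3.0
    sketch = CountSketch(dim, rows=5, cols=40, seed=1)
    sketch.add_vector(grad)
    print("entries sent:", sketch.rows * sketch.cols, "instead of", dim)
    print("estimate of grad[42]:", sketch.estimate(42))
```

Here a 1000-dimensional vector is summarized by a 5 × 40 table, a 5× reduction; larger vectors relative to the table give larger ratios. The `merge` method illustrates why sketches suit distributed training: a server can sum workers' tables directly when they share the same seed. The randomness in bucket collisions is the kind of property the abstract says it analyzes for privacy, but this sketch makes no privacy claim on its own.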