Prediction method of Soundscape Impressions using Environmental Sounds and Aerial Photographs


Sep 09, 2022

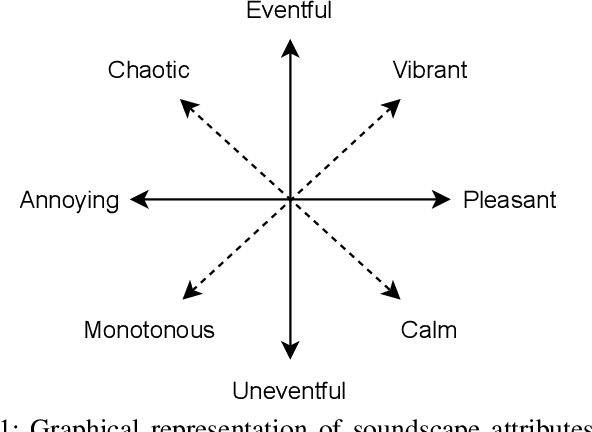

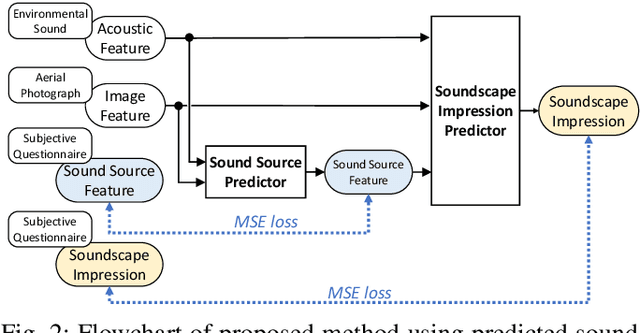

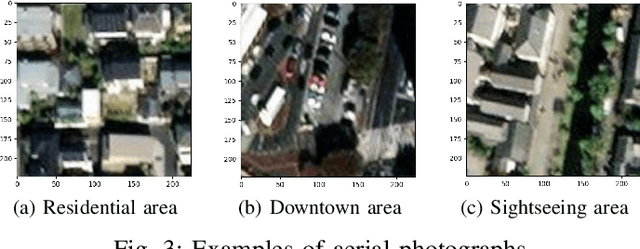

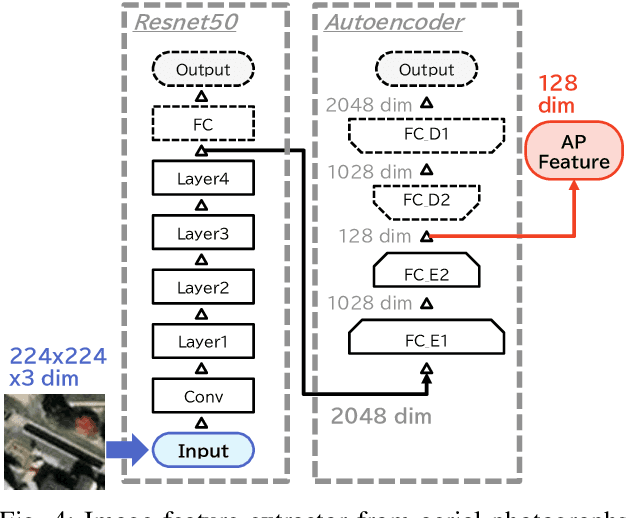

We investigate a method for quantifying city characteristics based on impressions of the sound environment. Quantifying these characteristics would benefit government policy planning, tourism projects, and similar efforts. In this study, we try to predict two soundscape impressions, namely pleasantness and eventfulness, using sound data collected by the cloud-sensing method. The collected sounds include metadata on the recording location obtained from the Global Positioning System (GPS). Furthermore, the soundscape impressions and sound-source features are separately assigned to the cloud-sensing sounds through assessments defined by the Swedish Soundscape-Quality Protocol, which assesses the quality of the acoustic environment. The prediction models are deep neural networks based on a multi-layer perceptron that take as input a 10-second sound and an aerial photograph of its recording location. The acoustic feature comprises the equivalent noise level and the outputs of octave-band filters computed every second, together with their statistics over the 10 s. The image feature is extracted from the aerial photograph using ResNet-50 and an autoencoder architecture. We perform comparison experiments to demonstrate the benefit of each feature. The comparison shows that aerial photographs and sound-source features are effective for predicting the impression information. Additionally, even when the sound-source features are themselves predicted from the acoustic and image features, they still predict the soundscape impressions well, with results close to those obtained with oracle sound-source features.
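The acoustic feature described above (per-second equivalent level and octave-band outputs, plus their statistics over 10 s) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the band centre frequencies, the FFT-based band summation in place of true octave-band filters, the choice of statistics (mean, standard deviation, minimum, maximum), and the use of uncalibrated dB relative to full scale are all assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np

# Assumed octave-band centre frequencies in Hz (not specified in the abstract).
CENTERS = (63, 125, 250, 500, 1000, 2000, 4000, 8000)
EPS = 1e-12  # avoids log10(0) for silent frames or empty bands


def octave_band_levels(frame, sr, centers=CENTERS):
    """Approximate octave-band levels (dB re full scale) for one frame.

    Uses FFT power summation per band as a stand-in for octave-band filters.
    """
    n = len(frame)
    power = np.abs(np.fft.rfft(frame)) ** 2
    freqs = np.fft.rfftfreq(n, d=1.0 / sr)
    levels = []
    for fc in centers:
        lo, hi = fc / np.sqrt(2.0), fc * np.sqrt(2.0)  # octave band edges
        mask = (freqs >= lo) & (freqs < hi)
        # Factor 2 accounts (approximately) for the one-sided spectrum.
        band_power = 2.0 * power[mask].sum() / n**2
        levels.append(10.0 * np.log10(band_power + EPS))
    return np.array(levels)


def acoustic_feature(signal, sr, centers=CENTERS):
    """Per-second level and band levels, plus their statistics over the clip."""
    n_sec = len(signal) // sr
    rows = []
    for i in range(n_sec):
        frame = signal[i * sr:(i + 1) * sr]
        # Equivalent level of the 1 s frame (uncalibrated, unweighted).
        leq = 10.0 * np.log10(np.mean(frame**2) + EPS)
        rows.append(np.concatenate(([leq], octave_band_levels(frame, sr, centers))))
    per_second = np.array(rows)  # shape: (n_sec, 1 + number of bands)
    stats = np.concatenate([
        per_second.mean(axis=0),
        per_second.std(axis=0),
        per_second.min(axis=0),
        per_second.max(axis=0),
    ])
    return np.concatenate([per_second.ravel(), stats])


# Usage: a 10-second, 1 kHz test tone sampled at 16 kHz.
sr = 16000
t = np.arange(10 * sr) / sr
feat = acoustic_feature(np.sin(2 * np.pi * 1000 * t), sr)
print(feat.shape)
```

With eight bands this yields 10 × 9 per-second values followed by 4 × 9 statistics. In a pipeline like the one described, such a vector would be concatenated with the image feature before the multi-layer perceptron; a calibrated sound level and any frequency weighting would need to follow the actual recording setup.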
