PredCoin: Defense against Query-based Hard-label Attack


Feb 04, 2021

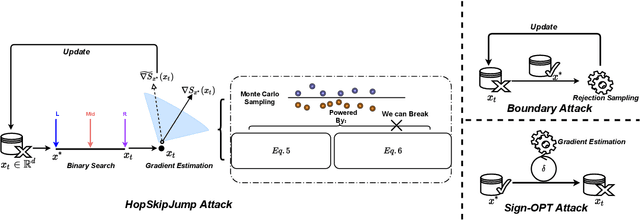

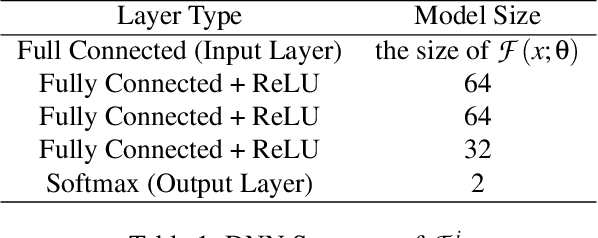

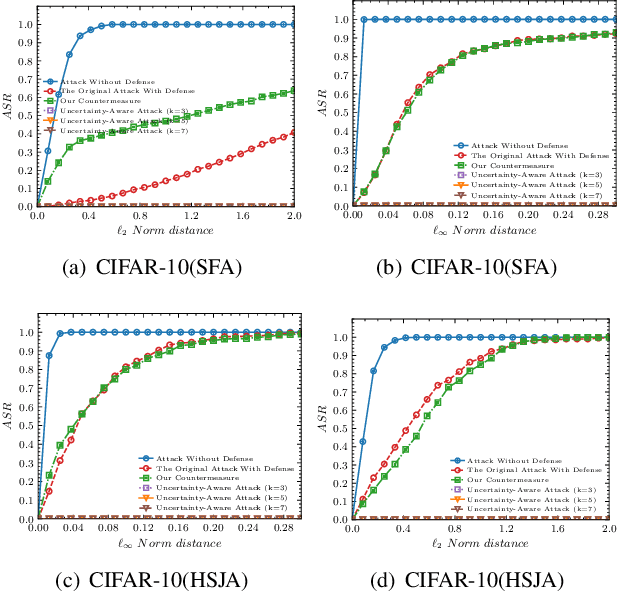

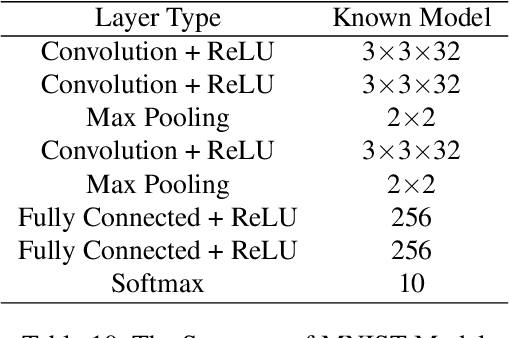

Many adversarial attacks and defenses have recently been proposed for Deep Neural Networks (DNNs). While most of them assume the white-box setting, which is impractical, a new class of query-based hard-label (QBHL) black-box attacks poses a significant threat to real-world applications (e.g., Google Cloud, Tencent API). Until now, no generalizable and practical approach has been proposed to defend against such attacks. This paper proposes and evaluates PredCoin, a practical and generalizable method for providing robustness against QBHL attacks. PredCoin poisons the gradient estimation step, an essential component of most QBHL attacks: it identifies gradient estimation queries crafted by an attacker and introduces uncertainty into the output returned for them. Extensive experiments show that PredCoin successfully defends against four state-of-the-art QBHL attacks across various settings and tasks while preserving the target model's overall accuracy. PredCoin is also shown to be robust and effective against several defense-aware attacks, including attackers that may have full knowledge of PredCoin's internal mechanisms.
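To make the gradient estimation step concrete, the following is a minimal sketch of a Monte Carlo, sign-based gradient estimate of the kind commonly used by hard-label attacks, followed by a toy illustration of how randomized outputs can degrade it. Everything here is an assumption for illustration: the linear `hard_label` oracle, the helper names, and the `make_randomized_oracle` wrapper are hypothetical, and the wrapper flips labels at random for every query rather than detecting gradient estimation queries, so it should not be read as PredCoin's actual mechanism.

```python
import math
import random


def hard_label(x, w, b):
    """Toy hard-label oracle: returns only the predicted class (0 or 1)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else 0


def random_unit_vector(dim, rng):
    """Sample a direction uniformly from the unit sphere."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def estimate_gradient_direction(oracle, x, n_queries, delta, rng):
    """Estimate the boundary normal at x using only hard-label queries.

    Each probe x + delta * u contributes +u if the oracle returns class 1
    and -u otherwise; the normalized sum approximates the normal direction.
    """
    acc = [0.0] * len(x)
    for _ in range(n_queries):
        u = random_unit_vector(len(x), rng)
        probe = [xi + delta * ui for xi, ui in zip(x, u)]
        sign = 1.0 if oracle(probe) == 1 else -1.0
        acc = [a + sign * ui for a, ui in zip(acc, u)]
    norm = math.sqrt(sum(c * c for c in acc))
    return [c / norm for c in acc]


def make_randomized_oracle(oracle, flip_prob, rng):
    """Hypothetical stand-in for an uncertain output: flip the label at random."""
    def randomized(x):
        label = oracle(x)
        return 1 - label if rng.random() < flip_prob else label
    return randomized


def cosine(a, b):
    dot = sum(ai * bi for ai, bi in zip(a, b))
    na = math.sqrt(sum(ai * ai for ai in a))
    nb = math.sqrt(sum(bi * bi for bi in b))
    return dot / (na * nb)


rng = random.Random(0)
w, b = [0.6, -0.8, 0.0, 0.0, 0.0], 0.0   # assumed toy decision boundary
x_boundary = [0.0] * 5                   # lies on the boundary because b == 0


def clean_oracle(x):
    return hard_label(x, w, b)


noisy_oracle = make_randomized_oracle(clean_oracle, flip_prob=0.5, rng=rng)

clean_est = estimate_gradient_direction(clean_oracle, x_boundary, 2000, 0.01, rng)
noisy_est = estimate_gradient_direction(noisy_oracle, x_boundary, 2000, 0.01, rng)

clean_cos = cosine(clean_est, w)
noisy_cos = cosine(noisy_est, w)
print(f"alignment with true normal: clean={clean_cos:.3f}, randomized={noisy_cos:.3f}")
```

In this toy setting the estimate from the clean oracle aligns closely with the true boundary normal, while a flip probability of 0.5 makes the returned labels independent of the probe direction, so the attacker's estimate carries no usable signal. A practical defense would presumably need to confine such randomness to suspected attack queries to preserve accuracy on benign inputs, which is the property the abstract claims for PredCoin.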
