Precipitation Nowcasting Using Diffusion Transformer with Causal Attention

Paper and Code

Oct 17, 2024

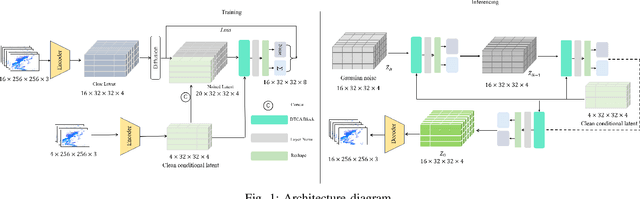

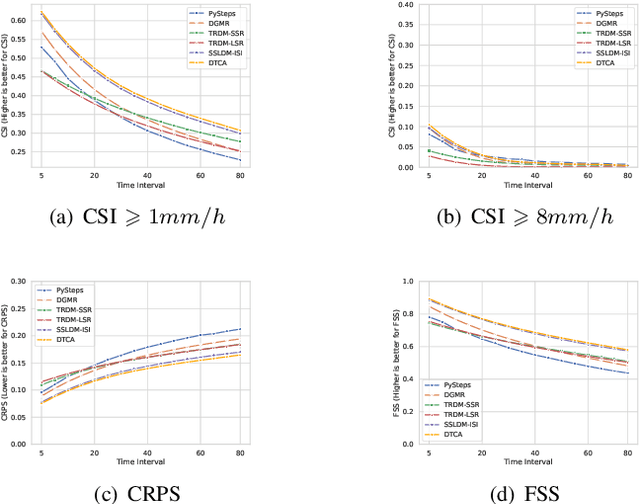

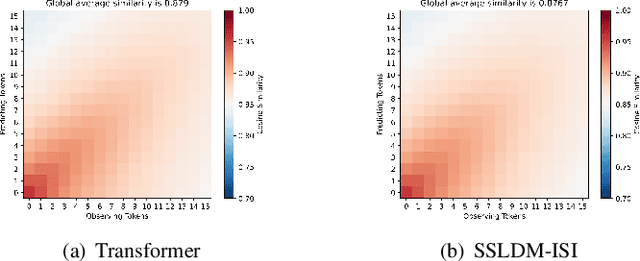

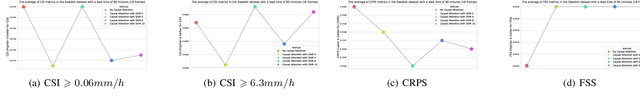

Short-term precipitation forecasting remains challenging because long-term spatiotemporal dependencies are difficult to capture. Current deep learning methods fall short in establishing effective dependencies between conditioning inputs and forecast results, and they also lack interpretability. To address these issues, we propose DTCA, a Diffusion Transformer with Causal Attention for precipitation nowcasting. The model builds on the Transformer and uses causal attention mechanisms to establish spatiotemporal queries between conditional information (causes) and forecast results (effects). This design enables the model to capture long-term dependencies effectively, so that forecast results maintain strong causal relationships with the input conditions over a wide range of time and space. We explore four variants of spatiotemporal information interaction for DTCA and show that global spatiotemporal labeling interactions yield the best performance. In addition, we introduce a Channel-To-Batch shift operation to further enhance the model's ability to represent complex rainfall dynamics. We conducted experiments on two datasets. Compared with state-of-the-art U-Net-based methods, our approach improves the CSI (Critical Success Index) for heavy precipitation by approximately 15% and 8% on the two datasets, respectively, achieving state-of-the-art performance.
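For readers unfamiliar with the metric, the CSI reported above is conventionally defined as hits / (hits + misses + false alarms), computed after thresholding the forecast and observed rainfall fields. The snippet below is a minimal sketch of that standard definition, not the authors' evaluation code. The function name `critical_success_index`, the 30 mm/h threshold, and the sample values are illustrative assumptions.

```python
def critical_success_index(forecast, observed, threshold):
    """CSI = hits / (hits + misses + false_alarms) at a given rain-rate threshold.

    `forecast` and `observed` are equal-length sequences of rain rates
    (e.g. flattened radar pixels). Names and inputs are hypothetical.
    """
    hits = misses = false_alarms = 0
    for f, o in zip(forecast, observed):
        forecast_event = f >= threshold
        observed_event = o >= threshold
        if forecast_event and observed_event:
            hits += 1            # event predicted and observed
        elif observed_event:
            misses += 1          # event observed but not predicted
        elif forecast_event:
            false_alarms += 1    # event predicted but not observed
    total = hits + misses + false_alarms
    # With no predicted or observed events the score is undefined.
    return hits / total if total else float("nan")


# Illustrative values only; the threshold for "heavy" rain is an assumption.
forecast = [0.0, 12.0, 35.0, 40.0, 5.0, 31.0]
observed = [0.0, 10.0, 32.0, 20.0, 33.0, 30.0]
score = critical_success_index(forecast, observed, threshold=30.0)
```

Correct negatives (pixels where neither field exceeds the threshold) do not enter the score, which is why CSI is commonly used for rare events such as heavy precipitation.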
