Practical and Bilateral Privacy-preserving Federated Learning


Feb 25, 2020

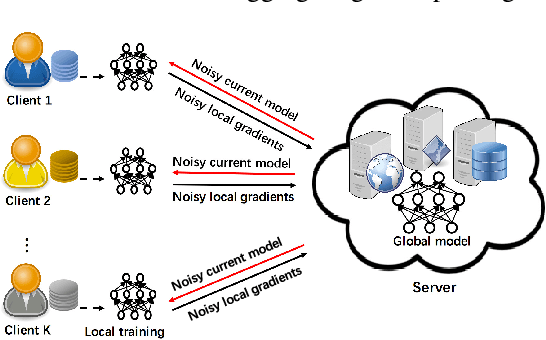

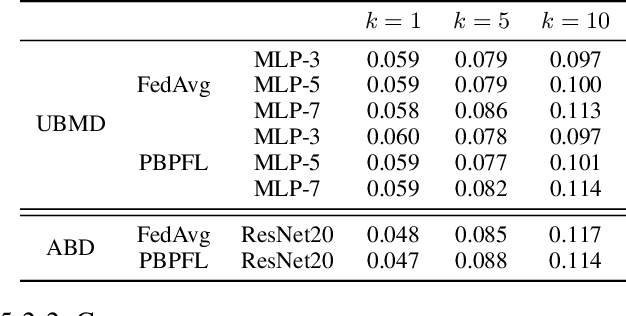

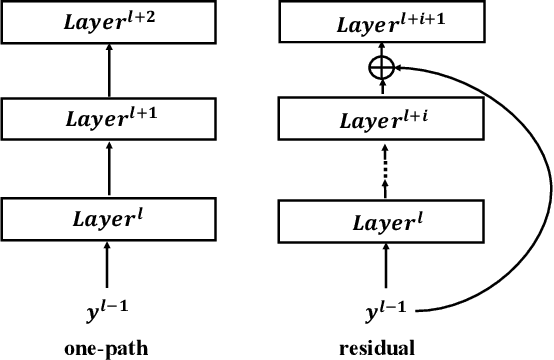

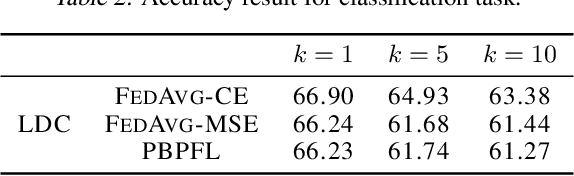

Federated learning, an emerging distributed approach to training neural networks without collecting raw data, has attracted widespread attention. However, almost all existing research on federated learning considers only the privacy of clients and does not prevent model iterates and final model parameters from leaking to untrusted clients and external attackers. In this paper, we present the first bilateral privacy-preserving federated learning scheme, which protects not only the raw training data of clients but also the model iterates during the training phase and the final model parameters. Specifically, we present an efficient privacy-preserving technique to mask or encrypt the global model, which allows clients to train over the noisy global model while ensuring that only the server can obtain the exact updated model. Detailed security analysis shows that clients can access neither the model iterates nor the final global model; meanwhile, the server cannot obtain the raw training data of clients from the additional information used for recovering the exact updated model. Finally, extensive experiments demonstrate that the proposed scheme achieves model accuracy comparable to traditional federated learning without incurring much extra communication overhead.
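The abstract does not spell out the masking construction, so the following is only a toy sketch of the general idea, not the paper's scheme. It assumes a linear model with mean-squared-error loss, additive Gaussian noise as the mask, and the client's aggregate matrix `X^T X / n` as the "additional information"; the names `server_mask`, `client_update`, and `server_recover` are hypothetical. Under these assumptions the gradient at the noisy model `w + r` equals the exact gradient plus `(X^T X / n) r`, so only the server, which knows `r`, can remove the correction. The sketch makes no privacy claim of its own.

```python
import random


def gradient(w, X, y):
    """MSE gradient of a linear model: X^T (X w - y) / n."""
    n, d = len(X), len(w)
    residuals = [sum(X[i][j] * w[j] for j in range(d)) - y[i] for i in range(n)]
    return [sum(X[i][j] * residuals[i] for i in range(n)) / n for j in range(d)]


def gram(X):
    """X^T X / n: the toy stand-in for the 'additional information' (assumption)."""
    n, d = len(X), len(X[0])
    return [[sum(X[i][a] * X[i][b] for i in range(n)) / n for b in range(d)]
            for a in range(d)]


def server_mask(w, rng, scale=1.0):
    """Server hides the global model behind additive noise r and keeps r secret."""
    r = [rng.gauss(0.0, scale) for _ in w]
    return [wi + ri for wi, ri in zip(w, r)], r


def client_update(w_noisy, X, y):
    """Client sees only the noisy model; returns its gradient plus the aggregate."""
    return gradient(w_noisy, X, y), gram(X)


def server_recover(noisy_grad, G, r):
    """grad(w + r) = grad(w) + G r for this model, so subtract G r."""
    d = len(r)
    return [noisy_grad[a] - sum(G[a][b] * r[b] for b in range(d)) for a in range(d)]


rng = random.Random(0)
X = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(20)]  # client data
y = [rng.gauss(0.0, 1.0) for _ in range(20)]
w = [0.5, -1.0, 2.0]  # exact global model, known only to the server

w_noisy, r = server_mask(w, rng)
noisy_grad, G = client_update(w_noisy, X, y)
recovered = server_recover(noisy_grad, G, r)

exact = gradient(w, X, y)  # computed here only to check the recovery
max_err = max(abs(a - b) for a, b in zip(recovered, exact))
print("recovery error:", max_err)
```

The recovery is exact here only because the loss is quadratic, which makes the gradient affine in the weights; a neural network with nonlinear activations would need a different construction, which is the problem the paper addresses.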
