PoisHygiene: Detecting and Mitigating Poisoning Attacks in Neural Networks

Paper and Code

Mar 24, 2020

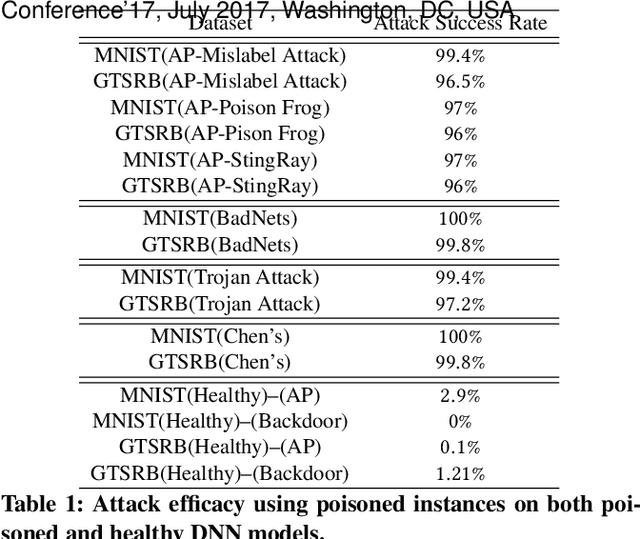

The black-box nature of deep neural networks (DNNs) makes it easier for attackers to manipulate the behavior of a DNN through data poisoning. Being able to detect and mitigate poisoning attacks, typically categorized into backdoor and adversarial poisoning (AP) attacks, is critical to the safe adoption of DNNs in many application domains. Although recent works demonstrate encouraging results in detecting certain backdoor attacks, they exhibit inherent limitations that may significantly constrain their applicability. Indeed, no technique exists for detecting AP attacks, which pose a harder challenge because, unlike backdoor attacks, they follow no common and explicit rule (backdoor attacks, by contrast, embed backdoor triggers into poisoned data). We believe the key to detecting and mitigating AP attacks is the ability to observe and leverage essential poisoning-induced properties within an infected DNN model. In this paper, we present PoisHygiene, the first effective and robust detection and mitigation framework against AP attacks. PoisHygiene is fundamentally motivated by the story of Dr. Ernest Rutherford (the 1908 Nobel Prize winner), who observed the structure of the atom through random electron sampling.
