Plug the Leaks: Advancing Audio-driven Talking Face Generation by Preventing Unintended Information Flow

Paper and Code

Jul 18, 2023

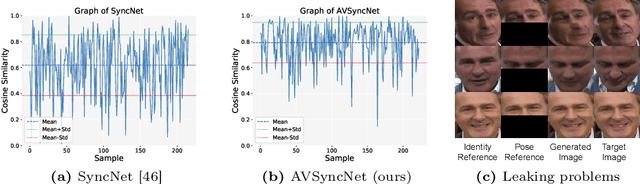

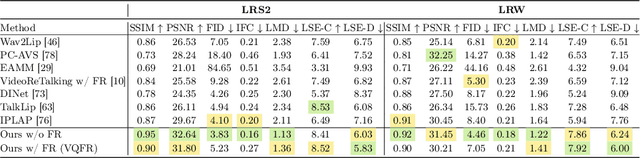

Audio-driven talking face generation is the task of creating a lip-synchronized, realistic face video from given audio and reference frames. This involves two major challenges: overall visual quality of generated images on the one hand, and audio-visual synchronization of the mouth part on the other hand. In this paper, we start by identifying several problematic aspects of synchronization methods in recent audio-driven talking face generation approaches. Specifically, this involves unintended flow of lip and pose information from the reference to the generated image, as well as instabilities during model training. Subsequently, we propose various techniques for obviating these issues: First, a silent-lip reference image generator prevents leaking of lips from the reference to the generated image. Second, an adaptive triplet loss handles the pose leaking problem. Finally, we propose a stabilized formulation of synchronization loss, circumventing aforementioned training instabilities while additionally further alleviating the lip leaking issue. Combining the individual improvements, we present state-of-the art performance on LRS2 and LRW in both synchronization and visual quality. We further validate our design in various ablation experiments, confirming the individual contributions as well as their complementary effects.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge