Playing Text-Based Games with Common Sense

Paper and Code

Dec 04, 2020

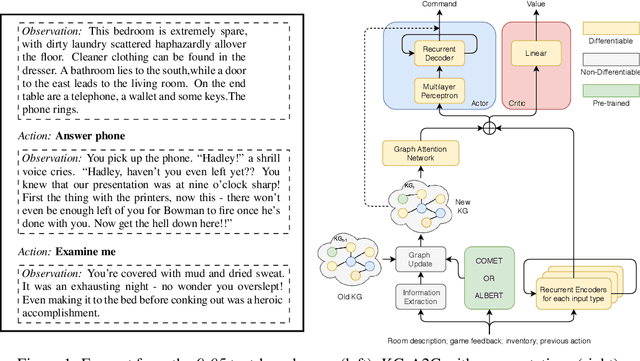

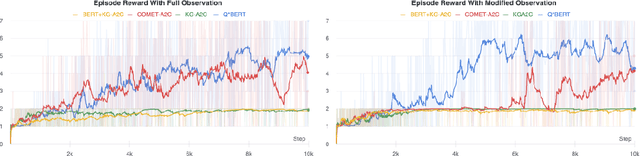

Text-based games are simulations in which an agent interacts with the world purely through natural language. They typically consist of a number of puzzles interspersed with interactions with common everyday objects and locations. Deep reinforcement learning agents can learn to solve these puzzles. However, the everyday interactions with the environment, while trivial for human players, present themselves as additional puzzles to agents. We explore two techniques for incorporating commonsense knowledge into agents: (1) inferring possibly hidden aspects of the world state with either a commonsense inference model, COMET, or a language model, BERT; and (2) biasing an agent's exploration according to common patterns recognized by a language model. We test our techniques in the 9to05 game, which is an extreme version of a text-based game that requires numerous interactions with common, everyday objects in common, everyday scenarios. We conclude that agents that augment their beliefs about the world state with commonsense inferences are more robust to observational errors and omissions of common elements from text descriptions.
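To make the second technique more concrete, the following is a minimal sketch of how exploration might be biased toward plausible actions. It is not the paper's implementation: the epsilon-greedy framing, the softmax weighting, and the names `biased_exploration_probs`, `choose_action`, and `toy_plausibility` are all assumptions made for illustration, and the hard-coded scores stand in for what a language model would provide.

```python
import math
import random


def biased_exploration_probs(actions, plausibility, temperature=1.0):
    """Turn plausibility scores into a sampling distribution via softmax."""
    scores = [plausibility(a) / temperature for a in actions]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def choose_action(actions, q_values, plausibility, epsilon=0.1, rng=random):
    """Epsilon-greedy selection whose exploratory step is biased, not uniform."""
    if rng.random() < epsilon:
        # Explore: sample in proportion to how plausible each action looks.
        probs = biased_exploration_probs(actions, plausibility)
        return rng.choices(actions, weights=probs, k=1)[0]
    # Exploit: take the action with the highest estimated value.
    best = max(range(len(actions)), key=lambda i: q_values[i])
    return actions[best]


def toy_plausibility(action):
    """Hypothetical stand-in for a language-model score (assumed values)."""
    scores = {"open door": 2.0, "take shower": 1.5, "eat door": -3.0}
    return scores.get(action, 0.0)
```

With this scheme an exploring agent still tries every action occasionally, but spends most of its exploratory steps on actions that match everyday patterns, such as opening a door rather than eating it.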
