PIVODL: Privacy-preserving vertical federated learning over distributed labels

Paper and Code

Aug 25, 2021

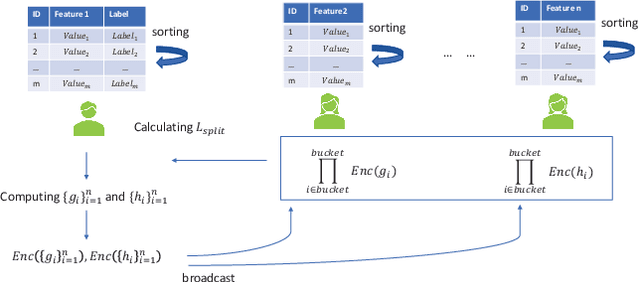

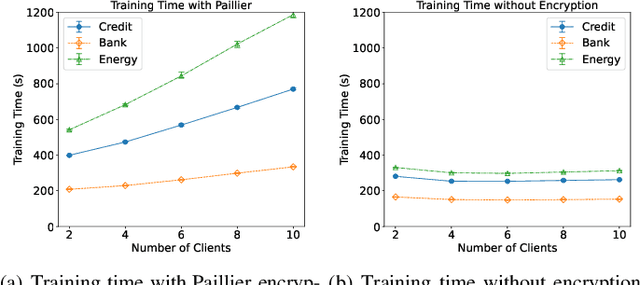

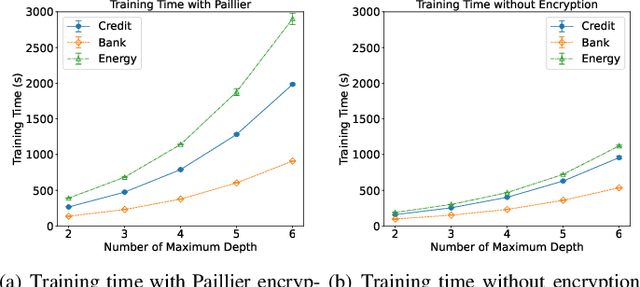

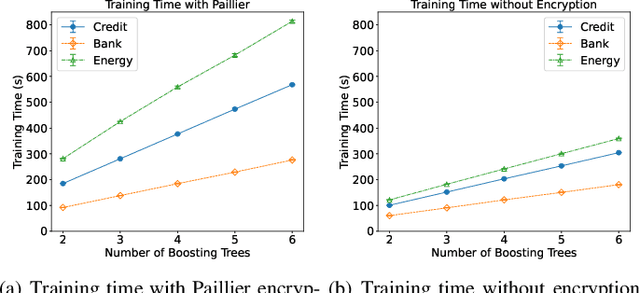

Federated learning (FL) is an emerging privacy-preserving machine learning protocol that allows multiple devices to collaboratively train a shared global model without revealing their private local data. Non-parametric models such as gradient boosting decision trees (GBDT) are commonly used in FL for vertically partitioned data. However, existing studies assume that all data labels are stored on a single client, which may be unrealistic in real-world applications. In this work, we therefore propose a secure vertical FL framework, named PIVODL, to train GBDT with data labels distributed across multiple devices. Both homomorphic encryption and differential privacy are adopted to prevent label information from being leaked through transmitted gradients and leaf values. Our experimental results show that both the information leakage and the model performance degradation of the proposed PIVODL are negligible.
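To make the role of differential privacy on leaf values more concrete, here is a minimal Python sketch rather than the PIVODL protocol itself. It assumes an XGBoost-style leaf weight, a clipping bound, and the Laplace mechanism; none of these details are stated in the abstract. The function names and the parameters `epsilon`, `clip`, and `reg_lambda` are hypothetical, and the homomorphic encryption step is left out.

```python
import random


def leaf_weight(gradients, hessians, reg_lambda=1.0):
    """XGBoost-style leaf weight -G / (H + lambda); assumed form, not from the abstract."""
    g_sum = sum(gradients)
    h_sum = sum(hessians)
    return -g_sum / (h_sum + reg_lambda)


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_leaf_weight(gradients, hessians, epsilon,
                        clip=1.0, reg_lambda=1.0, rng=None):
    """Clip the leaf weight to [-clip, clip], then add Laplace noise.

    The clipped value can change by at most 2 * clip when one sample changes,
    so 2 * clip is used as a conservative sensitivity bound (an assumption).
    """
    rng = rng or random.Random()
    weight = leaf_weight(gradients, hessians, reg_lambda)
    weight = max(-clip, min(clip, weight))
    scale = (2.0 * clip) / epsilon
    return weight + laplace_noise(scale, rng)


if __name__ == "__main__":
    # Hypothetical per-sample gradients and Hessians that fall into one leaf.
    grads = [0.5, -0.2]
    hess = [0.25, 0.25]
    print("exact leaf weight:", leaf_weight(grads, hess))
    print("noisy leaf weight:", private_leaf_weight(grads, hess, epsilon=1.0))
```

A smaller `epsilon` gives a larger noise scale, which hides more about the labels behind a leaf value but perturbs the model's predictions more. This is the trade-off the abstract reports as negligible in the authors' experiments.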
