Phonemic and Graphemic Multilingual CTC Based Speech Recognition

Nov 13, 2017

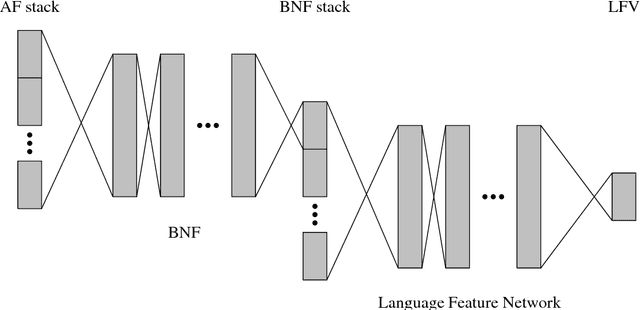

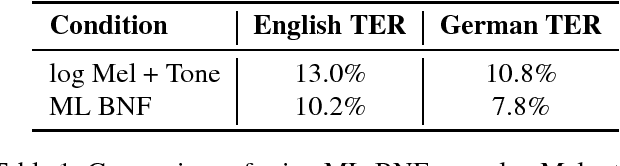

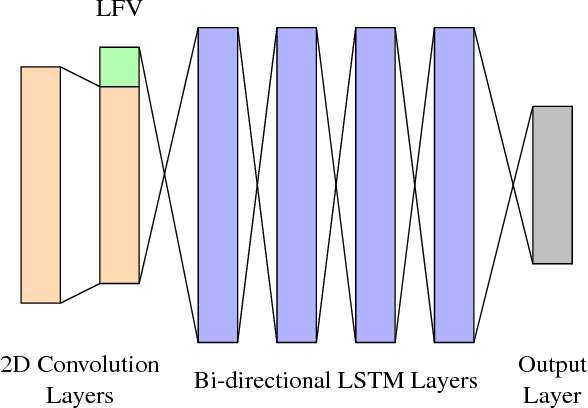

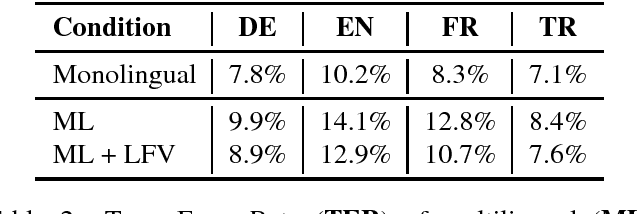

Training automatic speech recognition (ASR) systems requires large amounts of data in the target language to achieve good performance. Whereas large training corpora are readily available for languages like English, there is a long tail of languages that suffer from a lack of resources. One way to handle data sparsity is to use data from additional source languages and build a multilingual system. Recently, ASR systems based on recurrent neural networks (RNNs) trained with connectionist temporal classification (CTC) have gained substantial research interest. In this work, we extended our previous approach to training CTC-based systems multilingually. Our systems feature a global phone set, formed by joining the phone sets of the individual source languages. We evaluated different language combinations as well as the addition of Language Feature Vectors (LFVs). As a contrastive experiment, we also built systems based on graphemes. Systems with a multilingual phone set are known to perform worse than their monolingual counterparts. With our proposed approach, we were able to reduce the gap between these mono- and multilingual setups, using either graphemes or phonemes.
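To make the idea of a global phone set concrete, here is a minimal Python sketch, not the authors' implementation. It assumes the global set is the plain union of the per-language inventories, with one extra index reserved for the CTC blank label. The function name `build_global_phone_set`, the toy inventories, and the choice of index 0 for the blank are all hypothetical.

```python
def build_global_phone_set(phone_sets):
    """Merge per-language phone inventories into one shared label map.

    Assumption: the global set is the union of all inventories; a phone
    shared by several languages gets a single output label.
    """
    merged = sorted(set().union(*phone_sets.values()))
    # Assumption: index 0 is reserved for the CTC blank label.
    label_map = {"<blank>": 0}
    for index, phone in enumerate(merged, start=1):
        label_map[phone] = index
    return label_map


# Hypothetical toy inventories, for illustration only.
phone_sets = {
    "en": {"a", "b", "t", "th"},
    "de": {"a", "b", "t", "ch"},
}

global_phones = build_global_phone_set(phone_sets)
print(global_phones)
```

Under this assumption, the output layer of a multilingual CTC network has one unit per entry in `global_phones`, so phones common to several languages share a single unit. The same construction applies to graphemes by passing character inventories instead of phone inventories.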
