PFGE: Parsimonious Fast Geometric Ensembling of DNNs


Feb 23, 2022

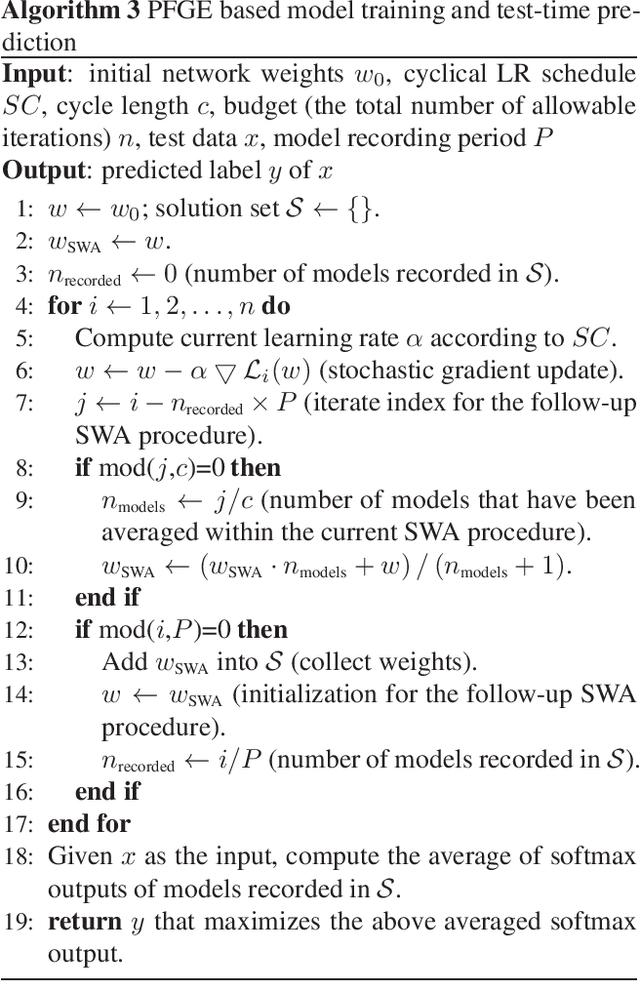

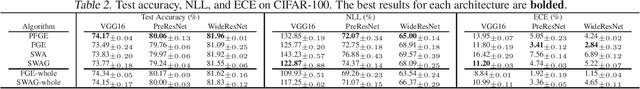

Ensemble methods are widely used to improve the generalization and uncertainty calibration of machine learning models, but they are difficult to apply in deep learning systems: training an ensemble of deep neural networks (DNNs) and then deploying it for online prediction incurs a much higher computational overhead, in both model training and test-time prediction, than a single model. Recently, several advanced techniques, such as fast geometric ensembling (FGE) and snapshot ensembles, have been proposed. These methods can train a model ensemble in the same time as a single model, thus removing the training-time hurdle. However, their overhead for model recording and test-time computation remains much higher than that of their single-model counterparts. Here we propose a parsimonious FGE (PFGE) that employs a lightweight ensemble of higher-performing DNNs generated by several successively performed procedures of stochastic weight averaging. Experimental results across different advanced DNN architectures on different datasets, namely CIFAR-$\{10, 100\}$ and ImageNet, demonstrate its performance. The results show that, compared with state-of-the-art methods, PFGE achieves better generalization performance and satisfactory calibration capability, while significantly reducing the overhead of model recording and test-time prediction.
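The two building blocks named in the abstract, stochastic weight averaging and a small ensemble of the averaged models, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`swa_update`, `average_weights`, `ensemble_probs`) are hypothetical, weights are represented as flat lists of floats rather than network parameters, and combining members by averaging their predicted class probabilities is an assumption, since the abstract does not state how member predictions are combined.

```python
from typing import List, Sequence


def swa_update(avg: List[float], new: Sequence[float], n_averaged: int) -> List[float]:
    """Running mean of weight vectors: avg <- (avg * n + new) / (n + 1)."""
    return [(a * n_averaged + w) / (n_averaged + 1) for a, w in zip(avg, new)]


def average_weights(snapshots: Sequence[Sequence[float]]) -> List[float]:
    """Average a sequence of weight snapshots (one weight-averaging procedure)."""
    avg = list(snapshots[0])
    for n, snap in enumerate(snapshots[1:], start=1):
        avg = swa_update(avg, snap, n)
    return avg


def ensemble_probs(member_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average class probabilities over ensemble members.

    Assumption: members are combined by a plain mean of their probabilities.
    """
    k = len(member_probs)
    return [sum(col) / k for col in zip(*member_probs)]


# Hypothetical usage: two successive averaging procedures yield two members.
member_a = average_weights([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
member_b = average_weights([[2.0, 2.0], [4.0, 6.0]])

# Hypothetical per-member class probabilities for a single input.
combined = ensemble_probs([[0.8, 0.2], [0.6, 0.4]])
```

In this sketch each averaging procedure collapses several snapshots into a single stored model, so only one set of weights per procedure has to be recorded and evaluated at test time, which is the kind of saving the abstract describes.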
