PeSOTIF: a Challenging Visual Dataset for Perception SOTIF Problems in Long-tail Traffic Scenarios


Nov 07, 2022

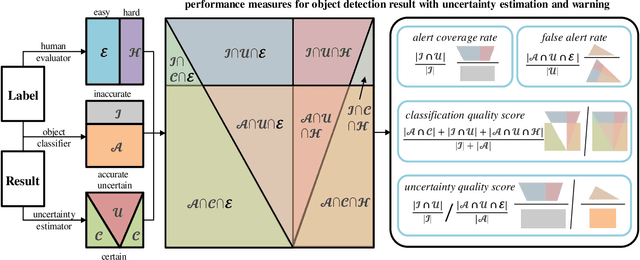

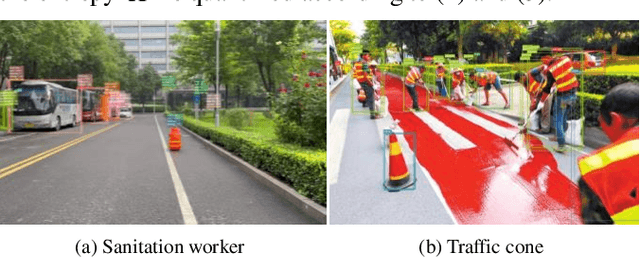

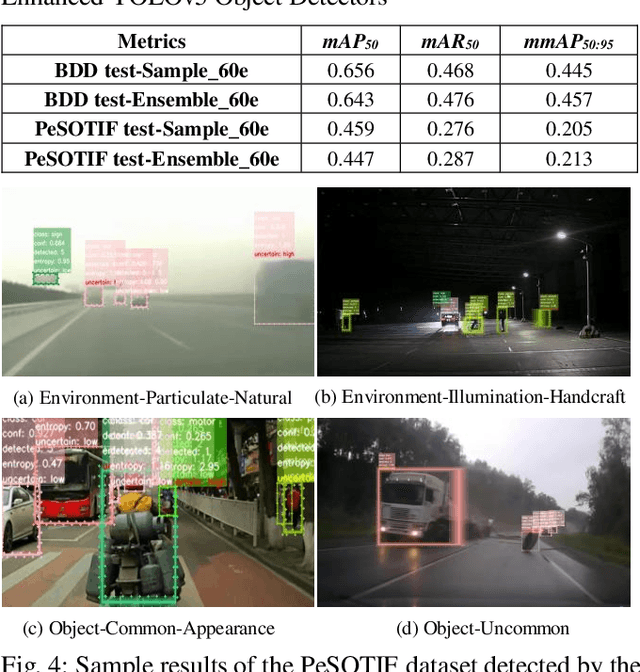

Perception algorithms in autonomous driving systems face significant challenges in long-tail traffic scenarios, where Safety of the Intended Functionality (SOTIF) problems can be triggered by performance insufficiencies of the algorithm and by the dynamic operational environment. However, such scenarios are not systematically included in current open-source datasets, and this paper fills that gap. Based on an analysis and enumeration of trigger conditions, a high-quality, diverse dataset is released that includes various long-tail traffic scenarios collected from multiple sources. In view of the development of probabilistic object detection (POD), the dataset marks the trigger sources that may cause perception SOTIF problems in each scenario as key objects. In addition, an evaluation protocol is suggested to verify how effectively POD algorithms identify the key objects via uncertainty. The dataset is continuously expanded, and the first batch of open-source data includes 1126 frames with an average of 2.27 key objects and 2.47 normal objects per frame. To demonstrate how to use this dataset for SOTIF research, the paper further quantifies the perception SOTIF entropy to determine whether a scenario is unknown and unsafe for a perception system. The experimental results show that the quantified entropy can effectively and efficiently reflect the failure of the perception algorithm.
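The abstract does not give the formula for the perception SOTIF entropy, so the following is only a minimal sketch of the general idea of using entropy as an uncertainty signal for a detected object. It assumes that several stochastic forward passes (or ensemble members) each produce a class-probability vector for the same object, averages them, and computes the Shannon entropy of the result. The function names, the averaging step, and the threshold value are all hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete distribution.

    The input is renormalised so that it sums to 1; zero entries are skipped.
    """
    total = sum(probs)
    if total <= 0:
        raise ValueError("probabilities must have a positive sum")
    return -sum((p / total) * math.log(p / total) for p in probs if p > 0)


def mean_distribution(samples: Sequence[Sequence[float]]) -> list:
    """Average several class-probability vectors element-wise."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def is_high_uncertainty(samples: Sequence[Sequence[float]],
                        threshold: float = 0.5) -> bool:
    """Flag an object whose averaged prediction has entropy above a threshold.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    return shannon_entropy(mean_distribution(samples)) > threshold


if __name__ == "__main__":
    # Two passes that disagree about the class of the same object.
    disagreeing = [[0.9, 0.1], [0.1, 0.9]]
    # Two passes that agree with high confidence.
    agreeing = [[0.99, 0.01], [0.98, 0.02]]
    print(is_high_uncertainty(disagreeing))
    print(is_high_uncertainty(agreeing))
```

In this sketch, passes that disagree about an object yield a flat averaged distribution and therefore a high entropy, which is the kind of signal an uncertainty-based evaluation could use to flag key objects.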
