Person Re-identification via Attention Pyramid

Paper and Code

Aug 11, 2021

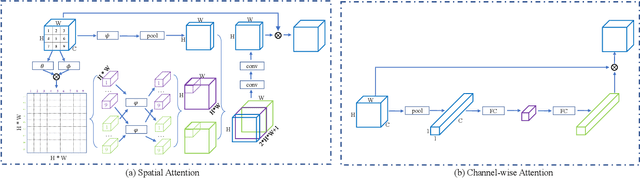

In this paper, we propose an attention pyramid method for person re-identification. Unlike conventional attention-based methods, which learn only a global attention map, our attention pyramid exploits attention regions in a multi-scale manner, because human attention varies across scales. The attention pyramid imitates the process of human visual perception, which tends to notice the foreground person against the cluttered background first and then, on closer observation, to focus on details such as the specific color of the shirt. Specifically, we describe our attention pyramid by a "split-attend-merge-stack" principle. We first split the features into multiple local parts and learn the corresponding attentions. Then, we merge the local attentions and stack the merged attentions with a residual connection to form an attention pyramid. The proposed attention pyramid is a lightweight plug-and-play module that can be applied to off-the-shelf models. We implement our attention pyramid method with two different attention mechanisms: channel-wise attention and spatial attention. We evaluate our method on four large-scale person re-identification benchmarks: Market-1501, DukeMTMC, CUHK03, and MSMT17. Experimental results demonstrate the superiority of our method, which outperforms state-of-the-art methods by a large margin at limited computational cost.
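The "split-attend-merge-stack" principle can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the choice to split along the height axis, the coarse-to-fine schedule `(4, 2, 1)`, and the parameter-free sigmoid gate used in place of learned attention layers are all assumptions made only to show the data flow.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(part):
    """Toy channel-wise gate for one local part of shape (C, H, W).

    Assumption: the gate is a sigmoid over globally average-pooled
    features, with no learned weights. A real module would learn it.
    """
    pooled = part.mean(axis=(1, 2), keepdims=True)  # shape (C, 1, 1)
    return sigmoid(pooled)


def pyramid_level(features, num_parts):
    """One pyramid level: split, attend, merge, then apply a residual."""
    # Split: cut the feature map into horizontal stripes (assumed axis).
    parts = np.array_split(features, num_parts, axis=1)
    # Attend: compute one attention map per local part.
    attns = [np.broadcast_to(channel_attention(p), p.shape) for p in parts]
    # Merge: reassemble the local attentions into a full-size map.
    merged = np.concatenate(attns, axis=1)
    # Residual connection: attended features plus the input.
    return features * merged + features


def attention_pyramid(features, parts_per_level=(4, 2, 1)):
    """Stack several levels, from many small parts to one global part."""
    out = features
    for num_parts in parts_per_level:
        out = pyramid_level(out, num_parts)
    return out


if __name__ == "__main__":
    x = np.random.default_rng(0).random((8, 16, 4))  # (C, H, W)
    y = attention_pyramid(x)
    print(y.shape)
```

Because each level preserves the input shape, a module of this kind can be placed between existing layers of a backbone without changing the surrounding architecture, which is consistent with the plug-and-play property described above.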
