Performing Co-Membership Attacks Against Deep Generative Models


Oct 04, 2018

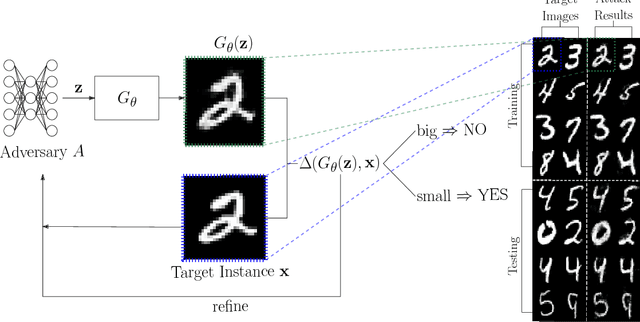

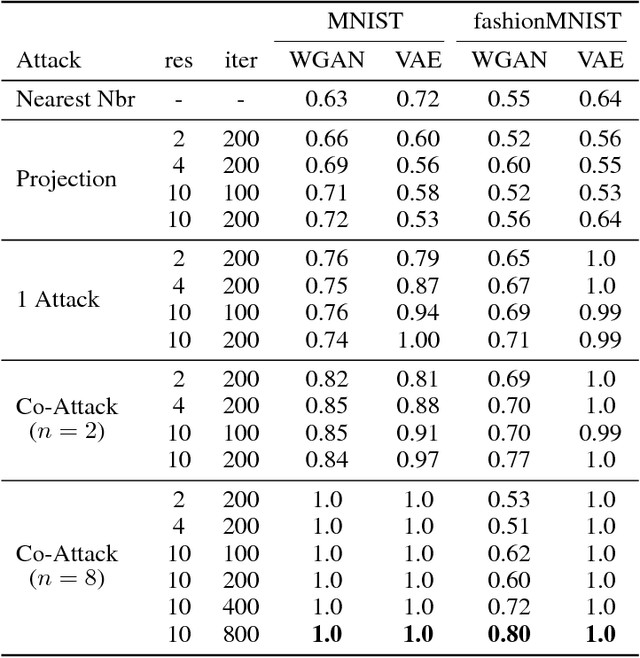

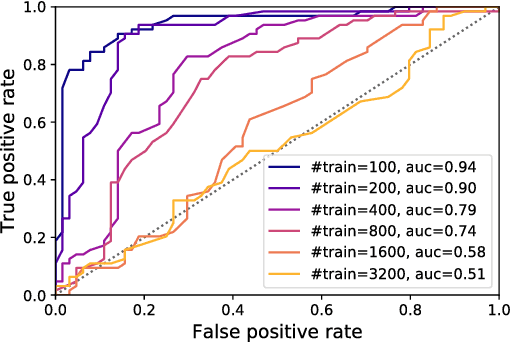

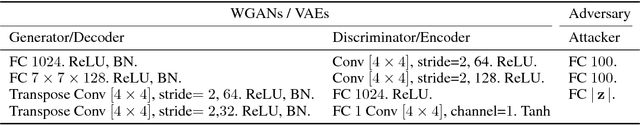

In this paper we propose new membership attacks and new attack methods against deep generative models, including Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). A membership attack checks whether a given instance x was used in the training data. A co-membership attack checks whether a given bundle of n instances was used in training, with the prior knowledge that the bundle was either used in its entirety or not at all. Successful membership attacks can compromise the privacy of the training data when the generative model is published. Our main idea is to cast membership inference of a target instance x as the optimization of another neural network (called the attacker network) that searches for the seed that reproduces x. The final reconstruction error is then used directly to decide whether x is in the training data. Experiments on a variety of data sets and a suite of training parameters show that our attacker network can be more successful than prior membership attacks, that co-membership attacks can be more powerful than single attacks, and that VAEs are in general more susceptible to membership attacks than GANs. We also discuss how membership attacks relate to model generalization, overfitting, and the diversity of the model.
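To make the "search for a seed, then threshold the reconstruction error" idea concrete, here is a toy sketch in Python. It is not the authors' implementation. It assumes a hypothetical fixed linear "generator" G(z) = W z and a linear attacker network A(x) = V x trained by plain gradient descent, whereas the paper works with deep VAEs/GANs and a neural attacker network. The names `generate`, `attack`, `is_member`, the matrix `W`, and the `threshold` value are all illustrative assumptions. A single membership attack is treated as a bundle of size one. For a co-membership attack, one shared attacker is fitted to the whole bundle and the mean reconstruction error is compared with the threshold; this is our reading of the bundle setting.

```python
# Toy sketch of a (co-)membership attack via an attacker network.
# ASSUMPTIONS (hypothetical, not from the paper): the "generator" is a fixed
# linear map G(z) = W z and the attacker network is a linear map A(x) = V x.

W = [[1.0, 0.0],
     [0.0, 1.0],
     [0.5, 0.5]]  # hypothetical 3x2 generator weights: 2-D seed -> 3-D sample


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def generate(z):
    """Toy generator G(z) = W z."""
    return matvec(W, z)


def attack(bundle, steps=2000, lr=0.05):
    """Train one attacker A(x) = V x so that G(A(x)) reproduces every x in
    `bundle`, then return the mean squared reconstruction error."""
    n = len(bundle)
    V = [[0.0] * 3 for _ in range(2)]  # 2x3 attacker weights, start at zero
    for _ in range(steps):
        grad = [[0.0] * 3 for _ in range(2)]
        for x in bundle:
            z = matvec(V, x)  # seed proposed by the attacker network
            r = [g - xi for g, xi in zip(generate(z), x)]  # residual G(A(x)) - x
            wtr = [sum(W[k][j] * r[k] for k in range(3)) for j in range(2)]  # W^T r
            for j in range(2):
                for i in range(3):
                    # d/dV of mean ||W V x - x||^2 is (2/n) * sum(W^T r x^T)
                    grad[j][i] += 2.0 * wtr[j] * x[i] / n
        for j in range(2):
            for i in range(3):
                V[j][i] -= lr * grad[j][i]
    errors = []
    for x in bundle:
        recon = generate(matvec(V, x))
        errors.append(sum((a - b) ** 2 for a, b in zip(recon, x)))
    return sum(errors) / n


def is_member(bundle, threshold=1e-3):
    """Declare the whole bundle 'in the training data' if the shared attacker
    reproduces it with mean error below a (hypothetical) threshold."""
    return attack(bundle) < threshold


# Points produced by G can be reproduced exactly; arbitrary points cannot.
members = [generate([1.0, -0.5]), generate([0.3, 0.8])]
outsiders = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.5]]
print(is_member(members[:1]), is_member(members))  # True True
print(is_member(outsiders))                         # False
```

In this toy, points off the generator's range can never be reproduced exactly, so their error stays bounded away from zero. In a real generative model both members and non-members are only approximately reproduced, so choosing the threshold is the hard part in practice.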
