Performance Prediction Under Dataset Shift

Paper and Code

Jun 21, 2022

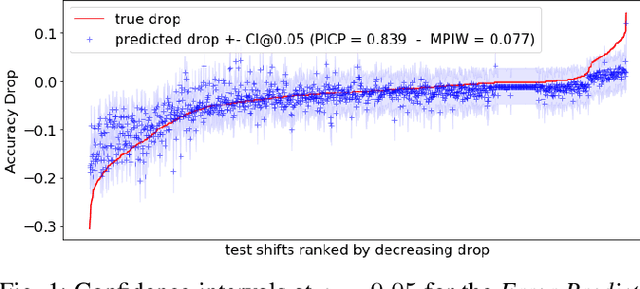

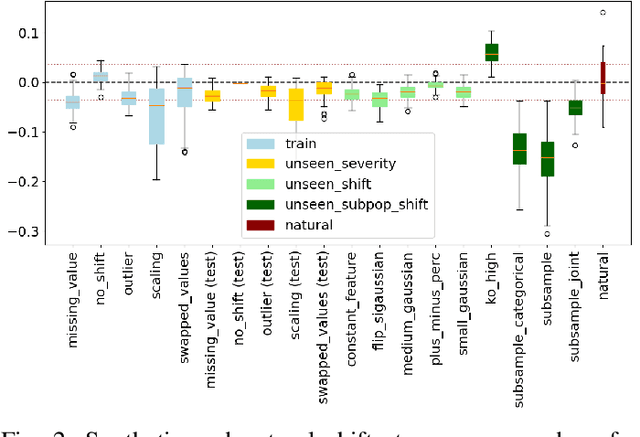

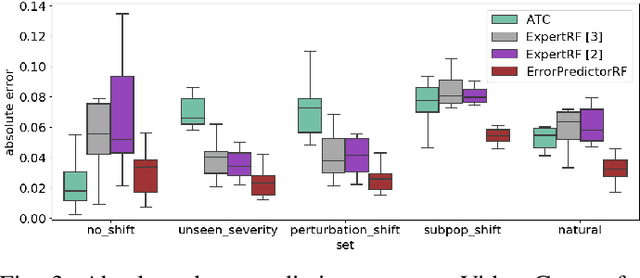

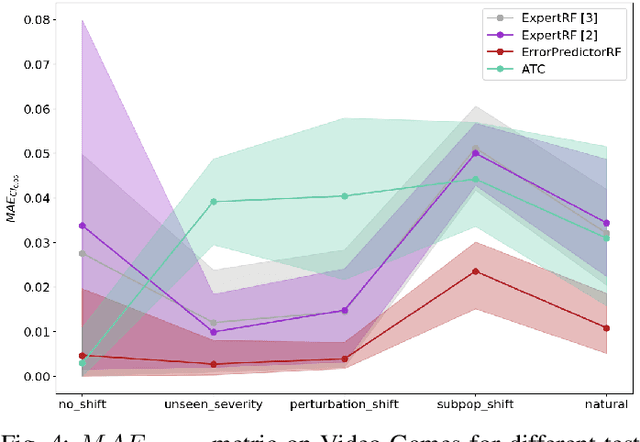

ML models deployed in production often have to face unknown domain changes, fundamentally different from their training settings. Performance prediction models carry out the crucial task of measuring the impact of these changes on model performance. We study the generalization capabilities of various performance prediction models to new domains by learning on generated synthetic perturbations. Empirical validation on a benchmark of ten tabular datasets shows that models based upon state-of-the-art shift detection metrics are not expressive enough to generalize to unseen domains, while Error Predictors bring a consistent improvement in performance prediction under shift. We additionally propose a natural and effortless uncertainty estimation of the predicted accuracy that ensures reliable use of performance predictors. Our implementation is available at https: //github.com/dataiku-research/performance_prediction_under_shift.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge