Perceptual Adversarial Robustness: Defense Against Unseen Threat Models

Paper and Code

Jun 22, 2020

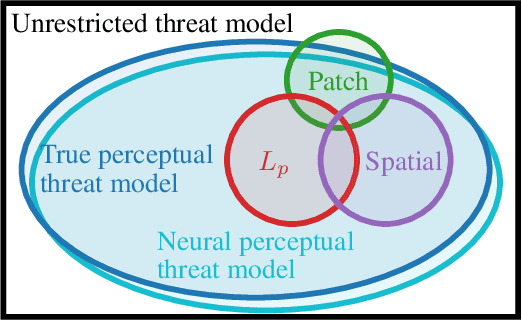

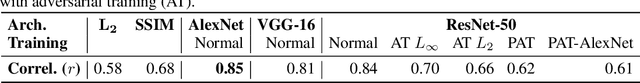

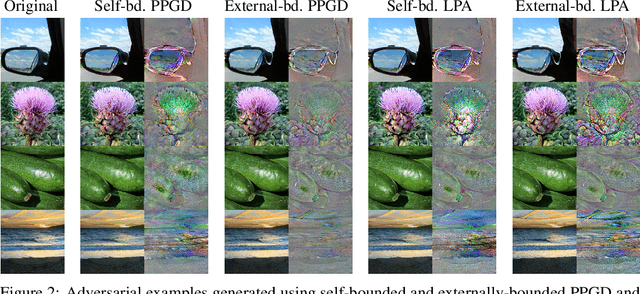

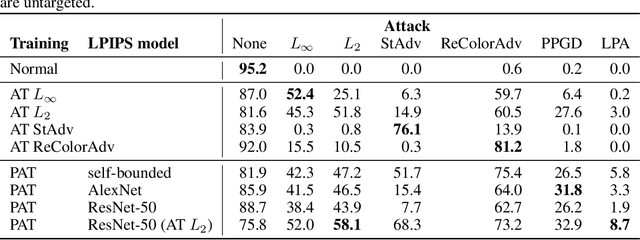

We present adversarial attacks and defenses for the perceptual adversarial threat model: the set of all perturbations to natural images that can mislead a classifier but are imperceptible to human eyes. The perceptual threat model is broad and encompasses $L_2$, $L_\infty$, spatial, and many other existing adversarial threat models. However, it is difficult to determine whether an arbitrary perturbation is imperceptible without humans in the loop. To solve this issue, we propose to use a *neural perceptual distance*, an approximation of the true perceptual distance between images computed from the internal activations of neural networks. In particular, we use the Learned Perceptual Image Patch Similarity (LPIPS) distance. We then propose the *neural perceptual threat model*, which includes adversarial examples with a bounded neural perceptual distance to natural images. Under the neural perceptual threat model, we develop two novel perceptual adversarial attacks that find imperceptible perturbations to images which can fool a classifier. Through an extensive perceptual study, we show that the LPIPS distance correlates well with human judgements of the perceptibility of adversarial examples, validating our threat model. Because the LPIPS threat model is very broad, we find that Perceptual Adversarial Training (PAT) against a perceptual attack gives robustness against many other types of adversarial attacks. We test PAT on CIFAR-10 and ImageNet-100 against 12 types of adversarial attacks and find that, for each attack, PAT achieves close to the accuracy of adversarial training against just that perturbation type. That is, PAT generalizes well to unforeseen perturbation types. This is vital in sensitive applications where a particular threat model cannot be assumed, and to the best of our knowledge, PAT is the first adversarial defense with this property.
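To make the idea of a neural perceptual distance concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes a toy, hypothetical feature extractor (`toy_features`, a small stack of ReLU layers with caller-supplied weights) in place of a real network, and it normalizes each layer's activations as a single vector, whereas LPIPS normalizes across channels at each spatial location and applies learned weights. The names `neural_perceptual_distance`, `in_threat_model`, and `epsilon` are illustrative assumptions.

```python
import math
import random


def toy_features(image, weights):
    """Hypothetical stand-in for a network's internal activations.

    image: flat list of floats. weights: list of layers, each a list of rows.
    Returns the ReLU activations of every layer.
    """
    activations, hidden = [], image
    for layer in weights:
        hidden = [max(0.0, sum(w * v for w, v in zip(row, hidden))) for row in layer]
        activations.append(hidden)
    return activations


def normalize(vec, eps=1e-10):
    """Scale a vector to (approximately) unit L2 norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def neural_perceptual_distance(x1, x2, weights):
    """L2 distance between the normalized activations of two images."""
    total = 0.0
    for a1, a2 in zip(toy_features(x1, weights), toy_features(x2, weights)):
        n1, n2 = normalize(a1), normalize(a2)
        total += sum((u - v) ** 2 for u, v in zip(n1, n2))
    return math.sqrt(total)


def in_threat_model(x, x_adv, weights, epsilon):
    """True if x_adv lies within perceptual distance epsilon of x."""
    return neural_perceptual_distance(x, x_adv, weights) <= epsilon


# Usage with random toy weights (assumed sizes: 8 inputs -> 6 -> 4 units).
rng = random.Random(0)
weights = [
    [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(6)],
    [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(4)],
]
x = [rng.random() for _ in range(8)]
x_adv = [v + rng.uniform(-0.01, 0.01) for v in x]
print(neural_perceptual_distance(x, x_adv, weights))
```

Under this sketch, an adversarial example is admissible when `in_threat_model` returns `True` for the chosen bound `epsilon`. Unlike an $L_2$ or $L_\infty$ bound on pixels, the constraint is placed on the change in internal activations.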
