Peepers & Pixels: Human Recognition Accuracy on Low Resolution Faces

Mar 25, 2025

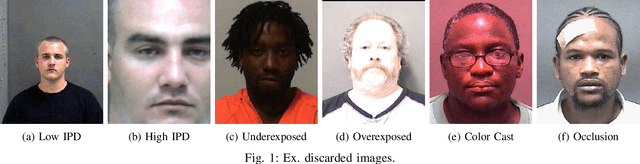

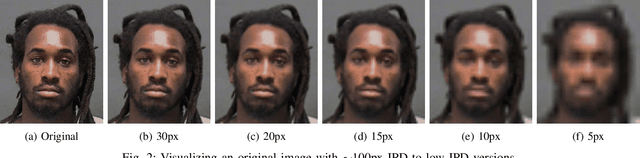

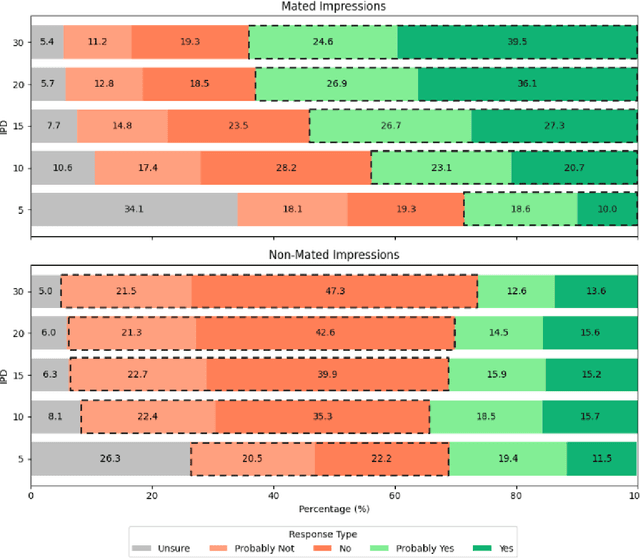

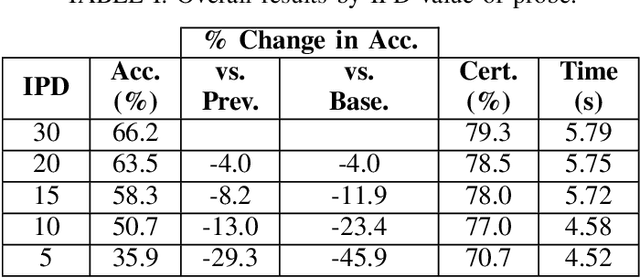

Automated one-to-many (1:N) face recognition is a powerful investigative tool commonly used by law enforcement agencies. In this context, human examiners review the potential matches returned by automated 1:N recognition before those matches are used as investigative leads. While automated 1:N recognition can achieve near-perfect accuracy under ideal imaging conditions, operational scenarios may necessitate the use of surveillance imagery, which is often degraded along several quality dimensions. One important quality dimension is image resolution, typically quantified by the number of pixels on the face. The common metric for this is inter-pupillary distance (IPD), the number of pixels between the pupils. Low IPD is known to degrade the accuracy of automated face recognition. However, the IPD threshold below which human face recognition becomes unreliable remains undefined. This study aims to explore the boundaries of human recognition accuracy by systematically testing accuracy across a range of IPD values. We find that at low IPDs (10px and 5px), human accuracy is at or below chance levels (50.7% and 35.9%, respectively), even as confidence in decision-making remains relatively high (77% and 70.7%, respectively). Our findings indicate that, for low-IPD images, human recognition ability could be a limiting factor on overall system accuracy.
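To make the IPD metric concrete, the following is a minimal sketch of how IPD could be computed from two pupil coordinates, and how a resize factor could be derived to bring an image to a target IPD such as 10px or 5px. The function names, the example coordinates, and the idea of uniform rescaling are illustrative assumptions; the abstract does not describe how the study's low-IPD images were actually produced.

```python
import math


def inter_pupillary_distance(left_pupil, right_pupil):
    """Euclidean distance in pixels between two (x, y) pupil centers."""
    (x1, y1), (x2, y2) = left_pupil, right_pupil
    return math.hypot(x2 - x1, y2 - y1)


def scale_for_target_ipd(current_ipd, target_ipd):
    """Uniform resize factor that maps current_ipd to target_ipd.

    Assumption: the image is rescaled uniformly, so IPD scales linearly
    with image width and height.
    """
    if current_ipd <= 0 or target_ipd <= 0:
        raise ValueError("IPD values must be positive")
    return target_ipd / current_ipd


def resized_dimensions(width, height, scale):
    """Image size after applying the scale factor, at least 1px per side."""
    return max(1, round(width * scale)), max(1, round(height * scale))


# Hypothetical pupil coordinates in a 600x480 image.
ipd = inter_pupillary_distance((270, 200), (330, 200))
scale = scale_for_target_ipd(ipd, target_ipd=10)
new_size = resized_dimensions(600, 480, scale)
```

In this hypothetical case the pupils are 60px apart, so reaching a 10px IPD means shrinking the image to one sixth of its original width and height.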
