PDE-based Group Equivariant Convolutional Neural Networks


Jan 24, 2020

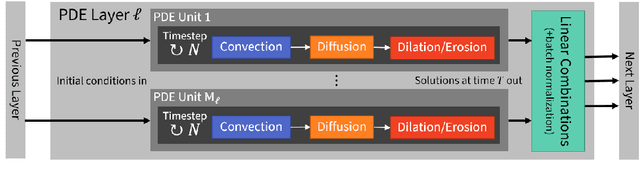

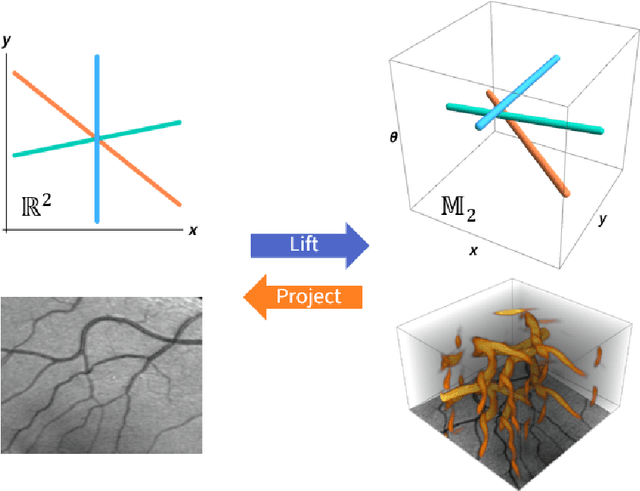

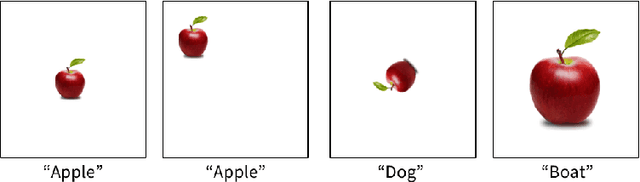

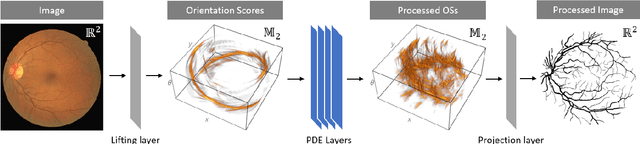

We present a PDE-based framework that generalizes Group equivariant Convolutional Neural Networks (G-CNNs). In this framework, a network layer is seen as a set of PDE-solvers in which the equation's geometrically meaningful coefficients become the layer's trainable weights. Formulating our PDEs on homogeneous spaces allows these networks to be designed with built-in symmetries such as rotation equivariance, instead of being restricted to translation equivariance alone as in traditional CNNs. Including all the desired symmetries in the design obviates the need to impose them through costly techniques such as data augmentation. Roto-translation equivariance for image analysis applications is the example we use throughout the paper. Our default PDE is solved by a combination of linear group convolutions and non-linear morphological group convolutions. Just as for a linear convolution, a morphological convolution is specified by a kernel, and this kernel is what is optimized during training. We demonstrate how the common CNN operations of max/min-pooling and ReLUs arise naturally from solving a PDE and how they are subsumed by morphological convolutions. We present a proof-of-concept experiment to demonstrate the potential of this framework for improving the performance of deep-learning-based imaging applications.
