Parzen Filters for Spectral Decomposition of Signals

Jun 23, 2019

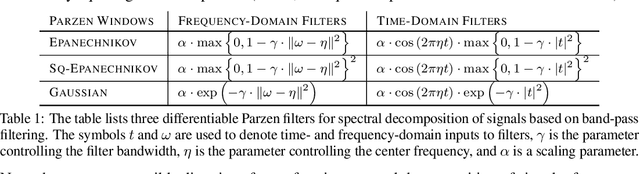

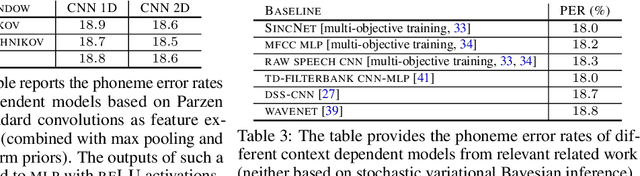

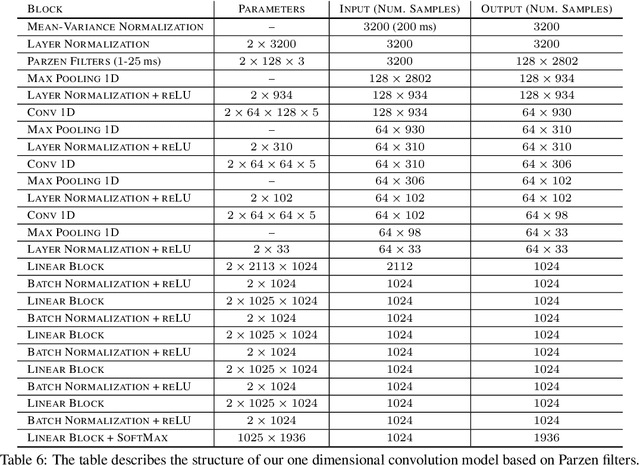

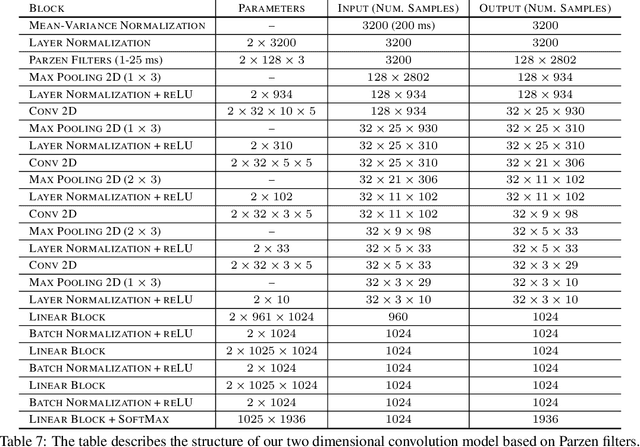

We propose a novel family of band-pass filters for efficient spectral decomposition of signals. Previous work has already established the effectiveness of representations based on static band-pass filtering of speech signals (e.g., mel-frequency cepstral coefficients and deep scattering spectrum). A potential shortcoming of these approaches is that the parameters specifying such a representation are fixed a priori rather than learned from the available data. To address this limitation, we propose a family of filters defined via cosine modulations of Parzen windows, where the modulation frequency models the center of a spectral band-pass filter and the length of a Parzen window is inversely proportional to the filter width in the spectral domain. We propose to learn such a representation using stochastic variational Bayesian inference based on Gaussian dropout posteriors and sparsity-inducing priors. Such a prior makes the integral defining the Kullback–Leibler divergence term intractable, and we propose an effective approximation of it based on Gauss–Hermite quadrature. Our empirical results demonstrate that the proposed approach is competitive with state-of-the-art models on speech recognition tasks.
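To make the filter definition concrete, the following is a minimal sketch of a cosine-modulated Parzen window in NumPy. It is an illustration under stated assumptions, not the paper's implementation: the function names, the 16 kHz sample rate, the parameterization by window length in seconds, and the half-power bandwidth measure are all hypothetical choices made here. The window uses the standard piecewise-cubic Parzen form.

```python
import numpy as np


def parzen_window(u):
    """Standard piecewise-cubic Parzen window on normalized offsets u in [-1, 1]."""
    u = np.abs(np.asarray(u, dtype=float))
    inner = 1.0 - 6.0 * u**2 + 6.0 * u**3   # branch for |u| <= 1/2
    outer = 2.0 * (1.0 - u) ** 3            # branch for 1/2 < |u| <= 1
    return np.where(u <= 0.5, inner, np.where(u <= 1.0, outer, 0.0))


def parzen_filter(center_hz, length_s, sample_rate=16000):
    """Cosine modulation of a Parzen window (hypothetical parameterization).

    center_hz sets the center of the pass band; length_s is the window
    length in seconds, so a longer window gives a narrower band.
    """
    half = length_s / 2.0
    n_half = int(round(half * sample_rate))
    t = np.arange(-n_half, n_half + 1) / sample_rate  # time axis centered at 0
    return parzen_window(t / half) * np.cos(2.0 * np.pi * center_hz * t)


def magnitude_response(h, sample_rate=16000, n_fft=8192):
    """Frequencies (Hz) and magnitude of the zero-padded filter spectrum."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)
    return freqs, np.abs(np.fft.rfft(h, n=n_fft))


def half_power_bandwidth(h, sample_rate=16000, n_fft=8192):
    """Approximate width (Hz) of the region within 3 dB of the spectral peak."""
    freqs, mag = magnitude_response(h, sample_rate, n_fft)
    above = mag >= mag.max() / np.sqrt(2.0)
    return above.sum() * (freqs[1] - freqs[0])


long_filter = parzen_filter(center_hz=1000.0, length_s=0.025)
short_filter = parzen_filter(center_hz=1000.0, length_s=0.0125)

freqs, mag = magnitude_response(long_filter)
print("peak frequency (Hz):", freqs[np.argmax(mag)])
print("bandwidth, 25 ms window (Hz):", half_power_bandwidth(long_filter))
print("bandwidth, 12.5 ms window (Hz):", half_power_bandwidth(short_filter))
```

In this sketch the spectral peak sits near the modulation frequency, and halving the window length roughly doubles the measured bandwidth, which matches the inverse relation between window length and filter width described in the abstract. In a learned representation, `center_hz` and `length_s` would be the trainable parameters.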
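The quadrature step can be illustrated in the same spirit. The abstract does not give the integrand of the Kullback–Leibler term, so the sketch below only shows the generic Gauss–Hermite rule for an expectation under a Gaussian, E[f(x)] with x ~ N(mu, sigma^2). The function name and the test integrands are assumptions chosen because their expectations have closed forms.

```python
import numpy as np
from numpy.polynomial.hermite import hermgauss


def gauss_hermite_expectation(f, mu, sigma, degree=20):
    """Approximate E[f(x)] for x ~ N(mu, sigma^2) with Gauss-Hermite quadrature.

    hermgauss returns nodes and weights for the weight function exp(-t^2),
    so the change of variables x = mu + sqrt(2) * sigma * t is applied and
    the sum is normalized by sqrt(pi).
    """
    nodes, weights = hermgauss(degree)
    x = mu + np.sqrt(2.0) * sigma * nodes
    return np.sum(weights * f(x)) / np.sqrt(np.pi)


# Sanity checks against closed forms (placeholder integrands, not the KL term):
# E[x^2] = mu^2 + sigma^2 and E[exp(x)] = exp(mu + sigma^2 / 2).
second_moment = gauss_hermite_expectation(lambda x: x**2, mu=0.5, sigma=2.0)
exp_moment = gauss_hermite_expectation(np.exp, mu=0.5, sigma=1.0)

print(second_moment, 0.5**2 + 2.0**2)
print(exp_moment, np.exp(0.5 + 0.5))
```

A fixed number of nodes keeps the approximation deterministic and cheap, which is one reason a quadrature rule is attractive for an integral that has to be evaluated at every training step.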
