Particle Filter SLAM for Vehicle Localization

Paper and Code

Feb 20, 2024

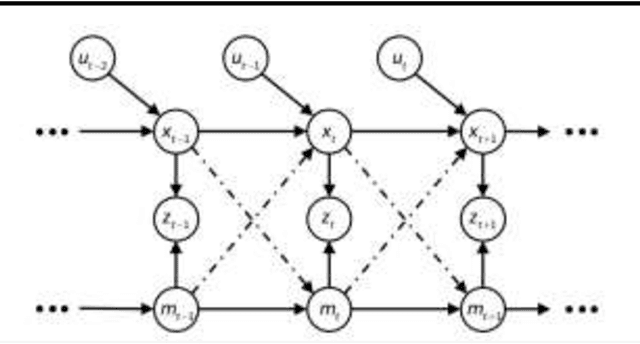

Simultaneous Localization and Mapping (SLAM) presents a formidable challenge in robotics, involving the dynamic construction of a map while concurrently determining the precise location of the robotic agent within an unfamiliar environment. This intricate task is further compounded by the inherent "chicken-and-egg" dilemma, where accurate mapping relies on a dependable estimation of the robot's location, and vice versa. Moreover, the computational intensity of SLAM adds an additional layer of complexity, making it a crucial yet demanding topic in the field. In our research, we address the challenges of SLAM by adopting the Particle Filter SLAM method. Our approach leverages encoded data and fiber optic gyro (FOG) information to enable precise estimation of vehicle motion, while lidar technology contributes to environmental perception by providing detailed insights into surrounding obstacles. The integration of these data streams culminates in the establishment of a Particle Filter SLAM framework, representing a key endeavor in this paper to effectively navigate and overcome the complexities associated with simultaneous localization and mapping in robotic systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge