Partial to Whole Knowledge Distillation: Progressive Distilling Decomposed Knowledge Boosts Student Better

Sep 26, 2021

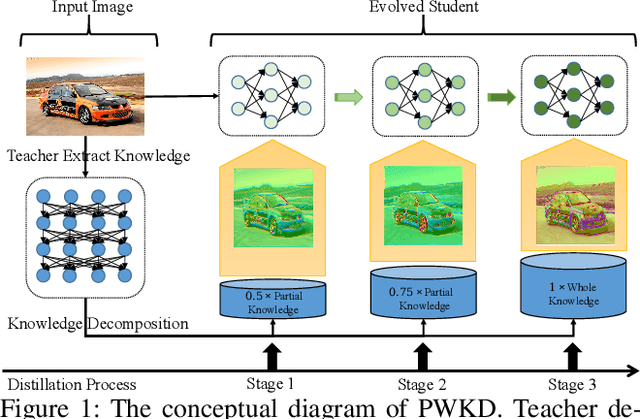

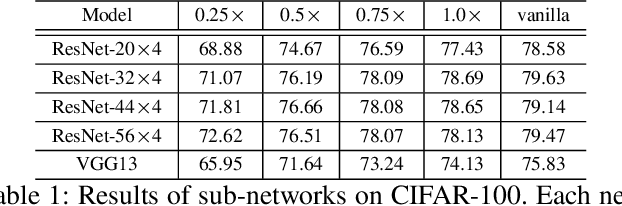

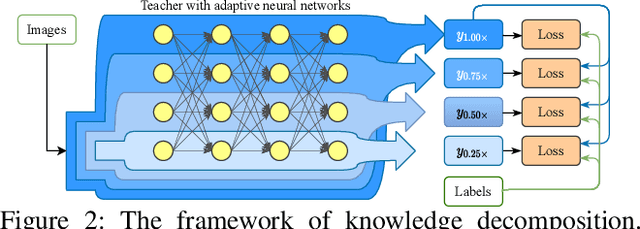

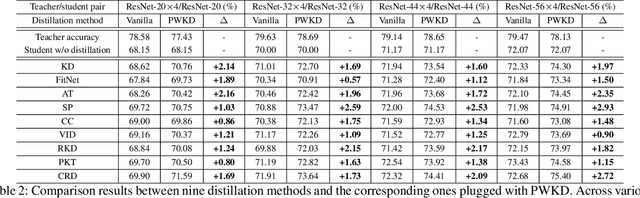

Knowledge distillation research designs various types of knowledge to shrink the performance gap between a compact student and a large-scale teacher. Existing distillation approaches focus on improving *knowledge quality* but ignore the significant influence of *knowledge quantity* on the distillation procedure. In contrast to conventional approaches, which extract knowledge from a fixed teacher computation graph, this paper explores the perspective of *knowledge quantity* to further improve the efficacy of knowledge distillation. We introduce the concept of knowledge decomposition and put forward the **P**artial to **W**hole **K**nowledge **D**istillation (**PWKD**) paradigm. Specifically, we reconstruct the teacher into weight-sharing sub-networks with the same depth but increasing channel width, and train the sub-networks jointly to obtain decomposed knowledge (sub-networks with more channels represent more knowledge). The student then extracts partial to whole knowledge from the pre-trained teacher over multiple training stages, in which a cyclic learning rate is leveraged to accelerate convergence. **PWKD** can be regarded as a plugin compatible with existing offline knowledge distillation approaches. To verify its effectiveness, we conduct experiments on two benchmark datasets, CIFAR-100 and ImageNet; the evaluation results show that **PWKD** consistently improves existing knowledge distillation approaches without bells and whistles.
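The staged procedure described in the abstract can be illustrated with a small, framework-free sketch. It is not the authors' implementation. The width fractions, the cosine form of the cyclic learning rate, the temperature-scaled KL loss (standard Hinton-style distillation, standing in for whichever offline method PWKD is plugged into), and all function names are assumptions made for illustration only.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumption: a generic distillation loss; the abstract does not fix one.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def sub_network_channels(full_channels, width_fractions):
    """Channel counts of weight-sharing sub-networks of one teacher layer.

    Each sub-network is assumed to reuse the first k channels of the layer,
    so wider sub-networks contain the narrower ones.
    """
    return [max(1, round(full_channels * f)) for f in width_fractions]


def cyclic_lr(epoch, epochs_per_stage, base_lr=0.1, min_lr=0.0):
    """Cosine decay that restarts at the beginning of every stage (assumed form)."""
    t = (epoch % epochs_per_stage) / epochs_per_stage
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t))


def pwkd_schedule(width_fractions, epochs_per_stage, base_lr=0.1):
    """List (epoch, teacher width fraction, learning rate) for every epoch.

    Stage i distills from the i-th sub-network, moving from partial to whole.
    """
    schedule = []
    for stage, fraction in enumerate(width_fractions):
        for step in range(epochs_per_stage):
            epoch = stage * epochs_per_stage + step
            lr = cyclic_lr(epoch, epochs_per_stage, base_lr)
            schedule.append((epoch, fraction, lr))
    return schedule


if __name__ == "__main__":
    fractions = [0.25, 0.5, 0.75, 1.0]  # hypothetical widths, narrow to full
    print(sub_network_channels(64, fractions))
    for epoch, fraction, lr in pwkd_schedule(fractions, epochs_per_stage=3):
        print(f"epoch {epoch:2d}  teacher width {fraction:.2f}  lr {lr:.4f}")
```

In a real training loop, each scheduled epoch would run the student against the teacher sub-network of the given width and minimize `kd_loss` (or another offline distillation objective) at the scheduled learning rate. The restart at each stage boundary is what makes the rate cyclic in this sketch.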
