Parameters Estimation for the Cosmic Microwave Background with Bayesian Neural Networks


Dec 23, 2019

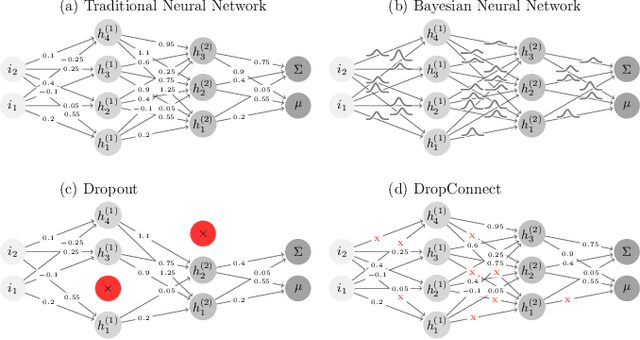

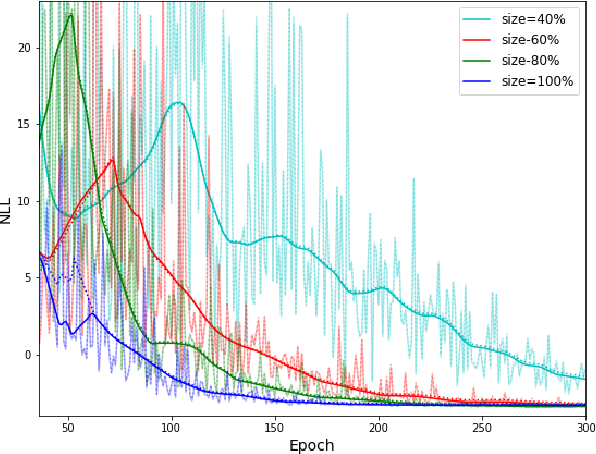

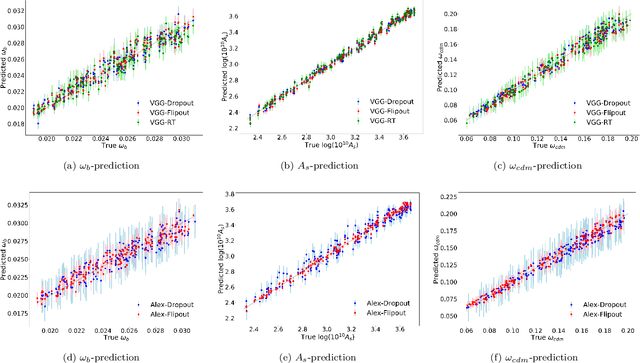

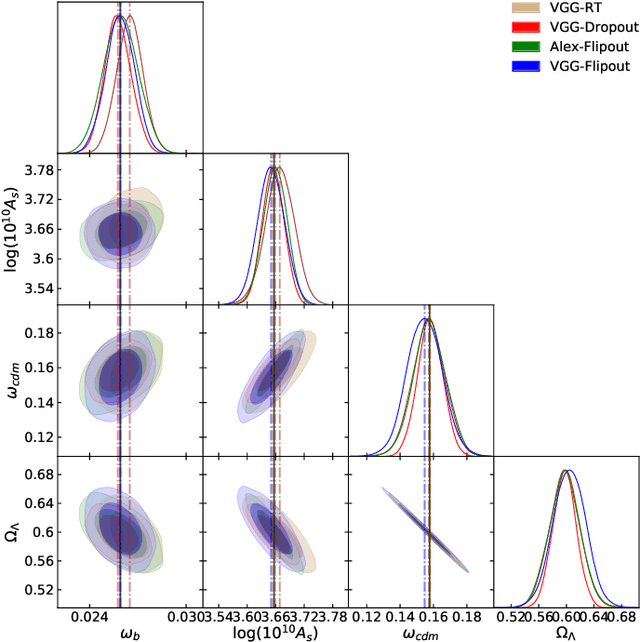

In this paper, we present the first study that compares different models of Bayesian Neural Networks (BNNs) for predicting the posterior distribution of the cosmological parameters directly from Cosmic Microwave Background temperature and polarization maps. We focus our analysis on four methods for sampling the weights of the network during training: Dropout, DropConnect, Reparameterization Trick (RT), and Flipout. We find that Flipout outperforms all other methods regardless of the architecture used, and provides tighter constraints on the cosmological parameters. Additionally, we describe existing strategies for calibrating the networks and propose new ones. We show that by tuning the regularization parameter for the scale of the approximate posterior on the weights in Flipout and RT, we can produce unbiased and reliable uncertainty estimates, i.e., the regularizer acts as a hyperparameter analogous to the dropout rate in Dropout. The best performance is nevertheless achieved with a more convenient method, in which the network parameters are left free during training to achieve the best uncalibrated performance, and the confidence intervals are then calibrated in a subsequent phase. Furthermore, we claim that correct calibration of these networks does not change the behavior of the aleatoric and epistemic uncertainties provided by BNNs when the size of the training dataset changes. The results reported in the paper can be extended to other cosmological datasets in order to capture features that can be extracted directly from the raw data, such as non-Gaussianity or foreground emissions.
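The abstract does not spell out how the confidence intervals are calibrated in the subsequent phase, so the following is only a minimal sketch of one simple possibility, not the paper's procedure. It assumes the network reports a Gaussian mean and standard deviation per parameter, and it rescales every reported standard deviation by a single factor chosen on a held-out set so that the nominal 68.27% interval reaches that empirical coverage. The function names `coverage` and `fit_sigma_scale`, and the synthetic data, are hypothetical.

```python
import math
import random


def coverage(y_true, mu, sigma, n_sigma=1.0):
    """Fraction of true values that fall inside mu +/- n_sigma * sigma."""
    hits = sum(abs(y - m) <= n_sigma * s for y, m, s in zip(y_true, mu, sigma))
    return hits / len(y_true)


def fit_sigma_scale(y_true, mu, sigma, level=0.6827):
    """Return the factor that makes mu +/- factor * sigma cover `level` of the set.

    The factor is the empirical `level`-quantile of the normalized absolute
    residuals |y - mu| / sigma (assumption: one global factor is sufficient).
    """
    z = sorted(abs(y - m) / s for y, m, s in zip(y_true, mu, sigma))
    k = min(len(z) - 1, max(0, math.ceil(level * len(z)) - 1))
    return z[k]


# Synthetic held-out set (illustrative only): the "network" is overconfident,
# reporting sigma = 0.1 while the true scatter around its mean is 0.2.
rng = random.Random(0)
n = 5000
mu = [rng.uniform(-1.0, 1.0) for _ in range(n)]
y_true = [m + rng.gauss(0.0, 0.2) for m in mu]
sigma = [0.1] * n

scale = fit_sigma_scale(y_true, mu, sigma)
sigma_cal = [scale * s for s in sigma]

before = coverage(y_true, mu, sigma)      # well below the nominal 0.6827
after = coverage(y_true, mu, sigma_cal)   # close to the nominal 0.6827
print(f"scale={scale:.2f} coverage before={before:.3f} after={after:.3f}")
```

In this sketch the calibration step leaves the predicted means untouched and only widens or narrows the intervals, which is why it can be applied after training without re-tuning the network. In practice the factor would be fitted on a validation set and checked on a separate test set.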
