Parameter-Free Spatial Attention Network for Person Re-Identification


Nov 29, 2018

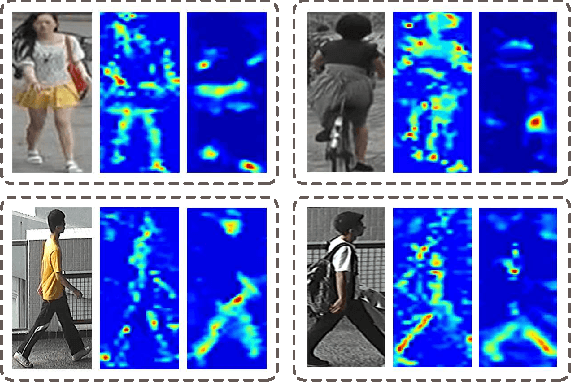

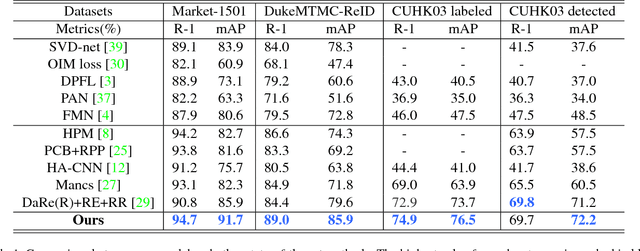

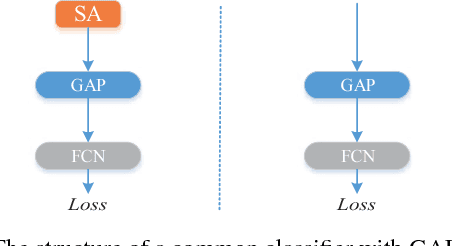

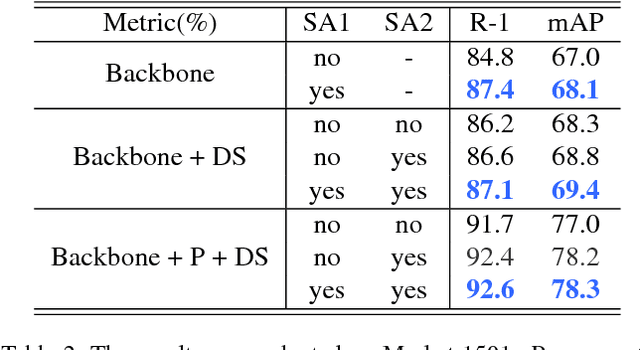

Global average pooling (GAP) makes it possible to localize discriminative information for recognition [40]. While GAP helps a convolutional neural network attend to the most discriminative features of an object, performance may suffer if that information is missing, e.g., due to camera viewpoint changes. To circumvent this issue, we argue that it is advantageous to attend to the global configuration of the object by modeling spatial relations among high-level features. We propose a novel architecture for person re-identification, based on a novel parameter-free spatial attention layer that reintroduces spatial relations among the feature map activations into the model. Our spatial attention layer consistently improves performance over the same model without it. Results on four benchmarks demonstrate the superiority of our model over the state of the art, achieving rank-1 accuracy of 94.7% on Market-1501, 89.0% on DukeMTMC-ReID, 74.9% on CUHK03-labeled, and 69.7% on CUHK03-detected.
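The abstract does not spell out how the attention layer is computed, so the following is only a minimal NumPy sketch of one plausible parameter-free form, not the authors' implementation. It assumes the attention map is obtained by summing activations over channels and applying a softmax over all spatial locations, and that the reweighted feature map is then passed to GAP. The function names `spatial_attention` and `global_average_pool`, the tensor shape, and the random input are all hypothetical choices made for illustration.

```python
import numpy as np


def global_average_pool(features):
    """Collapse a (C, H, W) feature map to a (C,) vector by averaging over space."""
    return features.mean(axis=(1, 2))


def spatial_attention(features):
    """Assumed parameter-free spatial attention: no learned weights are involved."""
    _, h, w = features.shape
    # Sum activations over channels to get one saliency score per location.
    saliency = features.sum(axis=0).reshape(-1)
    # Softmax over all H*W locations (shifted by the max for numerical stability).
    exp = np.exp(saliency - saliency.max())
    attention = (exp / exp.sum()).reshape(h, w)
    # Reweight every channel with the same spatial map.
    return features * attention[None, :, :], attention


# Hypothetical high-level feature map: 8 channels on a 4x2 spatial grid.
rng = np.random.default_rng(0)
features = rng.random((8, 4, 2))

attended, attention = spatial_attention(features)
descriptor = global_average_pool(attended)
```

Because the map in this sketch is derived purely from the activations themselves, the layer adds no trainable parameters, which is the property the abstract emphasizes. Whether the original model uses a softmax, a different normalization, or several such layers at different depths is not stated in the abstract.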
