Parameter Convex Neural Networks


Jun 11, 2022

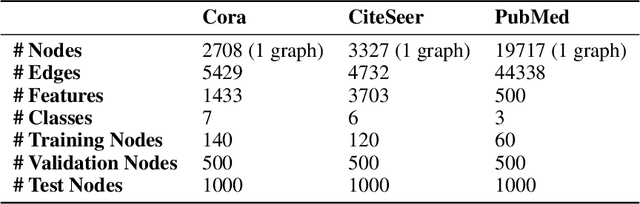

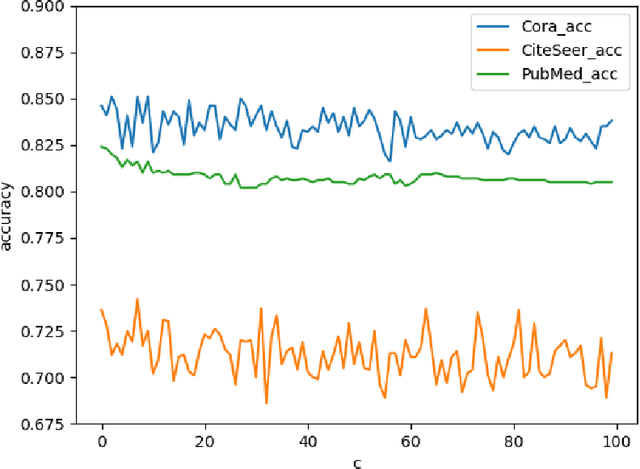

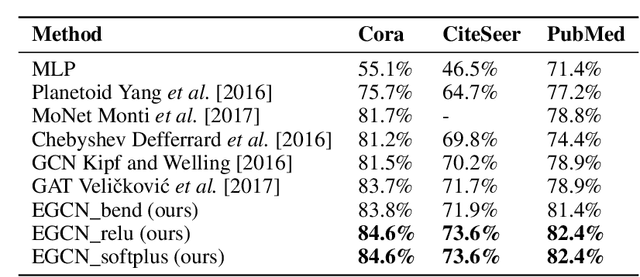

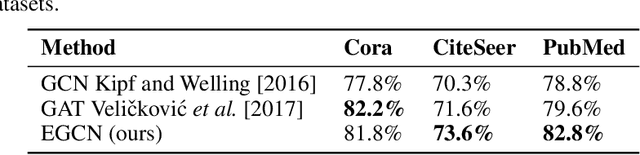

Deep learning with deep neural networks (DNNs) has recently achieved considerable success in many important areas, such as computer vision, natural language processing, and recommendation systems. The lack of convexity in DNNs has been seen as a major disadvantage for many optimization methods, such as stochastic gradient descent, and it greatly reduces the generalization of neural network applications. We argue that convexity is meaningful for neural networks and propose the exponential multilayer neural network (EMLP), a class of parameter convex neural network (PCNN) that is convex with respect to the parameters of the network under some conditions that can be realized. In addition, we propose a convexity metric for the two-layer exponential graph convolutional network (EGCN) and test how accuracy changes as the convexity metric changes. For later experiments, we use the same architecture to build the EGCN and evaluate it on a graph classification dataset, where EGCN performs better than the graph convolutional network (GCN) and the graph attention network (GAT).
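To make "convex with respect to the parameters" concrete, here is a minimal sketch of a numerical midpoint-convexity probe. It does not implement EMLP or EGCN, whose definitions are not given in this abstract. The toy objective `exp_objective`, the helper `midpoint_violations`, and the inputs `XS` are all hypothetical names chosen for illustration. The objective is a sum of exponentials of linear functions of the parameters, which is convex in those parameters; a sine function is included only as a non-convex contrast.

```python
import math
import random

# Hypothetical fixed inputs for the toy objective (not data from the paper).
_rng = random.Random(1)
XS = [[_rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(5)]

def exp_objective(w):
    """Toy objective sum_i exp(w . x_i); convex in w because exp is convex
    and w . x_i is linear in w. This is an assumption-laden stand-in, not EMLP."""
    return sum(math.exp(sum(wi * xi for wi, xi in zip(w, x))) for x in XS)

def midpoint_violations(f, dim, trials=1000, scale=1.0, tol=1e-9, seed=0):
    """Count random parameter pairs (a, b) where f((a+b)/2) exceeds
    (f(a) + f(b)) / 2, i.e. where midpoint convexity fails."""
    rng = random.Random(seed)
    count = 0
    for _ in range(trials):
        a = [rng.uniform(-scale, scale) for _ in range(dim)]
        b = [rng.uniform(-scale, scale) for _ in range(dim)]
        mid = [(u + v) / 2.0 for u, v in zip(a, b)]
        if f(mid) > 0.5 * (f(a) + f(b)) + tol:
            count += 1
    return count

# Convex toy objective: no violations expected.
convex_count = midpoint_violations(exp_objective, dim=3)
# Non-convex contrast: sin(3w) is concave on part of [-1, 1], so some pairs fail.
nonconvex_count = midpoint_violations(lambda w: math.sin(3.0 * w[0]), dim=1)
```

A probe like this can only find counterexamples; zero violations on sampled pairs is evidence of convexity over the sampled region, not a proof.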
